In CockroachDB, data is replicated and distributed across the nodes of a cluster for consistency and resiliency, and read and write requests are automatically routed between nodes as appropriate. In a single-region cluster, this behavior doesn't affect performance because network latency between nodes is sub-millisecond. In a cluster spread across multiple geographic regions, however, the distribution of data becomes a key performance bottleneck, and for that reason, it is important to think about the latency requirements of each table and then use the appropriate data topologies to locate data for optimal performance.

This tutorial walks you through the process of deploying a 9-node CockroachDB cluster across 3 US regions, 3 AZs per region, with a fictional vehicle-sharing application called MovR running concurrently in each region. Initially, you'll see the effect of network latency when requests must move back and forth across the US. Then you'll use two important multi-region data topologies, Geo-Partitioned Replicas and Duplicate Indexes, to remove this bottleneck and dramatically lower latency, with the majority of reads and writes executing in 2 milliseconds or less. Finally, you'll experience the cluster's resiliency to AZ-level failure.

See it in action

Watch a demo

Watch this webinar recording to see a demonstration of the concepts and features in this tutorial.

Read a case study

Read about how an electronic lock manufacturer and multi-national bank are using the Geo-Partitioned Replicas topology in production for improved performance and regulatory compliance.

Before you begin

- Request a trial license

- Review important concepts

- Review the cluster setup

- Review the MovR application

Request a trial license

Some CockroachDB features used in this tutorial require an Enterprise license, so request a 30-day trial license before you get started.

You should receive your trial license via email within a few minutes. You'll enable your license once your cluster is up-and-running.

Review important concepts

To understand performance in a geographically distributed CockroachDB cluster, it's important to first review how reads and writes work in CockroachDB.

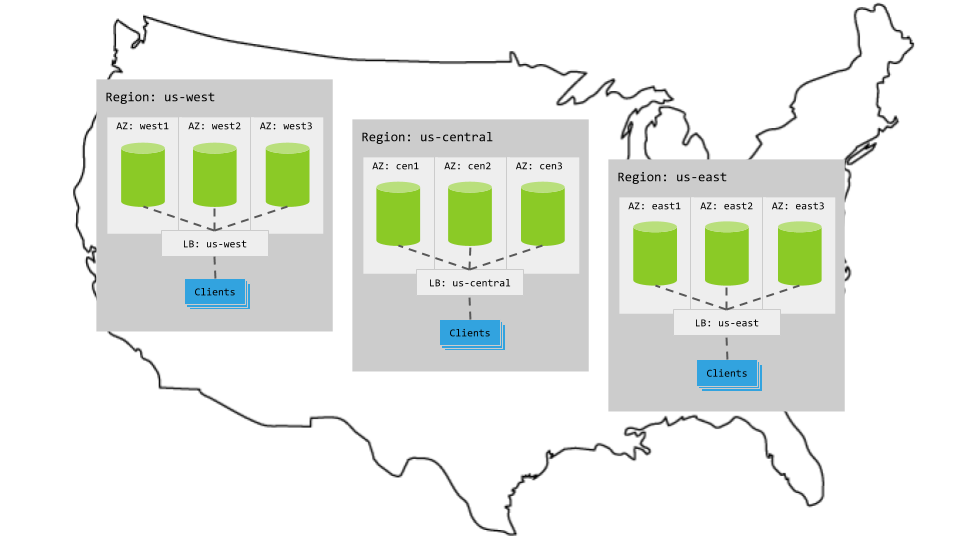

Review the cluster setup

You'll deploy a 9-node CockroachDB cluster across 3 GCE regions, with each node on a VM in a distinct availability zone for optimal resiliency:

A few notes:

- For each CockroachDB node, you'll use the

n2-standard-4machine type (4 vCPUs, 16 GB memory) with the Ubuntu 16.04 OS image and a local SSD disk. - You'll start each node with the

--localityflag describing the node's region and availability zone. Initially, this locality information will lead CockroachDB to evenly distribute data across the 3 regions. Then, it will be used to apply data topologies for lower latency. - There will be an extra VM in each region for an instance of the MovR application and the open-source HAProxy load balancer. The application in each region will be pointed at the local load balancer, which will direct connections only to the CockroachDB nodes in the same region.

Review the MovR application

For your application, you'll use our open-source, fictional, peer-to-peer vehicle-sharing app, MovR. You'll run 3 instances of MovR, one in each US region, with each instance representing users in a specific city: New York, Chicago, or Seattle.

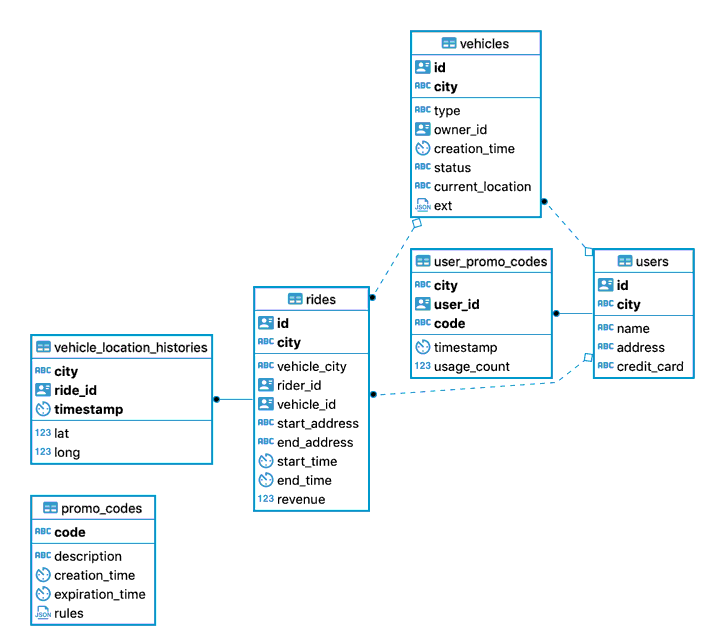

The schema

The six tables in the movr database store user, vehicle, and ride data for MovR:

| Table | Description |

|---|---|

users |

People registered for the service. |

vehicles |

The pool of vehicles available for the service. |

rides |

When and where users have rented a vehicle. |

promo_codes |

Promotional codes for users. |

user_promo_codes |

Promotional codes in use by users. |

vehicle_location_histories |

Vehicle location history. |

All of the tables except promo_codes have a multi-column primary key of city and id, with city being the first in the key. As such, the rows in these tables are geographically specific and ordered by geography. These tables are read and updated very frequently, and so to keep read and write latency low, you'll use the Geo-Partitioned Replicas topology for these tables.

In contrast, the data in the promo_codes table is not tied to geography, and the data is read frequently but rarely updated. This type of table is often referred to as a "reference table" or "lookup table". In this case, you'll use the Duplicate Indexes topology to keep just read latency very low, since that's primary.

The workflow

The workflow for MovR is as follows:

A user loads the app and sees the 25 closest vehicles.

For example:

> SELECT id, city, status FROM vehicles WHERE city='amsterdam' limit 25;The user signs up for the service.

For example:

> INSERT INTO users (id, name, address, city, credit_card) VALUES ('66666666-6666-4400-8000-00000000000f', 'Mariah Lam', '88194 Angela Gardens Suite 60', 'amsterdam', '123245696');Note:Usually for Universally Unique Identifier (UUID) you would need to generate it automatically but for the sake of this follow up we will use predetermined UUID to keep track of them in our examples.In some cases, the user adds their own vehicle to share.

For example:

> INSERT INTO vehicles (id, city, type, owner_id,creation_time,status, current_location, ext) VALUES ('ffffffff-ffff-4400-8000-00000000000f', 'amsterdam', 'skateboard', '66666666-6666-4400-8000-00000000000f', current_timestamp(), 'available', '88194 Angela Gardens Suite 60', '{"color": "blue"}');More often, the user reserves a vehicle and starts a ride, applying a promo code, if available and valid.

For example:

> SELECT code FROM user_promo_codes WHERE user_id ='66666666-6666-4400-8000-00000000000f';> UPDATE vehicles SET status = 'in_use' WHERE id='bbbbbbbb-bbbb-4800-8000-00000000000b';> INSERT INTO rides (id, city, vehicle_city, rider_id, vehicle_id, start_address,end_address, start_time, end_time, revenue) VALUES ('cd032f56-cf1a-4800-8000-00000000066f', 'amsterdam', 'amsterdam', '66666666-6666-4400-8000-00000000000f', 'bbbbbbbb-bbbb-4800-8000-00000000000b', '70458 Mary Crest', '', TIMESTAMP '2020-10-01 10:00:00.123456', NULL, 0.0);During the ride, MovR tracks the location of the vehicle.

For example:

> INSERT INTO vehicle_location_histories (city, ride_id, timestamp, lat, long) VALUES ('amsterdam', 'cd032f56-cf1a-4800-8000-00000000066f', current_timestamp(), -101, 60);The user ends the ride and releases the vehicle.

For example:

> UPDATE vehicles SET status = 'available' WHERE id='bbbbbbbb-bbbb-4800-8000-00000000000b';> UPDATE rides SET end_address ='33862 Charles Junctions Apt. 49', end_time=TIMESTAMP '2020-10-01 10:30:00.123456', revenue=88.6 WHERE id='cd032f56-cf1a-4800-8000-00000000066f';

Step 1. Set up the environment

Configure your network

CockroachDB requires TCP communication on two ports:

- 26257 (

tcp:26257) for inter-node communication (i.e., working as a cluster) - 8080 (

tcp:8080) for accessing the DB Console

Since GCE instances communicate on their internal IP addresses by default, you do not need to take any action to enable inter-node communication. However, to access the DB Console from your local network, you must create a firewall rule for your project:

| Field | Recommended Value |

|---|---|

| Name | cockroachweb |

| Source filter | IP ranges |

| Source IP ranges | Your local network's IP ranges |

| Allowed protocols | tcp:8080 |

| Target tags | cockroachdb |

The tag feature will let you easily apply the rule to your instances.

Provision VMs

You need 9 VMs across 3 GCE regions, 3 per region with each VM in a distinct availability zone. You also need 3 extra VMs, 1 per region, for a region-specific version of MovR and the HAProxy load balancer.

Create 9 VMs for CockroachDB nodes.

When creating each VM:

- Use the

n2-standard-4machine type (4 vCPUs, 16 GB memory) and the Ubuntu 16.04 OS image. Select one of the following region and availability zone configurations. Be sure to use each region/availability combination only once.

VM Region Availability Zone 1 us-east1us-east1-b2 us-east1us-east1-c3 us-east1us-east1-d4 us-central1us-central1-a5 us-central1us-central1-b6 us-central1us-central1-c7 us-west1us-west1-a8 us-west1us-west1-b9 us-west1us-west1-cTo apply the DB Console firewall rule you created earlier, click Management, disk, networking, SSH keys, select the Networking tab, and then enter

cockroachdbin the Network tags field.

- Use the

Create 3 VMs for the region-specific versions of MovR and HAProxy, one in each of the regions mentioned above, using same machine types and OS image as mentioned above.

Note the internal IP address of each VM. You'll need these addresses when starting the CockroachDB nodes, configuring HAProxy, and running the MovR application.

Step 2. Start CockroachDB

Now that you have VMs in place, start your CockroachDB cluster across the three US regions.

Start nodes in US East

SSH to the first VM in the US East region where you want to run a CockroachDB node.

Download the CockroachDB archive for Linux, extract the binary, and copy it into the

PATH:$ curl https://binaries.cockroachdb.com/cockroach-v20.2.19.linux-amd64.tgz \ | tar -xz$ sudo cp -i cockroach-v20.2.19.linux-amd64/cockroach /usr/local/bin/Run the

cockroach startcommand:$ cockroach start \ --insecure \ --advertise-addr=<node1 internal address> \ --join=<node1 internal address>:26257,<node2 internal address>:26257,<node3 internal address>:26257 \ --locality=cloud=gce,region=us-east1,zone=<relevant zone> \ --cache=.25 \ --max-sql-memory=.25 \ --backgroundRepeat steps 1 - 3 for the other two CockroachDB nodes in the region. Each time, be sure to:

- Adjust the

--advertise-addrflag. - Use the appropriate availability zone of the VM in the

zoneportion of the--localityflag.

- Adjust the

Start nodes in US Central

SSH to the first VM in the US Central region where you want to run a CockroachDB node.

Download the CockroachDB archive for Linux, extract the binary, and copy it into the

PATH:$ curl https://binaries.cockroachdb.com/cockroach-v20.2.19.linux-amd64.tgz \ | tar -xz$ sudo cp -i cockroach-v20.2.19.linux-amd64/cockroach /usr/local/bin/Run the

cockroach startcommand:$ cockroach start \ --insecure \ --advertise-addr=<node1 internal address> \ --join=<node1 internal address>:26257,<node2 internal address>:26257,<node3 internal address>:26257 \ --locality=cloud=gce,region=us-central1,zone=<relevant zone> \ --cache=.25 \ --max-sql-memory=.25 \ --backgroundRepeat steps 1 - 3 for the other two CockroachDB nodes in the region. Each time, be sure to:

- Adjust the

--advertise-addrflag. - Use the appropriate availability zone of the VM in the

zoneportion of the--localityflag.

- Adjust the

Start nodes in US West

SSH to the first VM in the US West region where you want to run a CockroachDB node.

Download the CockroachDB archive for Linux, extract the binary, and copy it into the

PATH:$ curl https://binaries.cockroachdb.com/cockroach-v20.2.19.linux-amd64.tgz \ | tar -xz$ sudo cp -i cockroach-v20.2.19.linux-amd64/cockroach /usr/local/bin/Run the

cockroach startcommand:$ cockroach start \ --insecure \ --advertise-addr=<node1 internal address> \ --join=<node1 internal address>:26257,<node2 internal address>:26257,<node3 internal address>:26257 \ --locality=cloud=gce,region=us-west1,zone=<relevant zone> \ --cache=.25 \ --max-sql-memory=.25 \ --backgroundRepeat steps 1 - 3 for the other two CockroachDB nodes in the region. Each time, be sure to:

- Adjust the

--advertise-addrflag. - Use the appropriate availability zone of the VM in the

zoneportion of the--localityflag.

- Adjust the

Initialize the cluster

On any of the VMs, run the one-time cockroach init command to join the first nodes into a cluster:

$ cockroach init --insecure --host=<address of any node>

Step 3. Start MovR

- Set up the client VMs

- Configure the cluster for MovR

- Start MovR in US East

- Start MovR in US Central

- Start MovR in US West

Set up the client VMs

Next, install Docker and HAProxy on each client VM. Docker is required so you can later run MovR from a Docker image, and HAProxy will serve as the region-specific load balancer for MovR in each region.

SSH to the VM in the US East region where you want to run MovR and HAProxy.

Install Docker:

$ sudo apt-get update && \ sudo apt-get install -y apt-transport-https ca-certificates curl gnupg-agent software-properties-common && \ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add - && \ sudo apt-key fingerprint 0EBFCD88 && \ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" && \ sudo apt-get update && \ sudo apt-get install -y docker-ce docker-ce-cli containerd.ioIf you get an error, run one command at a time or follow the official Docker instructions.

Install HAProxy:

$ sudo apt-get update$ sudo apt-get install haproxyDownload the CockroachDB archive for Linux, extract the binary, and copy it into the

PATH:$ curl https://binaries.cockroachdb.com/cockroach-v20.2.19.linux-amd64.tgz \ | tar -xz$ sudo cp -i cockroach-v20.2.19.linux-amd64/cockroach /usr/local/bin/The

cockroachbinary needs to be on these VMs so you can run some client commands built into the binary, such as the command in the next step and the command for starting the built-in SQL shell.Run the

cockroach gen haproxycommand to generate an HAProxy config file, specifying the address of any CockroachDB node and the--localityof nodes in the US East region:$ cockroach gen haproxy \ --insecure \ --host=<address of any node> \ --locality=region=us-east1The generated configuration file is called

haproxy.cfgand looks as follows, with theserveraddresses pre-populated with just the nodes in US East based on the--localityflag used:global maxconn 4096 defaults mode tcp # Timeout values should be configured for your specific use. # See: https://cbonte.github.io/haproxy-dconv/1.8/configuration.html#4-timeout%20connect timeout connect 10s timeout client 1m timeout server 1m # TCP keep-alive on client side. Server already enables them. option clitcpka listen psql bind :26257 mode tcp balance roundrobin option httpchk GET /health?ready=1 server cockroach1 <node1 address>:26257 check port 8080 server cockroach2 <node2 address>:26257 check port 8080 server cockroach3 <node3 address>:26257 check port 8080Start HAProxy, with the

-fflag pointing to thehaproxy.cfgfile:$ haproxy -f haproxy.cfg &Repeat the steps above for the client VMs in the other two regions. For each region, be sure to adjust the

--localityflag when running thecockroach gen haproxycommand.

Configure the cluster for MovR

Before you can run MovR against the cluster, you must create a movr database and enable an Enterprise license.

SSH to the client VM in the US East region.

Use the

cockroach sqlcommand to start the built-in SQL shell, specifying the address of the HAProxy load balancer in the region:$ cockroach sql --insecure --host=<address of HAProxy in US East>In the SQL shell, create the

movrdatabase:> CREATE DATABASE movr;Enable the trial license you requested earlier:

> SET CLUSTER SETTING cluster.organization = '<your organization>';> SET CLUSTER SETTING enterprise.license = '<your license key>';Set the longitude and latitude of the regions where you are running CockroachDB nodes:

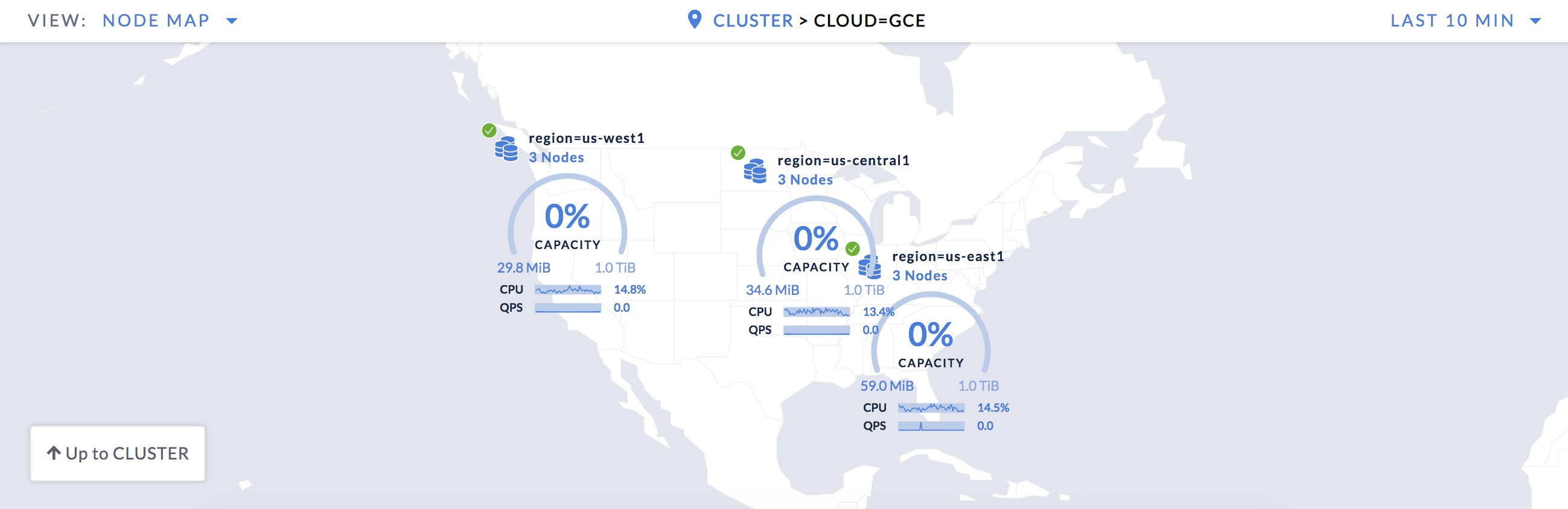

> INSERT into system.locations VALUES ('region', 'us-east1', 33.836082, -81.163727), ('region', 'us-central1', 42.032974, -93.581543), ('region', 'us-west1', 43.804133, -120.554201);Inserting these coordinates enables you to visualize your cluster on the Node Map feature of the DB Console.

Exit the SQL shell:

\q

Start MovR in US East

Be sure to use the exact version of MovR specified in the commands: movr:19.09.2. This tutorial relies on the SQL schema in this specific version.

Still on the client VM in the US East region, load the MovR schema and initial data for the cities of New York, Chicago, and Seattle, pointing at the address of the US East load balancer:

$ sudo docker run --rm cockroachdb/movr:19.09.2 \ --app-name "movr-load" \ --url "postgres://root@<address of HAProxy in US East>:26257/movr?sslmode=disable" \ load \ --num-users 100 \ --num-rides 100 \ --num-vehicles 10 \ --city "new york" \ --city "chicago" \ --city "seattle"After the Docker image downloads, you'll see data being generated for the specified cities:

... [INFO] (MainThread) initializing tables [INFO] (MainThread) loading cities ['new york', 'chicago', 'seattle'] [INFO] (MainThread) loading movr data with ~100 users, ~10 vehicles, and ~100 rides [INFO] (MainThread) Only using 3 of 5 requested threads, since we only create at most one thread per city [INFO] (Thread-1 ) Generating user data for new york... [INFO] (Thread-2 ) Generating user data for chicago... [INFO] (Thread-3 ) Generating user data for seattle... [INFO] (Thread-2 ) Generating vehicle data for chicago... [INFO] (Thread-3 ) Generating vehicle data for seattle... [INFO] (Thread-1 ) Generating vehicle data for new york... [INFO] (Thread-2 ) Generating ride data for chicago... [INFO] (Thread-3 ) Generating ride data for seattle... [INFO] (Thread-1 ) Generating ride data for new york... [INFO] (Thread-2 ) populated chicago in 9.173931 seconds [INFO] (Thread-3 ) populated seattle in 9.257723 seconds [INFO] (Thread-1 ) populated new york in 9.386243 seconds [INFO] (MainThread) populated 3 cities in 20.587325 seconds [INFO] (MainThread) - 4.954505 users/second [INFO] (MainThread) - 4.954505 rides/second [INFO] (MainThread) - 0.582883 vehicles/secondStart MovR in the US East region, representing users in New York. Be sure to point at the address of the US East load balancer:

$ sudo docker run -it --rm cockroachdb/movr:19.09.2 \ --app-name "movr-east" \ --url "postgres://root@<address of HAProxy in US East>:26257/movr?sslmode=disable" \ --num-threads=15 \ run \ --city="new york"

Start MovR in US Central

SSH to the client VM in the US Central region.

Start MovR in the US Central region, representing users in Chicago. Be sure to point at the address of the US Central load balancer:

$ sudo docker run -it --rm cockroachdb/movr:19.09.2 \ --app-name "movr-central" \ --url "postgres://root@<address of HAProxy in US Central>:26257/movr?sslmode=disable" \ --num-threads=15 \ run \ --city="chicago"

Start MovR in US West

SSH to the client VM in the US West region.

Start MovR in the US West region, representing users in Seattle. Be sure to point at the address of the US West load balancer:

$ sudo docker run -it --rm cockroachdb/movr:19.09.2 \ --app-name "movr-west" \ --url "postgres://root@<address of HAProxy in US West>:26257/movr?sslmode=disable" \ --num-threads=15 \ run \ --city="seattle"

Step 4. Access the DB Console

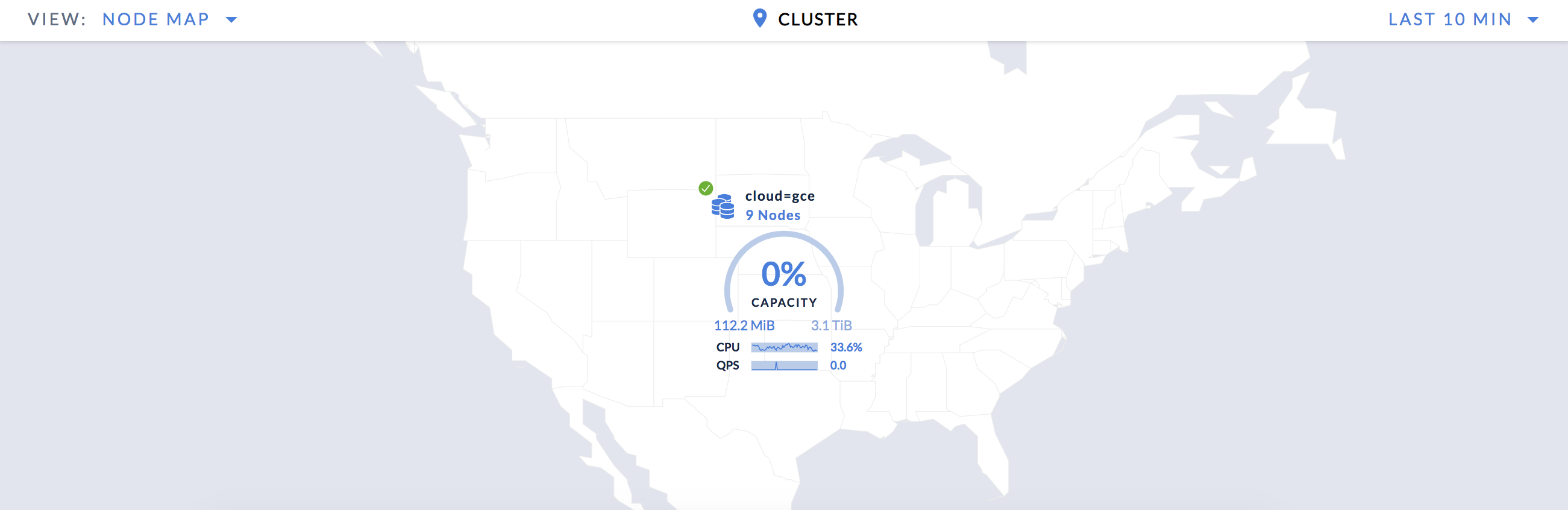

Now that you've deployed and configured your cluster, take a look at it in the DB Console:

Open a browser and go to

http://<external address of any node>:8080.On the Cluster Overview page, select View: Node Map to access the Node Map, which visualizes your CockroachDB cluster on a map of the US:

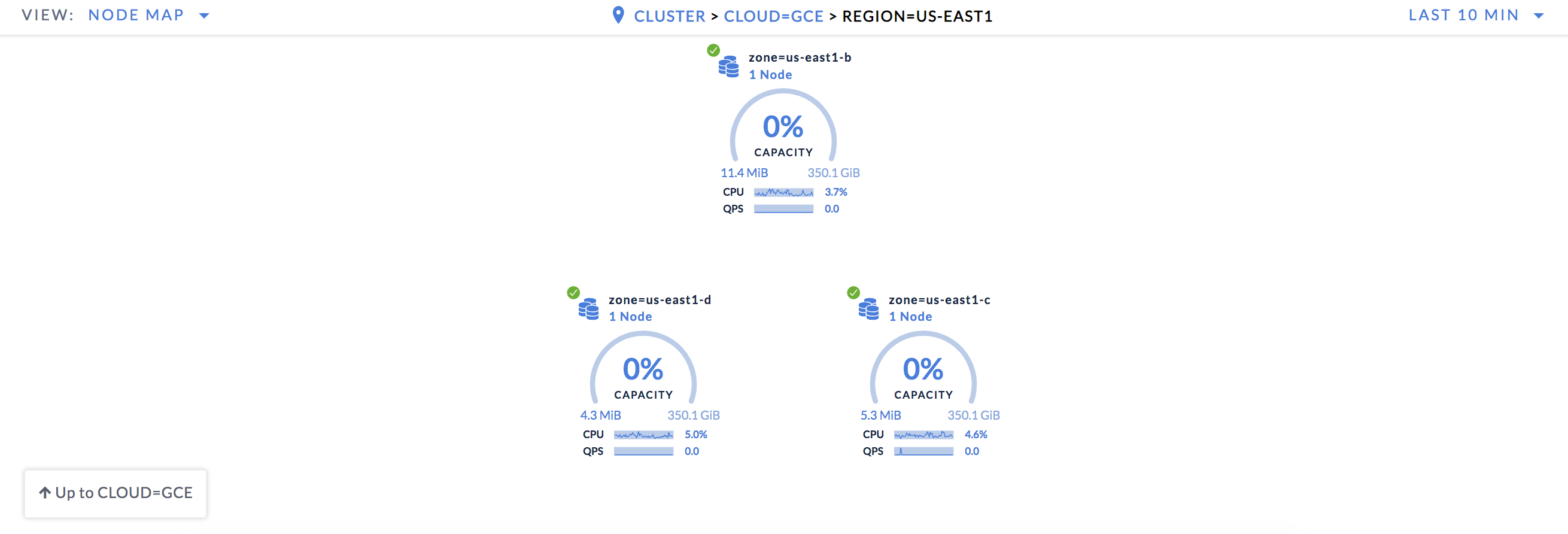

Drill down one level to see your nodes across 3 regions:

Drill into a region to see that each node is in a distinct availability zone:

Step 5. Check latency

Use the DB Console to see the effect of network latency before applying multi-region data topologies.

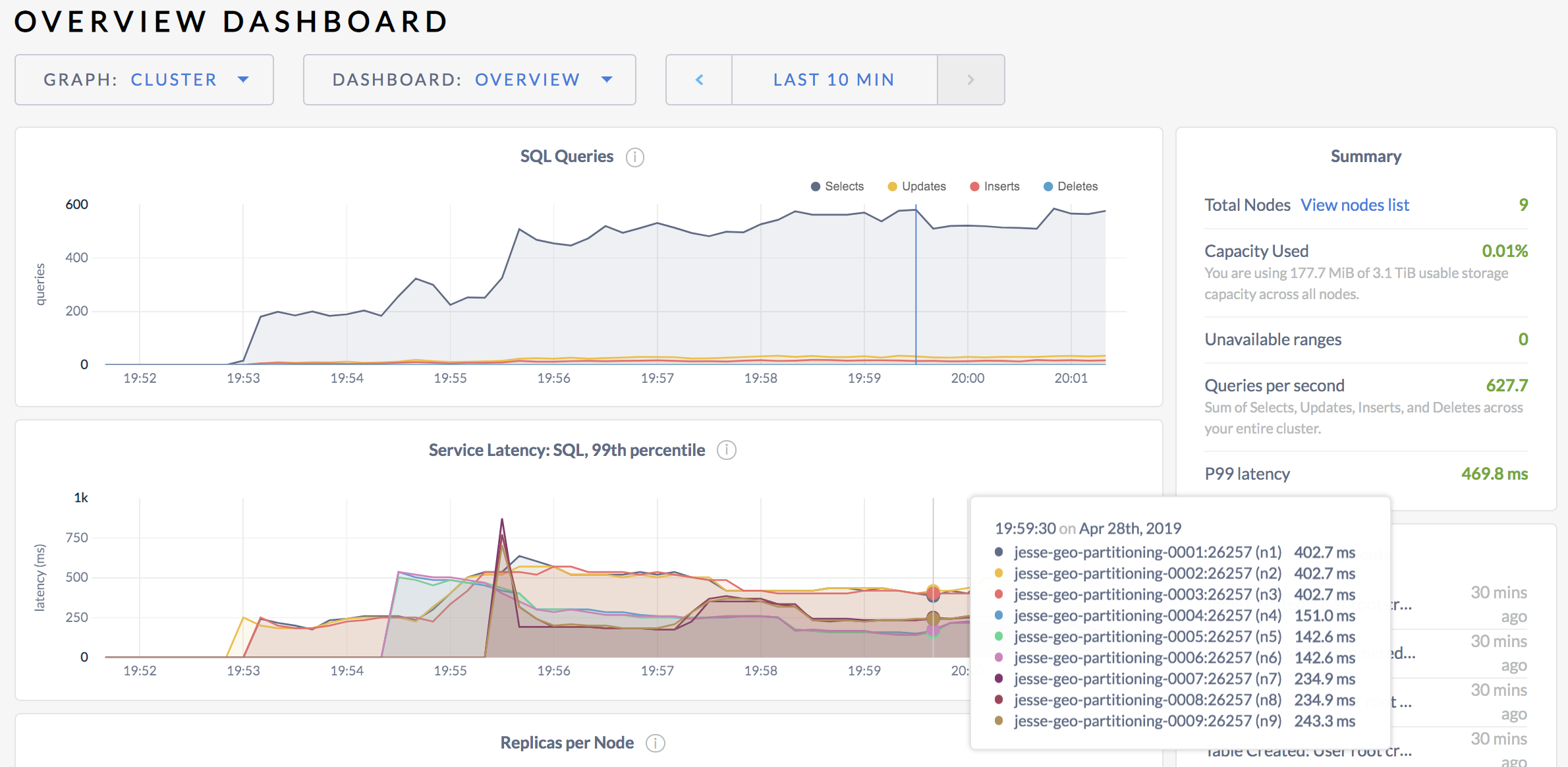

Still in the DB Console, click Metrics on the left and hover over the Service Latency: SQL, 99th percentile timeseries graph:

For each node, you'll see that the max latency of 99% of queries is in the 100s of milliseconds. To understand why SQL latency is so high, it's important to first look at how long it takes requests to physically travel between the nodes in your cluster.

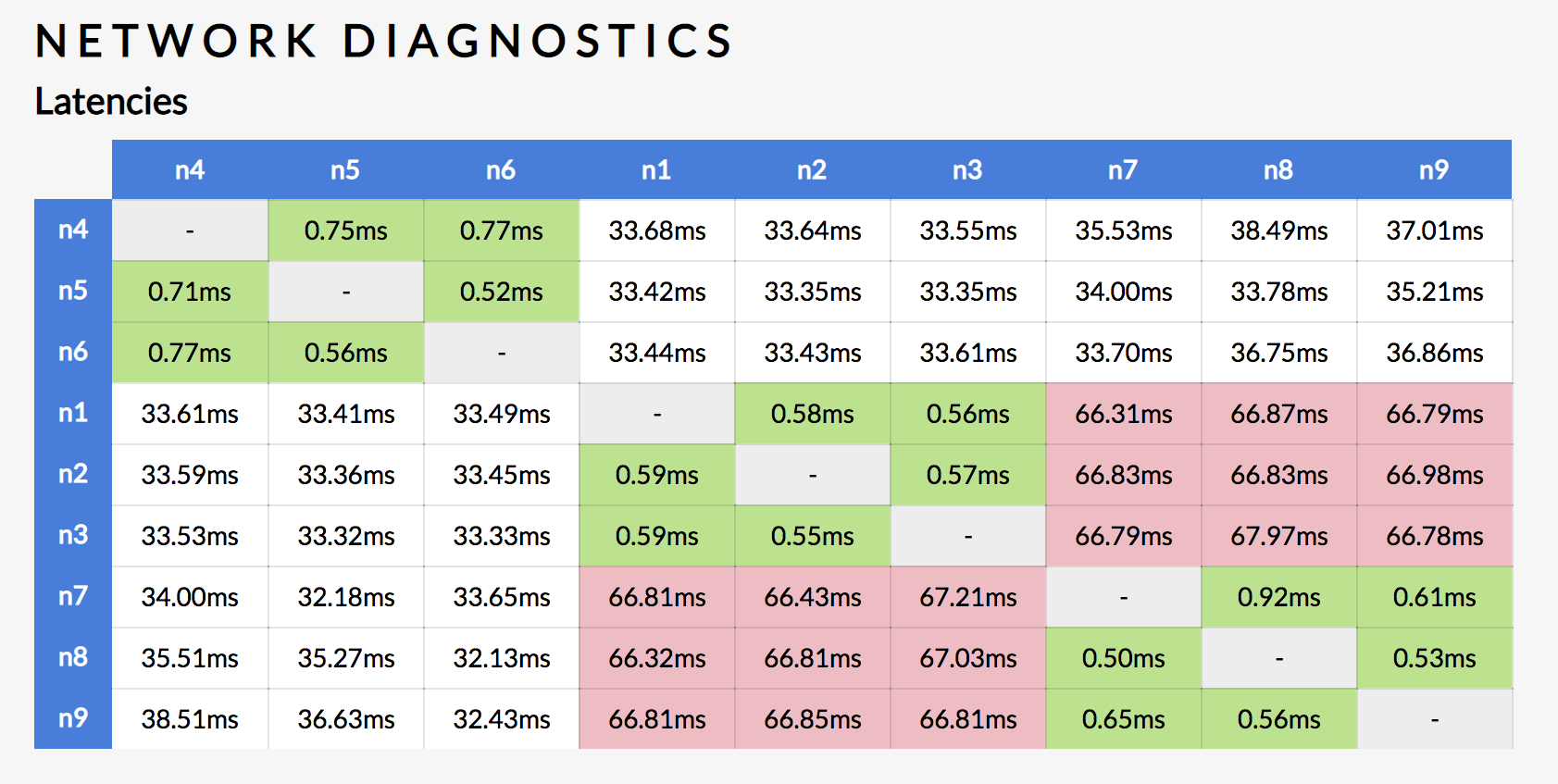

Click Network Latency in the left-hand navigation:

The Network Latency page shows the round-trip latency between any two nodes in your cluster. Here's a node/region mapping:

Nodes Region 1 - 3 us-east14 - 6 us-central17 - 9 us-west1As you can see, within a single region, round-trip latency is sub-millisecond. For example, between nodes 5 and 6 in the

us-central1region, round-trip latency is 0.56ms. However, between nodes in different regions, round-trip latency is significantly higher. For example, between node 2 inus-east1and node 7 inus-west, round-trip latency is 66.43ms.

Step 6. Check replica distribution

With network latency in mind, now use the built-in SQL shell to check the distribution of replicas. This will help us understand how SQL queries are moving between the nodes of the cluster and, thus, incurring latency.

SSH to the client VM in any region.

Use the

cockroach sqlcommand to start the built-in SQL shell, specifying the address of the HAProxy load balancer in the region:$ cockroach sql --insecure --database=movr --host=<address of HAProxy in region>In the SQL shell, use the

SHOW RANGESstatement to view the location of replicas for the tables and their secondary indexes:> SHOW RANGES FROM TABLE users; SHOW RANGES FROM TABLE vehicles; SHOW RANGES FROM INDEX vehicles_auto_index_fk_city_ref_users; SHOW RANGES FROM TABLE rides; SHOW RANGES FROM INDEX rides_auto_index_fk_city_ref_users; SHOW RANGES FROM INDEX rides_auto_index_fk_vehicle_city_ref_vehicles; SHOW RANGES FROM TABLE user_promo_codes; SHOW RANGES FROM TABLE vehicle_location_histories;start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 85 | 0.158604 | 1 | cloud=gce,region=us-east1,zone=us-east1-b | {1,6,8} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-b"} (1 row) Time: 750.045316ms start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 37 | 0.298143 | 3 | cloud=gce,region=us-east1,zone=us-east1-d | {3,6,7} | {"cloud=gce,region=us-east1,zone=us-east1-d","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-a"} (1 row) Time: 473.718371ms start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 37 | 0.302683 | 3 | cloud=gce,region=us-east1,zone=us-east1-d | {3,6,7} | {"cloud=gce,region=us-east1,zone=us-east1-d","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-a"} (1 row) Time: 2.556900719s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 39 | 0.563349 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {3,6,7} | {"cloud=gce,region=us-east1,zone=us-east1-d","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-a"} (1 row) Time: 673.337559ms start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 39 | 0.571556 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {3,6,7} | {"cloud=gce,region=us-east1,zone=us-east1-d","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-a"} (1 row) Time: 3.184113514s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 39 | 0.57792 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {3,6,7} | {"cloud=gce,region=us-east1,zone=us-east1-d","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-a"} (1 row) Time: 2.812128768s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 38 | 0.054887 | 9 | cloud=gce,region=us-west1,zone=us-west1-c | {2,6,9} | {"cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-c"} (1 row) Time: 896.010317ms start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 86 | 2.197216 | 6 | cloud=gce,region=us-central1,zone=us-central1-c | {2,6,7} | {"cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-a"} (1 row) Time: 708.643369msHere's the node/region mapping again:

Nodes Region 1 - 3 us-east14 - 6 us-central17 - 9 us-west1You'll see that most tables and indexes map to a single range, and each range has 3 replicas spread across regions with one replica identified as

lease_holder.Thinking back to how reads and writes work in CockroachDB, this tells you that many reads are leaving their region to reach the relevant leaseholder replica, and all writes are spanning regions to achieve Raft consensus. This explains the currently high latencies.

For example, based on the output above, the replicas for the

userstable are on nodes 1, 6, and 8, with the leaseholder on node 1. This means that when a user in Seattle registers for the MovR service:- A request to write a row to the

userstable goes through the load balancer in US west to a gateway node in US west. - The request is routed to the leaseholder on node 1 in US east.

- The leaseholder waits for consensus from a replica in US central or US west.

- The leaseholder returns acknowledgement to the gateway node in the US west.

- The gateway node responds to the client.

- A request to write a row to the

Step 7. Apply data topologies

Partition geo-specific tables

As mentioned earlier, all of the tables except promo_codes are geographically specific, ordered by city, and read and updated very frequently. For these tables, the most effective way to prevent the high latency resulting from cross-region operations is to apply the Geo-Partitioned Replicas data topology. In practice, you will tell CockroachDB to partition these tables and their secondary indexes by city, each partition becoming its own range of 3 replicas. You will then tell CockroachDB to pin each partition (all of its replicas) to the relevant region. This means that reads and writes on these tables will always have access to the relevant replicas in each region and, therefore, will have low, intra-region latencies.

Back in the SQL shell on one of your client VMs, use

ALTER TABLE/INDEX ... PARTITION BYstatements to define partitions bycityfor the geo-specific tables and their secondary indexes:> ALTER TABLE users PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER TABLE vehicles PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER INDEX vehicles_auto_index_fk_city_ref_users PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER TABLE rides PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER INDEX rides_auto_index_fk_city_ref_users PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER INDEX rides_auto_index_fk_vehicle_city_ref_vehicles PARTITION BY LIST (vehicle_city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER TABLE user_promo_codes PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );> ALTER TABLE vehicle_location_histories PARTITION BY LIST (city) ( PARTITION new_york VALUES IN ('new york'), PARTITION chicago VALUES IN ('chicago'), PARTITION seattle VALUES IN ('seattle') );Use the

SHOW CREATE TABLEstatement to review the partition definition for one of the geo-specific tables:Tip:The warning at the bottom tells you that partitions are not yet applied because corresponding replication zones still need to be created.

> SHOW CREATE TABLE vehicles;table_name | create_statement +------------+-----------------------------------------------------------------------------------------------------+ vehicles | CREATE TABLE vehicles ( | id UUID NOT NULL, | city VARCHAR NOT NULL, | type VARCHAR NULL, | owner_id UUID NULL, | creation_time TIMESTAMP NULL, | status VARCHAR NULL, | current_location VARCHAR NULL, | ext JSONB NULL, | CONSTRAINT "primary" PRIMARY KEY (city ASC, id ASC), | CONSTRAINT fk_city_ref_users FOREIGN KEY (city, owner_id) REFERENCES users(city, id), | INDEX vehicles_auto_index_fk_city_ref_users (city ASC, owner_id ASC) PARTITION BY LIST (city) ( | PARTITION new_york VALUES IN (('new york')), | PARTITION chicago VALUES IN (('chicago')), | PARTITION seattle VALUES IN (('seattle')) | ), | FAMILY "primary" (id, city, type, owner_id, creation_time, status, current_location, ext) | ) PARTITION BY LIST (city) ( | PARTITION new_york VALUES IN (('new york')), | PARTITION chicago VALUES IN (('chicago')), | PARTITION seattle VALUES IN (('seattle')) | ) | -- Warning: Partitioned table with no zone configurations. (1 row)Use

ALTER PARTITION ... CONFIGURE ZONEstatements to create replication zones that pin each partition to nodes in the relevant region, using the localities specified when nodes were started:Tip:The

<table>@*syntax lets you create zone configurations for all identically named partitions of a table, saving you multiple steps.> ALTER PARTITION new_york OF INDEX movr.users@* CONFIGURE ZONE USING constraints='[+region=us-east1]'; ALTER PARTITION chicago OF INDEX movr.users@* CONFIGURE ZONE USING constraints='[+region=us-central1]'; ALTER PARTITION seattle OF INDEX movr.users@* CONFIGURE ZONE USING constraints='[+region=us-west1]';> ALTER PARTITION new_york OF INDEX movr.vehicles@* CONFIGURE ZONE USING constraints='[+region=us-east1]'; ALTER PARTITION chicago OF INDEX movr.vehicles@* CONFIGURE ZONE USING constraints='[+region=us-central1]'; ALTER PARTITION seattle OF INDEX movr.vehicles@* CONFIGURE ZONE USING constraints='[+region=us-west1]';> ALTER PARTITION new_york OF INDEX movr.rides@* CONFIGURE ZONE USING constraints='[+region=us-east1]'; ALTER PARTITION chicago OF INDEX movr.rides@* CONFIGURE ZONE USING constraints='[+region=us-central1]'; ALTER PARTITION seattle OF INDEX movr.rides@* CONFIGURE ZONE USING constraints='[+region=us-west1]';> ALTER PARTITION new_york OF INDEX movr.user_promo_codes@* CONFIGURE ZONE USING constraints='[+region=us-east1]'; ALTER PARTITION chicago OF INDEX movr.user_promo_codes@* CONFIGURE ZONE USING constraints='[+region=us-central1]'; ALTER PARTITION seattle OF INDEX movr.user_promo_codes@* CONFIGURE ZONE USING constraints='[+region=us-west1]';> ALTER PARTITION new_york OF INDEX movr.vehicle_location_histories@* CONFIGURE ZONE USING constraints='[+region=us-east1]'; ALTER PARTITION chicago OF INDEX movr.vehicle_location_histories@* CONFIGURE ZONE USING constraints='[+region=us-central1]'; ALTER PARTITION seattle OF INDEX movr.vehicle_location_histories@* CONFIGURE ZONE USING constraints='[+region=us-west1]';At this point, you can use the

SHOW CREATE TABLEstatement to confirm that partitions are in effect:> SHOW CREATE TABLE vehicles;table_name | create_statement +------------+-------------------------------------------------------------------------------------------------------------------+ vehicles | CREATE TABLE vehicles ( | id UUID NOT NULL, | city VARCHAR NOT NULL, | type VARCHAR NULL, | owner_id UUID NULL, | creation_time TIMESTAMP NULL, | status VARCHAR NULL, | current_location VARCHAR NULL, | ext JSONB NULL, | CONSTRAINT "primary" PRIMARY KEY (city ASC, id ASC), | CONSTRAINT fk_city_ref_users FOREIGN KEY (city, owner_id) REFERENCES users(city, id), | INDEX vehicles_auto_index_fk_city_ref_users (city ASC, owner_id ASC) PARTITION BY LIST (city) ( | PARTITION new_york VALUES IN (('new york')), | PARTITION chicago VALUES IN (('chicago')), | PARTITION seattle VALUES IN (('seattle')) | ), | FAMILY "primary" (id, city, type, owner_id, creation_time, status, current_location, ext) | ) PARTITION BY LIST (city) ( | PARTITION new_york VALUES IN (('new york')), | PARTITION chicago VALUES IN (('chicago')), | PARTITION seattle VALUES IN (('seattle')) | ); | ALTER PARTITION chicago OF INDEX movr.public.vehicles@primary CONFIGURE ZONE USING | constraints = '[+region=us-central1]'; | ALTER PARTITION new_york OF INDEX movr.public.vehicles@primary CONFIGURE ZONE USING | constraints = '[+region=us-east1]'; | ALTER PARTITION seattle OF INDEX movr.public.vehicles@primary CONFIGURE ZONE USING | constraints = '[+region=us-west1]'; | ALTER PARTITION chicago OF INDEX movr.public.vehicles@vehicles_auto_index_fk_city_ref_users CONFIGURE ZONE USING | constraints = '[+region=us-central1]'; | ALTER PARTITION new_york OF INDEX movr.public.vehicles@vehicles_auto_index_fk_city_ref_users CONFIGURE ZONE USING | constraints = '[+region=us-east1]'; | ALTER PARTITION seattle OF INDEX movr.public.vehicles@vehicles_auto_index_fk_city_ref_users CONFIGURE ZONE USING | constraints = '[+region=us-west1]' (1 row)In contrast to the last time you ran this statement, you can now see the commands for re-creating the replication zone for each partition of the

vehiclestable and its secondary index.The

SHOW PARTITIONSstatement is another way to confirm that partitions are in effect:> SHOW PARTITIONS FROM TABLE vehicles;database_name | table_name | partition_name | parent_partition | column_names | index_name | partition_value | zone_config | full_zone_config +---------------+------------+----------------+------------------+--------------+------------------------------------------------+-----------------+---------------------------------------+----------------------------------------+ movr | vehicles | new_york | NULL | city | vehicles@primary | ('new york') | constraints = '[+region=us-east1]' | range_min_bytes = 134217728, | | | | | | | | range_max_bytes = 536870912, | | | | | | | | gc.ttlseconds = 90000, | | | | | | | | num_replicas = 3, | | | | | | | | constraints = '[+region=us-east1]', | | | | | | | | lease_preferences = '[]' movr | vehicles | new_york | NULL | city | vehicles@vehicles_auto_index_fk_city_ref_users | ('new york') | constraints = '[+region=us-east1]' | range_min_bytes = 134217728, | | | | | | | | range_max_bytes = 536870912, | | | | | | | | gc.ttlseconds = 90000, | | | | | | | | num_replicas = 3, | | | | | | | | constraints = '[+region=us-east1]', | | | | | | | | lease_preferences = '[]' movr | vehicles | chicago | NULL | city | vehicles@primary | ('chicago') | constraints = '[+region=us-central1]' | range_min_bytes = 134217728, | | | | | | | | range_max_bytes = 536870912, | | | | | | | | gc.ttlseconds = 90000, | | | | | | | | num_replicas = 3, | | | | | | | | constraints = '[+region=us-central1]', | | | | | | | | lease_preferences = '[]' movr | vehicles | chicago | NULL | city | vehicles@vehicles_auto_index_fk_city_ref_users | ('chicago') | constraints = '[+region=us-central1]' | range_min_bytes = 134217728, | | | | | | | | range_max_bytes = 536870912, | | | | | | | | gc.ttlseconds = 90000, | | | | | | | | num_replicas = 3, | | | | | | | | constraints = '[+region=us-central1]', | | | | | | | | lease_preferences = '[]' movr | vehicles | seattle | NULL | city | vehicles@primary | ('seattle') | constraints = '[+region=us-west1]' | range_min_bytes = 134217728, | | | | | | | | range_max_bytes = 536870912, | | | | | | | | gc.ttlseconds = 90000, | | | | | | | | num_replicas = 3, | | | | | | | | constraints = '[+region=us-west1]', | | | | | | | | lease_preferences = '[]' movr | vehicles | seattle | NULL | city | vehicles@vehicles_auto_index_fk_city_ref_users | ('seattle') | constraints = '[+region=us-west1]' | range_min_bytes = 134217728, | | | | | | | | range_max_bytes = 536870912, | | | | | | | | gc.ttlseconds = 90000, | | | | | | | | num_replicas = 3, | | | | | | | | constraints = '[+region=us-west1]', | | | | | | | | lease_preferences = '[]' (6 rows)Tip:In testing, scripting, and other programmatic environments, we recommend querying thecrdb_internal.partitionsinternal table for partition information instead of using theSHOW PARTITIONSstatement. For more information, see Querying partitions programmatically.

Duplicate the reference table

In contrast to the other tables, the promo_codes table is not tied to geography, and its data is read frequently but rarely updated. This type of table is often referred to as a "reference table" or "lookup table". For this table, you'll keep read latency low by applying the Duplicate Indexes data topology. In practice, you will put the leaseholder for the table itself (also called the primary index) in one region, create two secondary indexes on the table, and tell CockroachDB to put the leaseholder for each secondary index in one of the other regions. CockroachDB's cost-based optimizer will then make sure that reads from promo_codes access the local leaseholder (either for the table itself or for one of the secondary indexes). Writes, however, will still leave the region to get consensus for the table and its secondary indexes, but writes are so rare that this will not impact overall performance.

Create two indexes on the

promo_codestable, and make them complete copies of the primary index:> CREATE INDEX promo_codes_idx_east ON promo_codes (code) STORING (description, creation_time, expiration_time, rules);> CREATE INDEX promo_codes_idx_west ON promo_codes (code) STORING (description, creation_time, expiration_time, rules);Use

ALTER TABLE/INDEX ... CONFIGURE ZONEstatements to create replication zones for the primary index and each secondary index, in each case setting a leaseholder preference telling CockroachDB to put the leaseholder for the index in a distinct region:> ALTER TABLE promo_codes CONFIGURE ZONE USING num_replicas = 3, constraints = '{"+region=us-central1": 1}', lease_preferences = '[[+region=us-central1]]';> ALTER INDEX promo_codes@promo_codes_idx_east CONFIGURE ZONE USING num_replicas = 3, constraints = '{"+region=us-east1": 1}', lease_preferences = '[[+region=us-east1]]';> ALTER INDEX promo_codes@promo_codes_idx_west CONFIGURE ZONE USING num_replicas = 3, constraints = '{"+region=us-west1": 1}', lease_preferences = '[[+region=us-west1]]';

Step 8. Re-check replica distribution

Still in the SQL shell on one of your client VMs, use the

SHOW RANGESstatement to check replica placement of the geo-specific tables after partitioning:> SELECT * FROM [SHOW RANGES FROM TABLE users] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM TABLE vehicles] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM INDEX vehicles_auto_index_fk_city_ref_users] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM TABLE rides] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM INDEX rides_auto_index_fk_city_ref_users] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM INDEX rides_auto_index_fk_vehicle_city_ref_vehicles] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM TABLE user_promo_codes] WHERE "start_key" NOT LIKE '%Prefix%'; SELECT * FROM [SHOW RANGES FROM TABLE vehicle_location_histories] WHERE "start_key" NOT LIKE '%Prefix%';start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 105 | 0.933453 | 3 | cloud=gce,region=us-east1,zone=us-east1-d | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 107 | 0.860034 | 6 | cloud=gce,region=us-central1,zone=us-central1-c | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 109 | 0.895921 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 1.645458616s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 125 | 2.11175 | 2 | cloud=gce,region=us-east1,zone=us-east1-c | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 129 | 1.9099 | 5 | cloud=gce,region=us-central1,zone=us-central1-b | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 56 | 2.04172 | 9 | cloud=gce,region=us-west1,zone=us-west1-c | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 1.260863914s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 127 | 0.119543 | 1 | cloud=gce,region=us-east1,zone=us-east1-b | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 130 | 0.106442 | 5 | cloud=gce,region=us-central1,zone=us-central1-b | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 46 | 0.110188 | 9 | cloud=gce,region=us-west1,zone=us-west1-c | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 3.392228893s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 76 | 2.498621 | 2 | cloud=gce,region=us-east1,zone=us-east1-c | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 83 | 2.243434 | 5 | cloud=gce,region=us-central1,zone=us-central1-b | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 148 | 2.39411 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 1.294584902s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 78 | 0.533722 | 1 | cloud=gce,region=us-east1,zone=us-east1-b | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 82 | 0.477912 | 4 | cloud=gce,region=us-central1,zone=us-central1-a | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 149 | 0.505345 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 3.346661477s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 80 | 0.61871 | 2 | cloud=gce,region=us-east1,zone=us-east1-c | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 84 | 0.547892 | 6 | cloud=gce,region=us-central1,zone=us-central1-c | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 150 | 0.579083 | 7 | cloud=gce,region=us-west1,zone=us-west1-a | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 3.341758512s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 133 | 0.365143 | 1 | cloud=gce,region=us-east1,zone=us-east1-b | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 135 | 0.313355 | 6 | cloud=gce,region=us-central1,zone=us-central1-c | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 137 | 0.343468 | 9 | cloud=gce,region=us-west1,zone=us-west1-c | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 1.105110359s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-------------+-----------------------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------------------+ /"new york" | /"new york"/PrefixEnd | 112 | 20.852122 | 3 | cloud=gce,region=us-east1,zone=us-east1-d | {1,2,3} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-east1,zone=us-east1-c","cloud=gce,region=us-east1,zone=us-east1-d"} /"chicago" | /"chicago"/PrefixEnd | 114 | 17.631255 | 4 | cloud=gce,region=us-central1,zone=us-central1-a | {4,5,6} | {"cloud=gce,region=us-central1,zone=us-central1-a","cloud=gce,region=us-central1,zone=us-central1-b","cloud=gce,region=us-central1,zone=us-central1-c"} /"seattle" | /"seattle"/PrefixEnd | 116 | 19.677135 | 8 | cloud=gce,region=us-west1,zone=us-west1-b | {7,8,9} | {"cloud=gce,region=us-west1,zone=us-west1-a","cloud=gce,region=us-west1,zone=us-west1-b","cloud=gce,region=us-west1,zone=us-west1-c"} (3 rows) Time: 1.612425537sYou'll see that the replicas for each partition are now located on nodes in the relevant region:

- New York partitions are on nodes 1 - 3

- Chicago partitions are on nodes 4 - 6

- Seattle partitions are on nodes 7 - 9

This means that requests from users in a city no longer leave the region, thus removing all cross-region latencies.

Now use the

SHOW RANGESstatement to check replica placement of thepromo_codesreference table and indexes:> SHOW RANGES FROM TABLE promo_codes; SHOW RANGES FROM INDEX promo_codes_idx_east; SHOW RANGES FROM INDEX promo_codes_idx_west;start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 87 | 0.835044 | 6 | cloud=gce,region=us-central1,zone=us-central1-c | {1,6,9} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-c"} (1 row) Time: 517.443988ms start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 89 | 0.83622 | 1 | cloud=gce,region=us-east1,zone=us-east1-b | {1,6,9} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-c"} (1 row) Time: 2.449771429s start_key | end_key | range_id | range_size_mb | lease_holder | lease_holder_locality | replicas | replica_localities +-----------+---------+----------+---------------+--------------+-------------------------------------------+----------+---------------------------------------------------------------------------------------------------------------------------------------------+ NULL | NULL | 90 | 0.836372 | 9 | cloud=gce,region=us-west1,zone=us-west1-c | {1,6,9} | {"cloud=gce,region=us-east1,zone=us-east1-b","cloud=gce,region=us-central1,zone=us-central1-c","cloud=gce,region=us-west1,zone=us-west1-c"} (1 row) Time: 2.621930607sYou'll see that the replicas for each index are spread across regions, with the leaseholders each in a distinct region:

- The leaseholder for the

promo_codesprimary index is on node 6 in US Central - The leaseholder for the

promo_codes_idx_eastsecondary index is on node 1 in US East - The leaseholder for the

promo_codes_idx_westsecondary index is on node 9 in US West

As you'll see in a just a bit, with one leaseholder in each region, CockroachDB's cost-based optimizer will make sure that reads always access the local leaseholder, keeping reads from this table very fast.

- The leaseholder for the

Step 9. Re-check latency

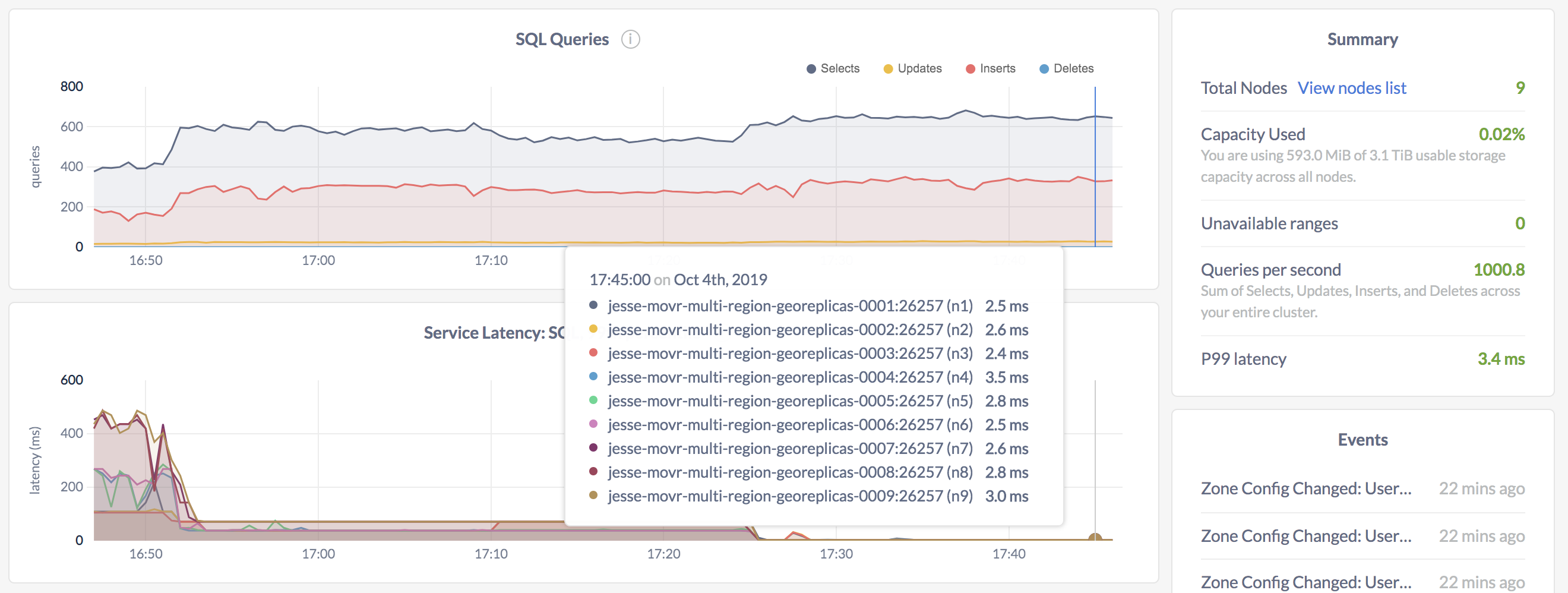

Now that you've verified that replicas are located properly, go back to the DB Console, click Metrics on the left, and hover over the Service Latency: SQL, 99th percentile timeseries graph:

For each node, you'll see that 99% of all queries are now under 4 milliseconds.

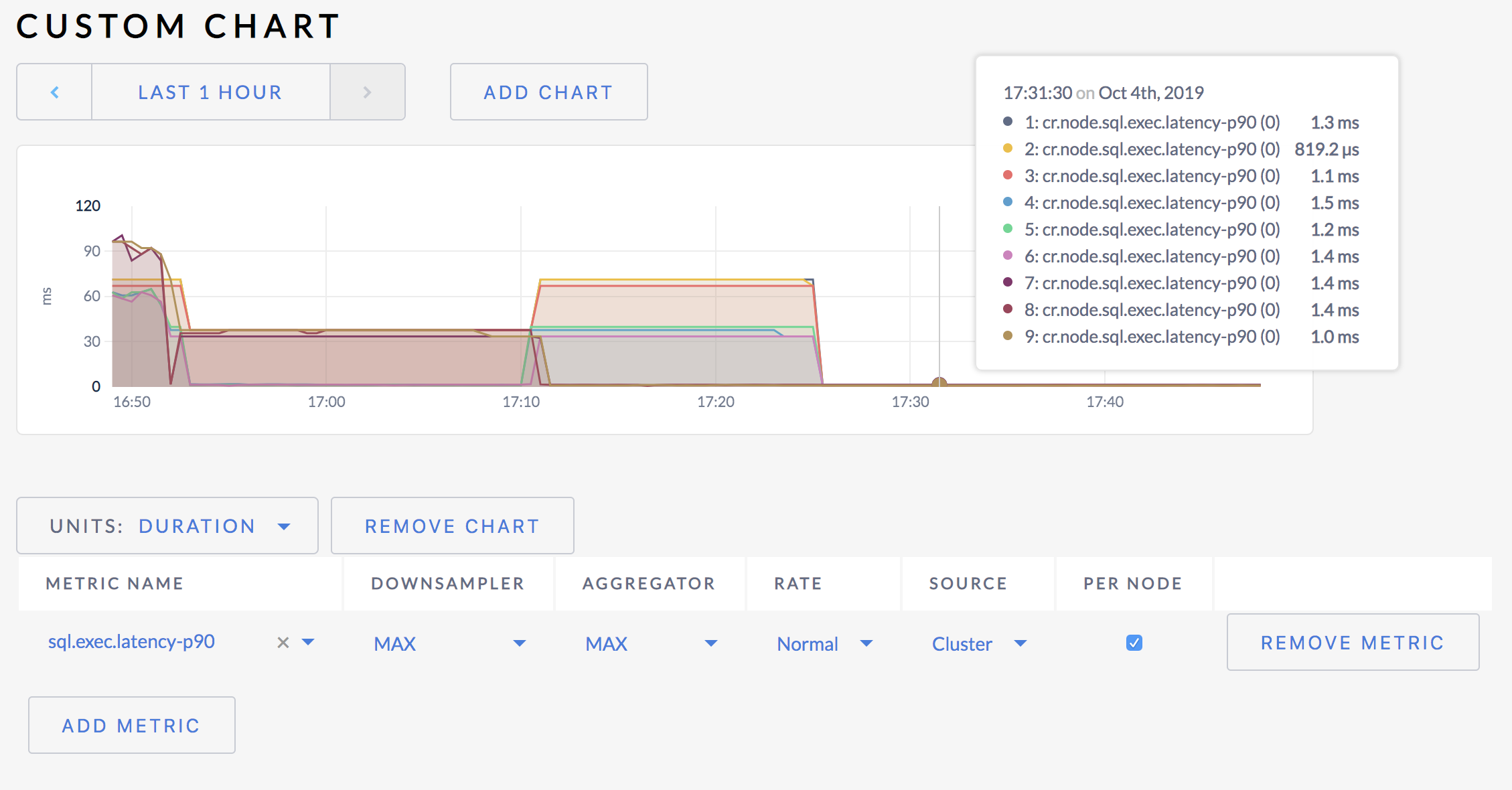

99th percentile latency can be influenced by occasional slow queries. For a more accurate sense of typical SQL latency, go to the following URL to view a custom graph for 90th percentile latency:

http://<external address of any node>:8080/#/debug/chart?charts=%5B%7B%22metrics%22%3A%5B%7B%22downsampler%22%3A3%2C%22aggregator%22%3A3%2C%22derivative%22%3A0%2C%22perNode%22%3Atrue%2C%22source%22%3A%22%22%2C%22metric%22%3A%22cr.node.sql.exec.latency-p90%22%7D%5D%2C%22axisUnits%22%3A2%7D%5D

As you can see, 90% of all SQL queries execute in less than 2 milliseconds. In some cases, latency is even sub-millisecond.

Most of the latency reduction is due to the geo-partitioned tables. However, the duplicate indexes approach for the

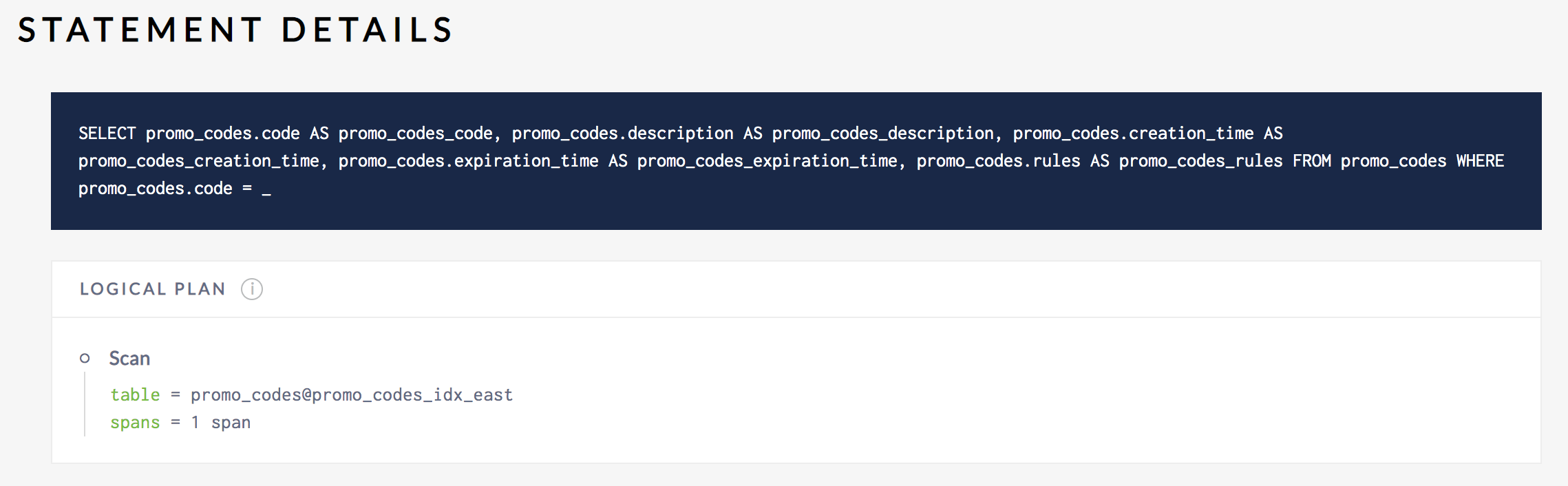

promo_codestable is also relevant. To validate that the cost-based optimizer is picking the appropriate leaseholder from reads frompromo_codesin each region, click Statements on the left, select APP > MOVR-EAST, and then click theSELECT FROM promo_codesstatement:

In the "Logical Plan" area, note the

table = promo_codes@promo_codes_idx_eastscan. This proves that the cost-based optimizer used the leaseholder for that index and, thus, didn't leave the region for the instance of MovR running in US East.To validate this behavior in the other regions, click Statements again on the left and follow the same steps for the other apps instances.

Step 10. Test resiliency

There are various resiliency levels in your cluster:

- For the geo-partitioned data, each partition is constrained to a specific region and balanced across the 3 AZs in the region, so one AZ can fail per region without interrupting access to the partitions in that region.

- For the duplicated reference data, replicas are balanced across regions, so one entire region can fail without interrupting access.

Given that most of the data in your cluster is geo-partitioned, let's focus on AZ-level failure.

SSH to the client VM in the US East region.

Use the

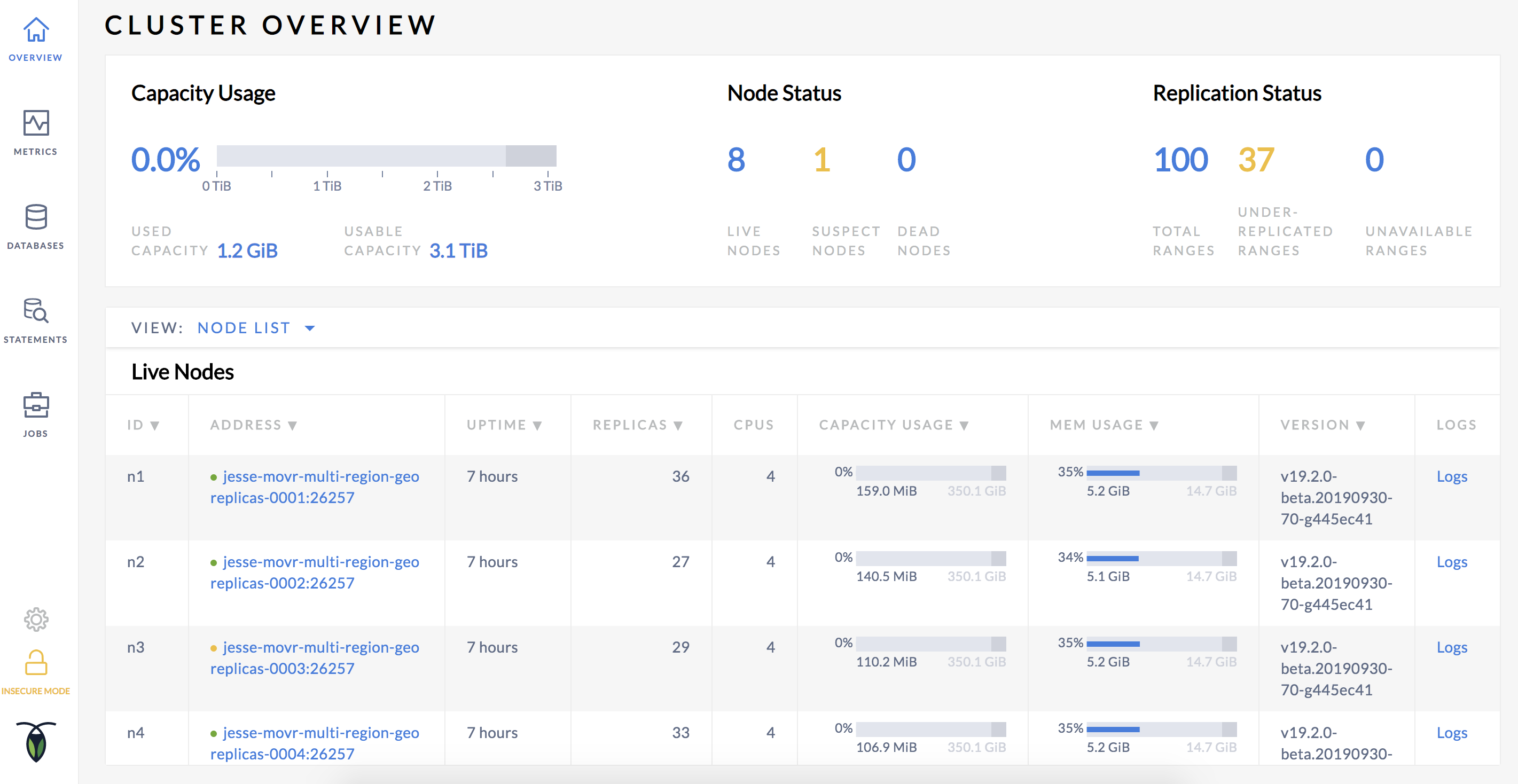

cockroach quitcommand to stop one node, effectively simulating one of the 3 AZ's failing:$ cockroach quit --insecure --host=<address of one node in US East>Back in the DB Console, click Overview and note that the cluster now considers that node "suspect":

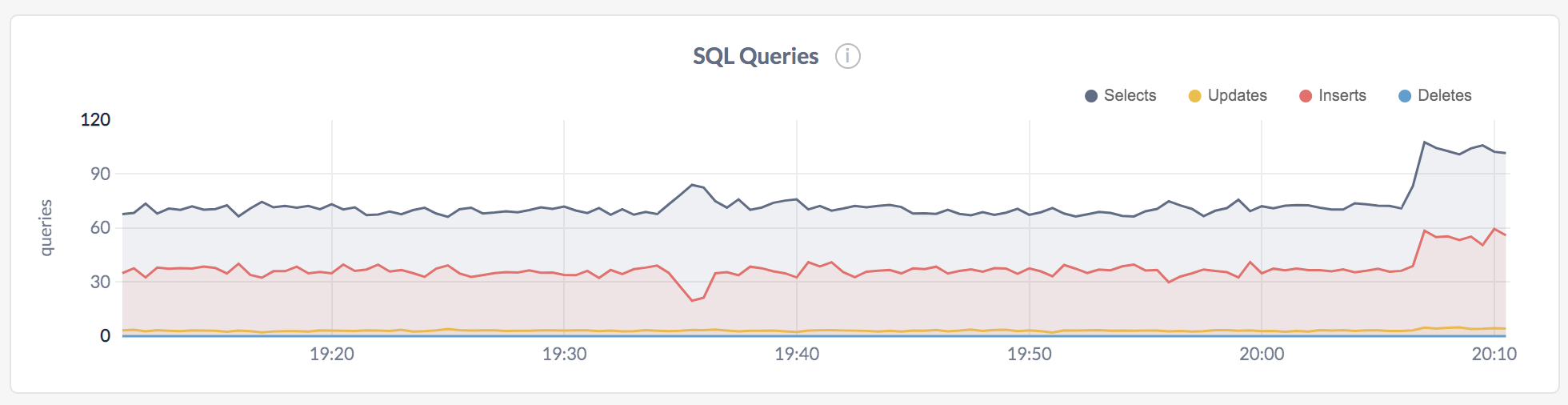

Despite the node being unavailable, the MovR instance in US East continues to make progress because the other 2 nodes, each in a distinct AZ, remain available, and all partitions in the region therefore remain available. To verify this, click Metrics, select node 1 or 2 from the Graph menu, and check that SQL traffic continues on the node:

See also

Related Topology Patterns

Related Case Studies