Update 3/4/19: This feature is out of beta! Want to learn more? Check out our webinar on migrating MySQL data to CockroachDB.

We want to make it easy for users of existing database systems to get started with CockroachDB. To that end, we’re proud to announce that CockroachDB now has beta-level support for importing MySQL database dump files: you can now import your data from the most popular open-source database to our modern, globally-distributed SQL database with a single command.

We had already done some work to enable this – since version 1.1, CockroachDB has supported importing data from tabular formats like CSV and TSV – but there were some obvious limitations to this existing functionality. Because CSV files don’t contain any schema information, users could only import one table at a time, and the target CockroachDB schema needed to be specified explicitly.

In addition to being verbose, this process also steepened the learning curve for getting started with CockroachDB, since the schema-specification step required users to be familiar with the intricacies of CockroachDB data types before loading their data.

Could there be a more direct way to migrate data to CockroachDB in bulk? Many popular database systems offer functionality to produce SQL “dump” files, which consist of long lists of SQL statements that define the schemas and populate the data for multiple tables. (CockroachDB itself can also generate files like this, via the SHOW CREATE command.)

While dump files are convenient for ad-hoc backup and restore operations within a single database system, the plethora of existing SQL implementations makes these dump files inherently proprietary. They often rely on vendor-specific SQL statements and extensions: CockroachDB or Postgres can’t directly execute the SQL commands in a dump file produced by MySQL, and vice versa. And even if all dump files used mutually-intelligible SQL syntax, transactionally running the INSERT statements in a dump file would be much slower than our existing bulk import operations.

Despite these limitations, the convenience and ubiquity of SQL dump files convinced us that it was worth the engineering effort to natively support importing them. We’ve seen a large volume of customer interest in migrating from MySQL — for example, the Chinese internet giant Baidu moved critical application data for a billion users from MySQL to CockroachDB, and now stores 40 TB of data in CockroachDB — so we prioritized building MySQL import for CockroachDB 2.1.

Using MySQL Import

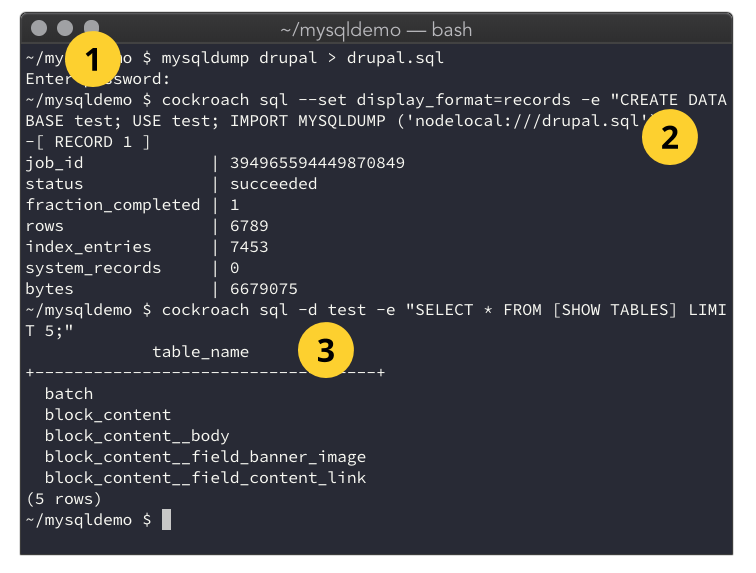

Here’s what a real-world migration from MySQL to CockroachDB looks like, in which the data from a Drupal demo site running on MySQL is migrated to CockroachDB with a single SQL statement:

- Export your data from MySQL to a dump file using the mysqldump utility

- Execute an IMPORT MYSQLDUMP statement in CockroachDB

- Verify that the imported tables from the MySQL dump are now present in CockroachDB

Beneath this simple process is a great deal of complex engineering:

- First, we need to parse the SQL statements in the file. Because CockroachDB’s SQL dialect adapts the syntax and semantics of Postgres, our parser doesn’t natively understand the MySQL dialect. Fortunately, the Vitess project provides an open-source MySQL parser that we were able to adapt to meet our needs.

- Once the input file is parsed, CockroachDB needs to translate the input table definitions and data types to its own data types. We researched the existing data and sequence types supported by MySQL and mapped them to the closest appropriate CockroachDB data types.

- After each table’s data types are determined, the actual table data needs to be written to CockroachDB. We adapted our existing CSV import system to add entire tables at once without the performance penalty of executing multiple logical INSERT statements. CockroachDB first samples the input SQL dump file to determine the approximate distribution of rows across the keyspace. It then generates the appropriate key-value pairs to store the data, and distributes them to the database nodes that will store them.

Gotchas with MySQL Import

Importing data from SQL dump files is currently in beta — while we’re generally confident in our implementation of this functionality, the complexity of MySQL’s grammar means that there are going to be some issues. Known issues that we intend to address prior to the general-availability release of this functionality include:

-

CockroachDB can’t import MySQL tables that define fulltext indexes (#29625).

- Workaround: Remove the fulltext index definition from the schema in the dump file.

-

CockroachDB can’t import certain invalid date values supported by MySQL (#29298).

- Workaround: Update MySQL records with date fields containing zeros to use NULL or a different sentinel value; change column definitions that specify a default zero date to be nullable.

-

SQL dump import performance is lower than CSV import performance, because reading a single large input file isn’t yet parallelized across cores or nodes.

These issues aside, we’re confident that MySQL dump import will greatly simplify the process of getting started with CockroachDB. Follow the instructions in our MySQL migration guide to try it yourself — and please file a bug report if you run into any issues importing your data.