Free distributed SQL database

CockroachDB Serverless is free. Spin up a cluster today!

Start instantlySo you’ve spun up a free CockroachDB cluster, and now you’ve got a next-generation distributed SQL database. That’s great!

Now, how do you actually get your data into it?

Thankfully, there are lots of ways to get your data into CockroachDB. So many, in fact, that we can’t actually cover all of them. In this post, we’ll take a look at some of the most common ways to get your data into CockroachDB, whether you’re working with a database dump from something like MySQL or PostgreSQL, or you’ve just got a CSV you exported from Excel.

Here’s a quick outline of the post so that you can skip to the sections most relevant to you:

Migrating from a Postgres database

Migrating from other databases

Migrating from a Postgres database

If we’re migrating from one database to another, we’ll be starting by getting what we need out of our old database, and getting our new CockroachDB cluster set up to import that schema and all of the data. Let’s walk through how to make that happen.

Step 0: Preparing your database dump files

If we haven’t already, we’ll need to do a dump from the old database. We’ll be using the CockroachDB schema conversion tool to make the migration process easier, so the best way to approach this is to actually generate two dump files:

- A schema-only dump

- A data-only dump

Documentation on how to generate these dump files from Postgres using pg_dump is here, but in short, we can run the following command to generate a data-only dump:

pg_dump [connection-option...] [option...] [dbname] -a

And the following command to generate a schema-only dump:

pg_dump [connection-option...] [option...] [dbname] -s

Dumps can be output in script or archive file formats. Scripts are plain-text SQL files, and which we can easily make into the .sql files we’ll need for subsequent steps. Archive files (.dump) can be converted using pg_restore like so:

pg_restore -f /path/file.sql /path/file.dump

At the end of this step, we should have two .sql files:

- one containing the old database’s schema

- one containing the actual data

Once those are ready, we’ll move on to the next step: converting and migrating the schema to CockroachDB.

Step 1: Importing and converting the schema

To do this, we’ll start from our clusters page and click on the specific cluster we’d like to migrate to.

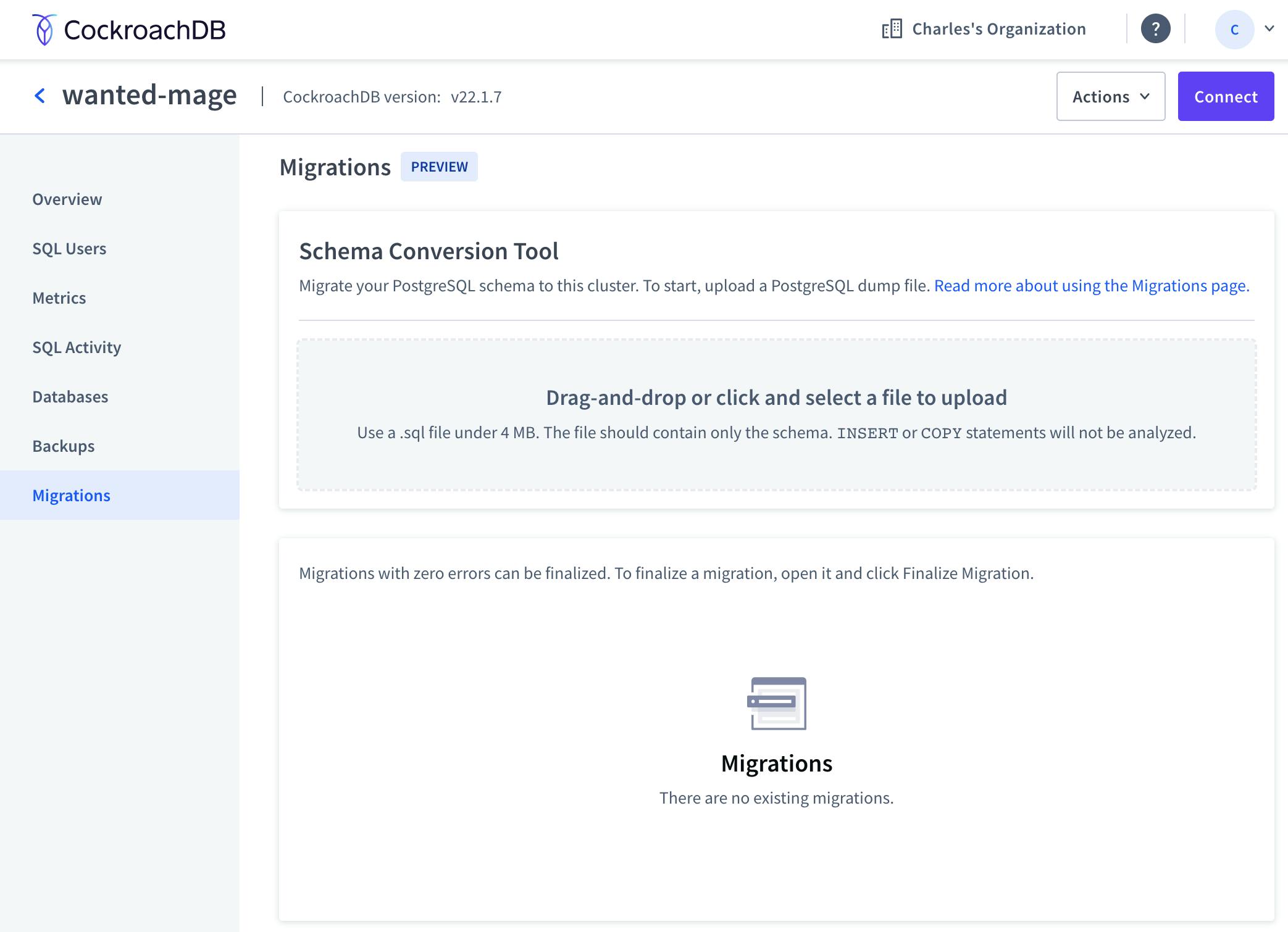

Once we’re on the page for the cluster, there will be a “Migrations” tab in the left-hand navigation menu. Clicking that will bring us to the migrations page, which looks like this:

On this page, we can simply drag and drop our .sql file into the gray upload box to begin the migration.

Note that file uploads are limited to 4 MB. If you’re working with a large database, your file may be larger than this. But don’t worry, you can still import your database!

PostgreSQL allows for the export of schema-only database dumps (see the Postgres documentation for details on how to do this). This should result in a much smaller .sql file that contains only the database schema; we can use the schema conversion tool to import that. The schema conversion tool doesn’t process INSERT or COPY statements anyway, so we’ll be getting our data into the database separately in step 2, but we need to move the schema over first.

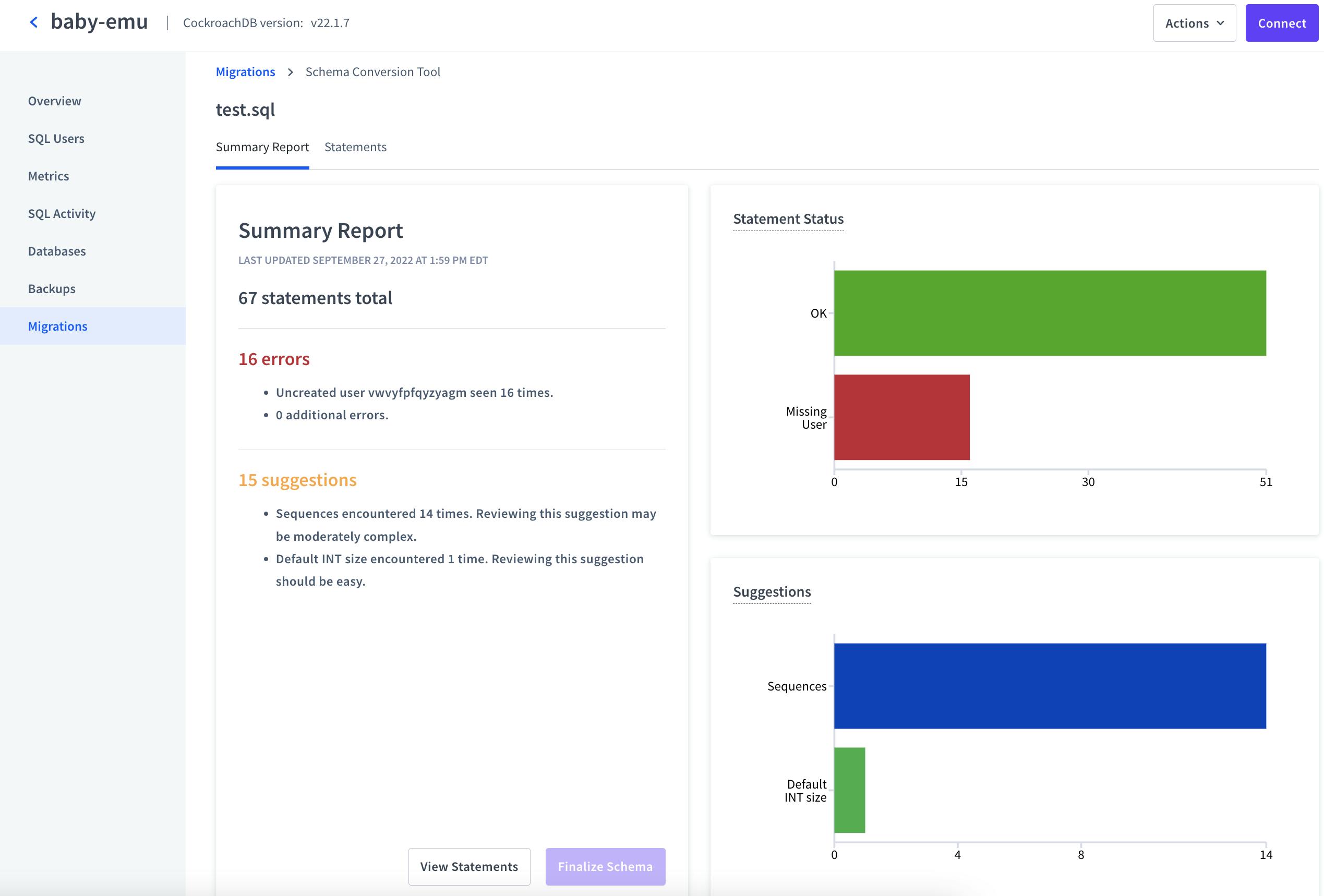

When we’ve uploaded our .sql file, the schema conversion tool will take a little time to process it, and then we’ll be taken to a page that looks like this:

As every database schema is different, each migration will produce different results, but this page will always display two things:

- The number of errors. Errors must be resolved before we can finalize the migration.

- The number of suggestions. These are issues we may want to address for a variety of reasons, but we won’t need to address them to finalize the migration.

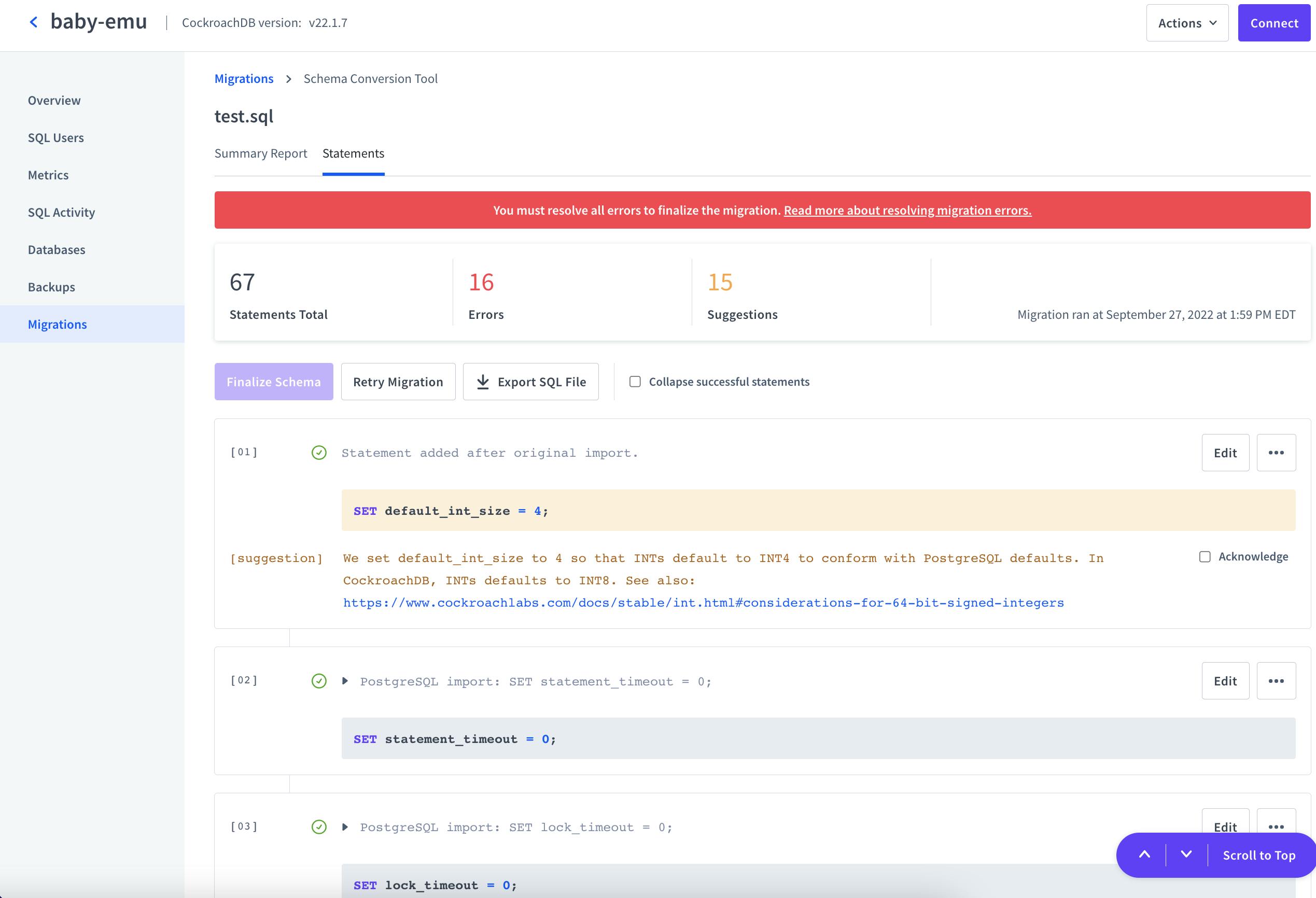

From here, we can click on the “Statements” tab at the top or the “View Statements” button at the bottom, and we’ll get a page like this:

This page walks through each statement we may want to address, and we can adjust them directly on this page by hitting the edit button.

At this point, we can’t provide step-by-step instructions because the statements on this page will be different for every database. However, the schema conversion tool should make it clear what needs to be done, and facilitate making those changes.

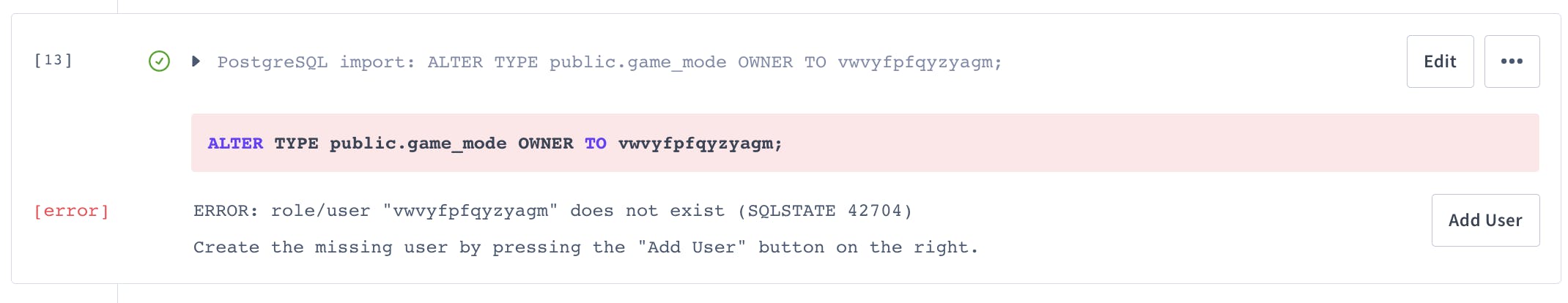

For example, in these screenshots we’ve been migrating a real database dumped from an application that had been using Heroku Postgres, and the only error we’ve encountered is that that database had a user that doesn’t yet exist in our CockroachDB cluster.

If we click “Add User”, we’ll be prompted to generate a password for the missing user, and once we’ve done this, all of the errors will be cleared.

Once the errors have all been dealt with, we can scroll back to the top of the page and hit “Retry Migration.” The migration will be processed again, and with all of the errors cleared, we’ll then be able to click the “Finalize Schema” button.

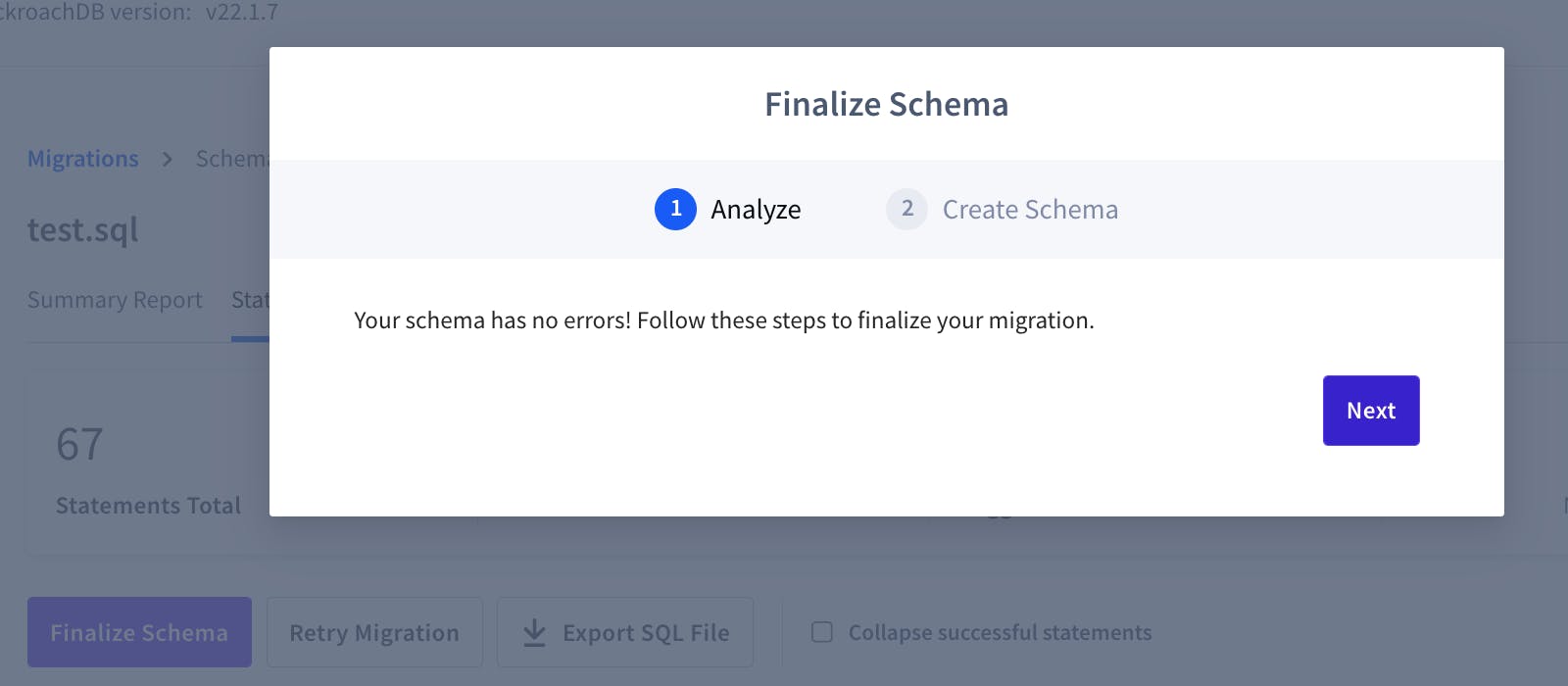

We’ll then be prompted with a window confirming the schema has no errors:

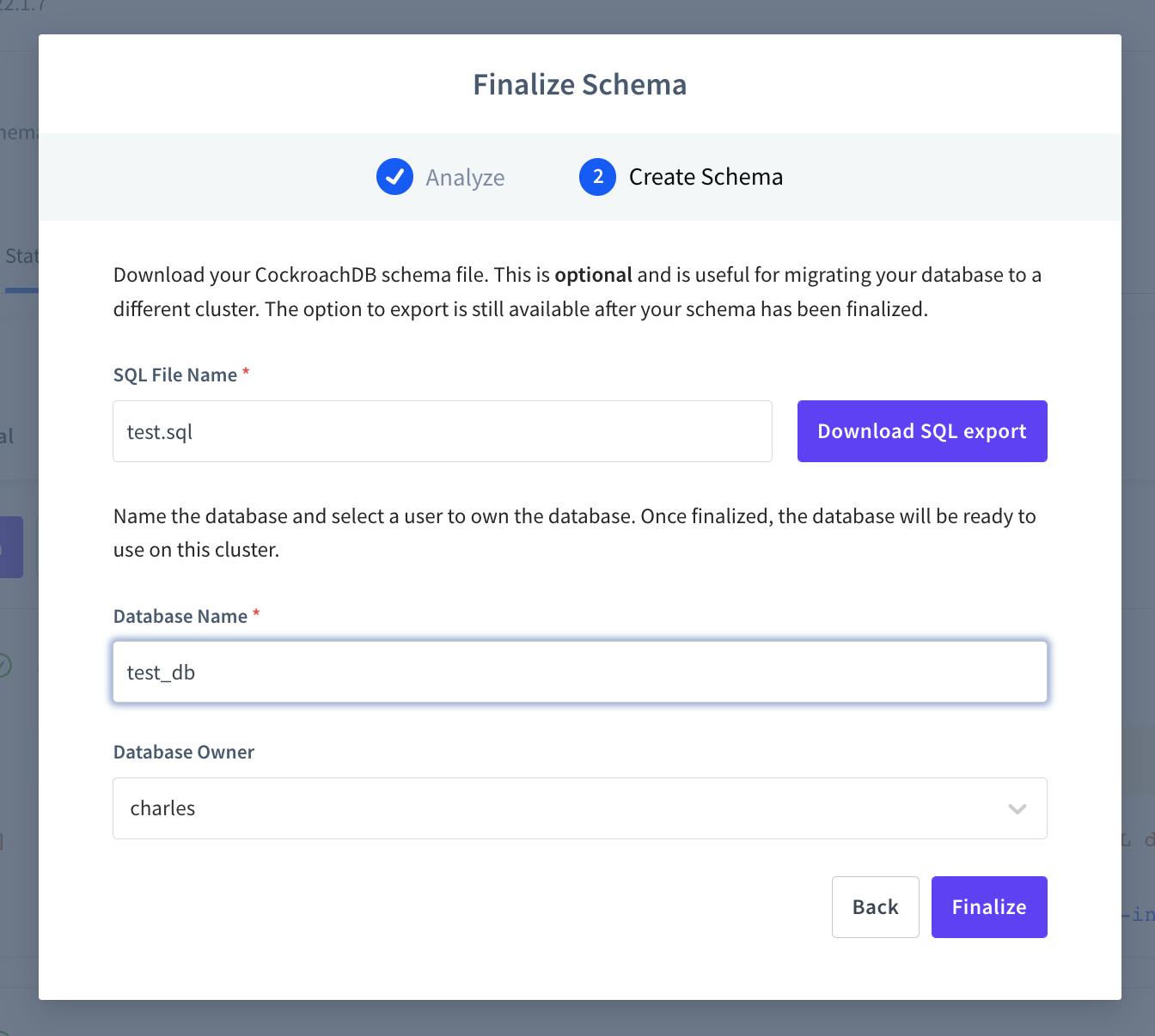

Click next, and we’ll get another window prompting us to give our new database a name (in this case, test_db) and specify a user who owns the database. Note that we can also download the edited .sql file (i.e., a version of our Postgres database dump that has the errors resolved and any other changes we made on the Statements page) if we want. This is optional, and this file will still be available after finalizing the schema:

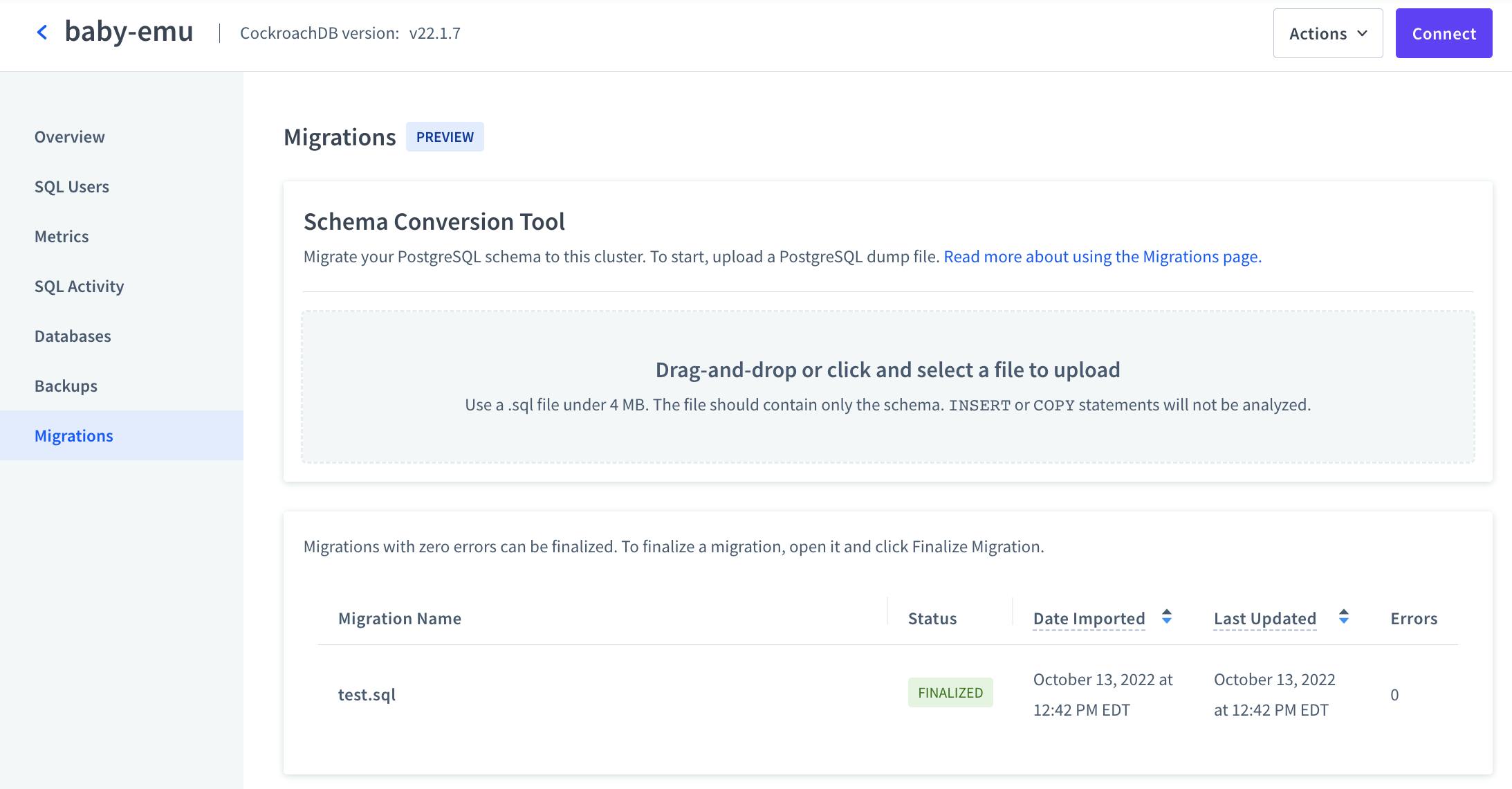

Clicking “Finalize” will return us to the statements page, but we can click the “Migrations” tab in the left-side navigation to return to the main migrations page and confirm that the migration has finalized:

Note the value FINALIZED in the Status column in the image above.

To further confirm the migration has been completed, we can click on “Databases” in the left-side navigation menu, and we’ll see that test_db is now there and has the correct number of tables listed.

However, if we actually query the database, we’ll see that our tables don’t have any actual data. This is because the schema migration tool doesn’t interpret INSERT or COPY statements. Now that our schema’s set up properly, let’s get that data in there, too!

Step 2: Importing the data

There are a number of ways to approach importing your data. We will outline some of the most common below.

Option 1: use the IMPORT command

When working with database dumps from Postgres or MySQL, we can use the IMPORT command in CockroachDB. (For other source database types, we can use AWS DMS or try other approaches such as importing the data from a .sql file).

Precisely how we import the .sql file containing our data will depend on where it is stored. Below, we’ll run through the basic commands for importing a .sql file from Postgres or MySQL from each of the three major cloud storage providers. Remember that in addition to replacing all of the account and access parameters in the commands below, we must also replace the .sql filenames with the name of our actual .sql file.

These commands can be executed from within the CockroachDB SQL shell or any other tool that can connect to your serverless cluster and execute SQL statements.

If stored in Amazon S3

For Postgres files:

IMPORT PGDUMP 's3://{BUCKET NAME}/{customers.sql}?AWS_ACCESS_KEY_ID={ACCESS KEY}&AWS_SECRET_ACCESS_KEY={SECRET ACCESS KEY}'

WITH ignore_unsupported_statements;

For MySQL files:

IMPORT MYSQLDUMP 's3://{BUCKET NAME}/{employees-full.sql}?AWS_ACCESS_KEY_ID={ACCESS KEY}&AWS_SECRET_ACCESS_KEY={SECRET ACCESS KEY}';

If stored in Google Cloud Storage

For Postgres files:

IMPORT PGDUMP 'gs://{BUCKET NAME}/{employees.sql}?AUTH=specified&CREDENTIALS={ENCODED KEY}'

WITH ignore_unsupported_statements;

For MySQL files:

IMPORT MYSQLDUMP 'gs://{BUCKET NAME}/{employees.sql}?AUTH=specified&CREDENTIALS={ENCODED KEY}';

If stored in Azure Storage

For Postgres files:

IMPORT PGDUMP 'azure://{CONTAINER NAME}/{employees.sql}?AZURE_ACCOUNT_NAME={ACCOUNT NAME}&AZURE_ACCOUNT_KEY={ENCODED KEY}'

WITH ignore_unsupported_statements;

For MySQL files:

IMPORT MYSQLDUMP 'azure://{CONTAINER NAME}/{employees.sql}?AZURE_ACCOUNT_NAME={ACCOUNT NAME}&AZURE_ACCOUNT_KEY={ENCODED KEY}';

Option 2: use AWS DMS

When migrating from an existing, publicly-hosted database containing application data such as MySQL, Oracle, or PostgreSQL, we can use AWS DMS. This option is more involved, but is a good choice for important migration projects because it can be configured to generate logs, which will assist in troubleshooting migration issues if they do occur.

For a full walkthrough of how to migrate from a database to CockroachDB using AWS DMS, please refer to our documentation. The AWS documentation for AWS DMS may also be helpful.

Option 3: import local files using the SQL shell

We can also import locally-stored data such as Postgres and MySQL dumps, or other .sql files containing data in COPY or INSERT statements directly to our CockroachDB cluster using the shell. (Note that this is recommended only for small databases of about 15 MB or less. For larger databases, use the IMPORT command or use AWS DMS).

This approach requires installing the CockroachDB SQL client, if you haven’t already done so. Hit the “Connect” button from the page of any cluster to quickly access the command for downloading and installing the client if you haven’t already.

Below, is an example of what this command looks like, but you should navigate to your CockroachDB cloud account on the web and hit the “Connect” button from a cluster page to get the correct command, as the latest version of the client may have changed by the time you’re reading this post.

curl https://binaries.cockroachdb.com/cockroach-v22.1.8.darwin-10.9-amd64.tgz | tar -xz; sudo cp -i cockroach-v22.1.8.darwin-10.9-amd64/cockroach /usr/local/bin/

Once that’s installed, importing from a local file is pretty straightforward. While there are various ways to approach this task, the simplest is probably to use the SQL client we just installed in the command line to pipe our .sql file into the database like so:

cockroach sql --url "cluster connection string" < filepath/file.sql

In the command above, we’ll need to replace "cluster connection string" with our unique connection string. This command will execute the statements .sql file inside the cluster and database specified by the connection string.

Note that when you copy a connection string from the CockroachDB cloud connection window, it will connect to the database defaultdb in that cluster. Generally, our schema will be set up on a different database – such as the test_db we created earlier in this article – so we must remember to replace defaultdb in the connection string with the name of the specific database we’re importing our data to. If completing this step results in a “relation ___ does not exist” SQLSTATE: 42P01 error, this is the most likely culprit.

Option 4: third-party tools

It’s worth noting that there are a variety of additional ways to get data into CockroachDB using third-party tools. For example, if we’ve got a database dump from Postgres we’d like to import, and we have psql installed, we can run a version of the following command from the command line:

psql 'your-connection-string-here' -f /path/file.sql

For this to work, we’ll need to replace your-connection-string-here with our connection string (inside single quotes) and /path/file.sql with whatever the correct file path is for the .sql file.

However, we generally recommend using the CockroachDB MOLT schema conversion tool rather than this sort of approach, as it will highlight errors and issues with the imported schema and facilitate making quick fixes. Using an approach like the psql one detailed above will require more manual adjustments.

Step 3: Test and update your application

Although it probably goes without saying, we’ll say it anyway: after any migration or data import, you should check to ensure that all of your data looks as expected, and applications linked to the new database are functioning as expected.

Because distributed SQL databases like CockroachDB work differently from traditional SQL databases, it’s also important to test performance. Reviewing CockroachDB’s SQL performance best practices will help ensure that you’re getting the most out of your new database.

Migrating from other databases

It’s possible to migrate from almost any kind of database to CockroachDB. However, the steps will vary depending on the specific database type and schema you’re coming from. A complete guide to migrating from every possible database is outside the scope of this post, but in general, the process will look similar to migrating from Postgres, although it may require a bit more manual work.

Step 0: Export a dump from your database and create a .sql file

At a minimum, you’ll need to export all of your data. It may or may not be helpful to separately export your schema as well.

Step 1: Convert and set up your schema

The CockroachDB schema migration tool currently only works with Postgres databases, so migrating schema from other relational databases to CockroachDB will require manually creating all of the relevant schema objects in CockroachDB.

Step 2: Importing the data

Refer to step 2 in the previous section for instructions on how to import your actual data to CockroachDB once your schema is all set up – this step of the process is the same whether you’re coming from Postgres or another database such as MySQL, Oracle, MariaDB, Microsoft SQL Server, MongoDB, etc. The approaches outlined there, including using IMPORT, using AWS DMS, using local .sql files, etc. will often be viable.

Importing data from CSV or Avro files

CockroachDB also supports importing data from a variety of other filetypes, including CSV and Avro files. The process for this is similar to the approaches outlined above, and generally consists of three steps:

Step 2a: export and prepare the data

Precisely how we execute this step will depend on where the data is coming from, but we do need to make sure that it conforms with some specific requirements, which can be found in the links below:

Step 2b: host the files somewhere the cluster can access them

There are a variety of options here, including cloud storage, creating a local file server, or uploading a userfile.

Step 2c: import the data

For both CSVs and Avro files, importing the data consists of two parts.

First, create a table in your database with a schema that matches the data in file you’re importing.

Second, run an import command that pulls the data from the file. Below are examples of what this command would look like for CSV and Avro files stored in an AWS bucket:

CSV:

IMPORT INTO employees (emp_no, birth_date, first_name, last_name, gender, hire_date)

CSV DATA ( 'https://s3-us-west-1.amazonaws.com/cockroachdb-movr/datasets/employees-db/csv/employees.csv.gz'

);

Avro:

IMPORT INTO employees (emp_no, birth_date, first_name, last_name, gender, hire_date)

AVRO DATA ( 's3://[bucket-placeholder]/userdata1.avro?AWS_ACCESS_KEY_ID=[placeholder]&AWS_SECRET_ACCESS_KEY=[placeholder]'

);

For more details and additional options, see our documentation on importing CSV files and importing Avro files.

Step 3: Test and update your application

Although it probably goes without saying, we’ll say it anyway: after any migration or data import, you should check to ensure that all of your data looks as expected, and applications linked to the new database are functioning as expected.

Because distributed SQL databases like CockroachDB work differently from traditional SQL databases, it’s also important to test performance. Reviewing CockroachDB’s SQL performance best practices will help ensure that you’re getting the most out of your new database.

Learn about how to get the best performance out of CockroachDB with free courses on Cockroach University.