At Cockroach Labs, we're focused on making data easy for our customers. CockroachDB is designed as a vendor-agnostic, cloud-native database for transactional workloads. We offer a number of benefits over traditional relational databases including serializable isolation, online schema changes, and high availability fault-tolerance. Today, we want to demonstrate another CockroachDB differentiator: multi-region support for global scale.

In this blog post, we introduce Follower reads, a key feature for supporting multi-region reads with low latency when your use case can accept stale data.

To introduce Follower reads, we built a demo global application called Wikifeedia, with the entirety of the source code available for you to play along with. To allow you to see the application in action, we’re hosting the app using CockroachDB Dedicated for the next six months.

Wikifeedia Overview

Wikifeedia is an application built on top of the public APIs from Wikipedia. It shows users a globally sorted index of content based on the most viewed content in each language for the previous day. Since its target audience is global, it needs to be accessible from anywhere in the world, with low latency. But, like many news aggregators, the content isn’t changing from second to second. As such, it can tolerate slightly stale data.

The application is hosted on Google Kubernetes Engine, while the underlying database is hosted using our own Cockroach Cloud managed service offering. As a side note, this application has become a personal favorite of ours as we find ourselves eagerly checking out Wikifeedia to determine what’s trending on Wikipedia on any given day.

Optimizing Wikifeedia for Low Latency

Low database latency is critical to providing a low latency application experience around the world. But it’s not the only factor at play. Here are a couple of other ways we initially optimized Wikifeedia to provide low latency.

CockroachDB WebUI for Wikifeedia

HTTP Caching

Every time a browser loads Wikifeedia, it downloads static assets (e.g., HTML, CSS, JavaScript, and images) to display content. Static assets use a significant amount of data and take a lot of time to load. Slow page times can frustrate users, and cause them to abandon their attempt to load a page if latency is too high. This can be problematic as we want our users to see a crisp and fast Wikifeedia.

In addition to providing fast response times to Wikifeedia users, we want to minimize the number of requests the server receives. We can address both of these concerns by employing browser caching to store files in the user’s browser. Cached files load faster, and they obviate the need for more server requests when the data is already present in the browser. Of course, this doesn’t apply to a user’s first visit, but it can dramatically improve response times for repeat users. Any modern web application should partner HTTP caching with good database management to reduce latency and load time.

Furthermore, by setting the appropriate caching headers, service writers can utilize CDNs to cache and serve static assets with low latency. Utilizing CDNs for static assets is a good first step when looking at reducing latency for global web applications. Check out this MDN Guide on how to set cache control headers to effectively utilize CDNs and browser caches.
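As a rough illustration of the caching headers discussed above, the following Python sketch picks a `Cache-Control` value per request path. The path prefixes, the one-year and sixty-second lifetimes, and the function name `cache_control_for` are assumptions made for this example, not Wikifeedia's actual configuration.

```python
# Hypothetical caching policy, for illustration only.
ONE_YEAR_SECONDS = 365 * 24 * 60 * 60


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for a request path."""
    if path.startswith("/static/"):
        # Assumes asset file names change whenever their content changes,
        # so browsers and CDNs can keep them for a long time.
        return f"public, max-age={ONE_YEAR_SECONDS}, immutable"
    if path.startswith("/api/"):
        # Slightly stale article lists are acceptable, so allow brief
        # caching in browsers and shared caches such as CDNs.
        return "public, max-age=60"
    # Entry point (e.g. index.html): revalidate on every load so that
    # references to new asset names are picked up.
    return "no-cache"


if __name__ == "__main__":
    for p in ("/static/app.js", "/api/top", "/index.html"):
        print(p, "->", cache_control_for(p))
```

Long lifetimes are only safe when an asset's URL changes with its content; otherwise a shorter `max-age` with revalidation is the more cautious choice.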

Global Load Balancing

It is also critical to consider routing when deploying global applications. A simple deployment using round-robin DNS over global application server IP addresses would give all users intermittently poor performance, instead of the reliably good performance that some users get from a single-region deployment. To solve this problem, we recommend using a global load balancer. With Google’s Cloud Load Balancer, for example, you can reserve a single IP address that uses anycast to route to a nearby point-of-presence, and then route to the lowest latency backend. An alternative is to use GeoDNS, such as AWS Route 53, to resolve a nearby application server based on the requester’s location.
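To make the routing trade-off concrete, here is a small Python sketch comparing round-robin selection with lowest-latency selection. The region names match the deployments in this post, but the round-trip times are invented placeholder values and the helper names are hypothetical. A real global load balancer makes this decision in the network, not in application code.

```python
from statistics import mean

# Placeholder round-trip times (ms) from one client to each backend.
# These numbers are invented for illustration, not measurements.
rtt_ms = {"us-east4": 230.0, "europe-west1": 170.0, "asia-southeast1": 5.0}


def round_robin_expected_rtt(rtts: dict) -> float:
    """Round-robin spreads requests evenly, so the expected RTT is the mean."""
    return mean(rtts.values())


def lowest_latency_backend(rtts: dict) -> str:
    """Pick the backend with the smallest round-trip time."""
    return min(rtts, key=rtts.get)


if __name__ == "__main__":
    best = lowest_latency_backend(rtt_ms)
    print("round-robin expected RTT (ms):", round_robin_expected_rtt(rtt_ms))
    print("lowest-latency backend:", best, "at", rtt_ms[best], "ms")
```

Under round-robin, every client pays the average cost of reaching all regions, which is why a nearby backend alone does not help unless routing prefers it.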

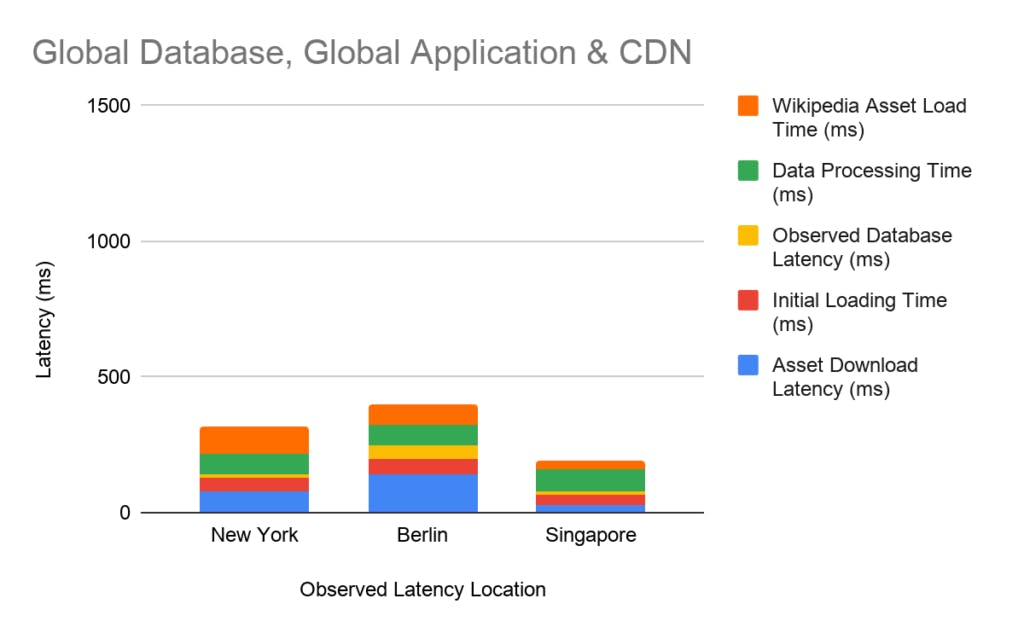

Reducing Latency: Performance Analysis

Over the next few sections we’ll explore example latency profiles observed loading the application from different locations (i.e., New York, Berlin, and Singapore) with different deployment methodologies (i.e., US east-only deployment, global deployment without Follower reads, and global deployment with Follower reads). All of the measurements were performed using the Uptrends Free Website Speed Test.

Deployment 1: US East Deployment

For our first deployment of Wikifeedia, we deployed both the database and application in a single region. In this initial deployment strategy, Wikifeedia’s database is deployed in AWS us-east-1 (Northern Virginia), and its application servers are in GCP us-east4 (i.e., Northern Virginia). Note that the application servers are in GCP and the database servers are in AWS. This unconventional, cross-cloud deployment topology is not recommended, but was utilized to help dog-food AWS cluster creation while only having access to a GCP Kubernetes cluster. To reduce complexity, we recommend staying strictly within either cloud as they both offer global load balancing and nodes in each of the regions.

This deployment provides excellent latency for users in New York, but increasingly unpalatable latencies for users further away from US East, like those in Berlin and Singapore.

The first thing to notice is that the latency is dominated by the time taken to download the static assets for the page. These static assets consist of an index.html page, which is less than 2 kB, and CSS and JavaScript assets, which are collectively less than 400 kB. The high latency between Singapore and the application server in Northern Virginia (i.e., GCP us-east4) has severe costs both while fetching the static assets and while fetching the list of top articles, but the cost of fetching the assets dominates.

Deployment 2: Global Application Deployment, No Follower reads

For the next iteration, we deployed Wikifeedia globally to reduce the latency from clients to application servers. For this strategy, we deployed two additional data centers: one in Europe, with application servers in GCP europe-west1 (i.e., Belgium) and the database in AWS eu-west-1 (i.e., Ireland), and one in Asia, with application servers in GCP asia-southeast1 (i.e., Singapore) and the database in AWS ap-southeast-1 (i.e., Singapore). By deploying the application servers nearer to the client, we dramatically reduce the loading time for the static assets. We can see that this global deployment strategy dramatically improves the user experience.

In this configuration, we demonstrate using application servers near the client with a single database in the US. In practice, there are database nodes near each application server, but the leaseholder is set to remain in the US so the Asia and Europe nodes always need to communicate over these high-latency links. Notice how the database call now dominates the latency.

What can we do about that? Make the database global, and use Follower reads!

Deployment 3: Global Application with Follower reads

In the final deployment model, we adopt a new feature in CockroachDB called Follower reads in our business logic. This allows each of the replicas to serve low-latency reads. Here we can see that both the distance from the client to the application server and the distance from the application server to the database node contribute to our latency variation! For users in Singapore, this represents an 8x latency improvement over our first deployment.

Let’s look a bit deeper at the Singapore data via the Uptrends report above. Without Follower reads, users in Singapore observe a latency of ~430 ms (0.08 to 0.55) retrieving the top articles from the application server.

However, if we deploy a global database with Follower reads, Singaporean users can see end-to-end query latency closer to 20 ms.

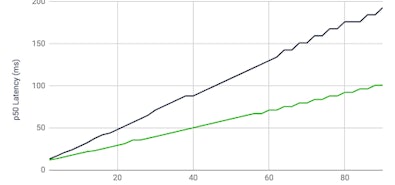

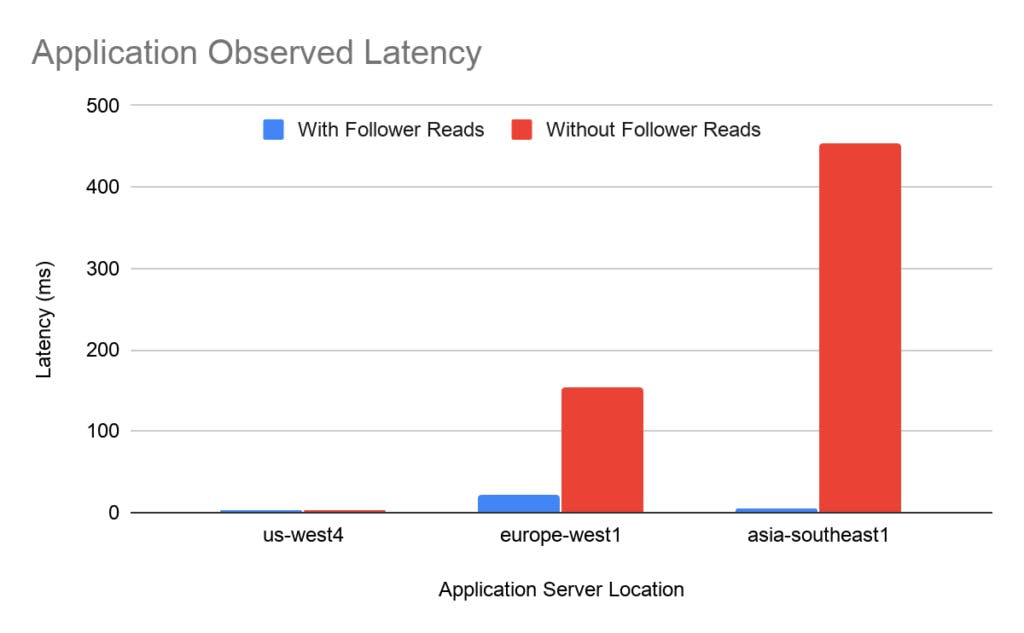

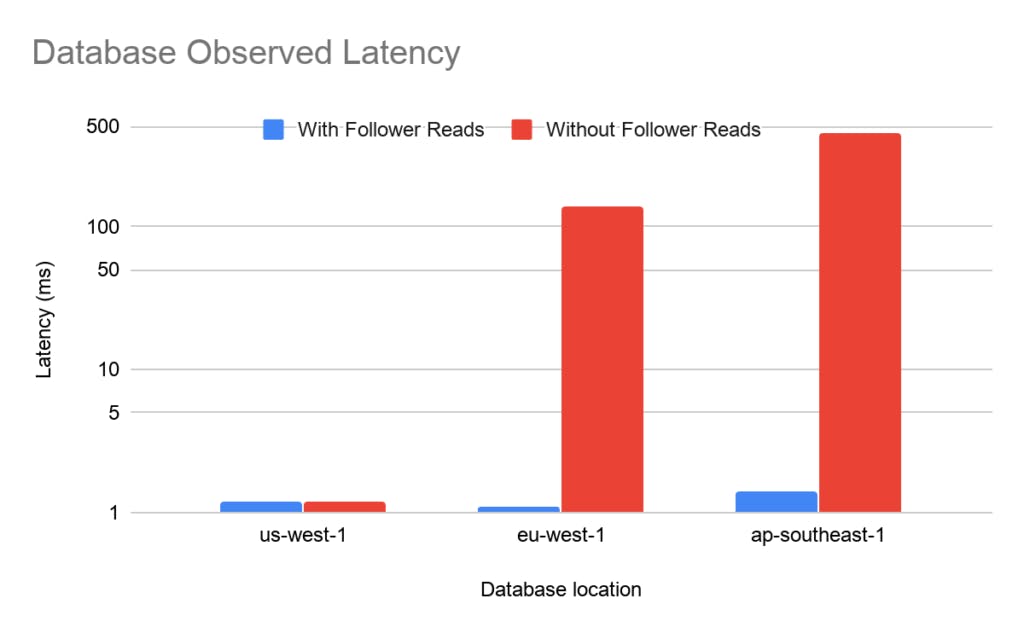

Database Latency Compared

As we mentioned above, the database latency is only part of the total picture. But it is an important part! We’ve pulled out the database latency into a standalone chart comparing latency between a database only deployed in US-East and a global database. The below charts show the time observed by the application servers and the database nodes to fetch the data.

One point of interest is the latency observed by the European application server. This is due to the relatively high latency between the application server in Belgium and the database server in Ireland. All of the latencies between the application servers and database nodes are several milliseconds, due primarily to the cross-cloud deployment. The difference between 500 ms and 20 ms makes a real difference to users; the difference between 20 ms and 2 ms matters less. A global application deployment needs a global database deployment.

You can try out Wikifeedia yourself by visiting https://wikifeedia.crdb.io.

Follower Reads Overview

You might be wondering, how does CockroachDB enable low-latency reads anywhere in the world for this globally sorted content? How did we hit ~1 ms reads in a global cluster?

The answer is using our enterprise Follower reads feature which we introduced in version 19.1.

Follower reads are a mechanism to let any replica of a range serve a read request, but are only available for read queries that are sufficiently in the past, i.e., using AS OF SYSTEM TIME.

In widely distributed deployments, using Follower reads can reduce the latency of read operations (which can also increase throughput) by letting the replica closest to the gateway serve the request, instead of forcing the gateway to communicate with the leaseholder, which could be geographically distant. While this approach may not be suitable for all use cases, it’s ideal for serving data from indexes which are populated by background tasks.

In our topology pattern guidance, we explain that:

“Using this pattern, you configure your application to use the Follower reads feature by adding an AS OF SYSTEM TIME clause when reading from the table. This tells CockroachDB to read slightly historical data (at least 48 seconds in the past) from the closest replica so as to avoid being routed to the leaseholder, which may be in an entirely different region. Writes, however, will still leave the region to get consensus for the table.”
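The routing rule described in this quote can be sketched as a toy model in Python. This is a deliberate simplification and not CockroachDB's implementation: the 48-second threshold is taken from the guidance quoted above, while the function name and the replica labels are hypothetical.

```python
from typing import Optional

# Minimum staleness, in seconds, taken from the quoted guidance.
FOLLOWER_READ_MIN_STALENESS_S = 48.0


def choose_replica(read_age_s: Optional[float], nearest: str, leaseholder: str) -> str:
    """Toy routing rule: sufficiently old reads may use the nearest replica.

    read_age_s is how far in the past the read timestamp lies. None models
    a normal read at the current time, with no AS OF SYSTEM TIME clause.
    """
    if read_age_s is not None and read_age_s >= FOLLOWER_READ_MIN_STALENESS_S:
        return nearest
    # Reads that are too recent still go to the leaseholder, which may be
    # in a different region.
    return leaseholder


if __name__ == "__main__":
    print(choose_replica(None, "singapore", "us-east"))   # current read
    print(choose_replica(60.0, "singapore", "us-east"))   # stale enough
```

The point of the model is only that staleness is what unlocks the nearby replica; a read without a historical timestamp is routed as before.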

Let’s dive deeper into the data to see how Follower reads work in practice with Wikifeedia.

Wikifeedia & Follower Reads

Let’s evaluate the latency of Wikifeedia with the following query:

    SELECT
        project, article, title, thumbnail_url, image_url, abstract, article_url, daily_views
    FROM
        articles
    WHERE
        project = $1
    ORDER BY
        daily_views DESC
    LIMIT
        $2;

This query selects the top $2 articles from project $1. The home page would have values ("en", 10). Once again, to demonstrate latency anywhere in the world, we’ll use the Uptrends Website Speed Test. Uptrends allows us to simulate users accessing Wikifeedia from many geographies, including New York, Berlin, and Singapore.

First, let’s start with a baseline of latency on Wikifeedia without Follower reads. Here are the results of Wikifeedia when we do not serve Follower reads as seen in our native CockroachDB WebUI:

With this enhancement to our SQL, we can enable Follower reads.

    --- before
    +++ after
    @@ -3,3 +3,3 @@
     FROM
    -    articles
    +    articles AS OF SYSTEM TIME experimental_follower_read_timestamp()
     WHERE

We’ve added AS OF SYSTEM TIME and experimental_follower_read_timestamp() to specify to the system that it is appropriate to serve a historical read in exchange for improving latency.
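One way an application might toggle this clause is sketched below in Python. The helper name `top_articles_query` and its `follower_reads` flag are hypothetical. The SQL text mirrors the query and diff shown above, and the `$1` and `$2` placeholders are left for the database driver to bind.

```python
# Clause taken from the diff above.
FOLLOWER_READ_CLAUSE = "AS OF SYSTEM TIME experimental_follower_read_timestamp()"


def top_articles_query(follower_reads: bool) -> str:
    """Build the top-articles query, optionally as a historical read."""
    # The clause follows the table name in the FROM list, as in the diff.
    as_of = f" {FOLLOWER_READ_CLAUSE}" if follower_reads else ""
    return (
        "SELECT project, article, title, thumbnail_url, image_url, "
        "abstract, article_url, daily_views "
        f"FROM articles{as_of} "
        "WHERE project = $1 "
        "ORDER BY daily_views DESC "
        "LIMIT $2"
    )


if __name__ == "__main__":
    print(top_articles_query(follower_reads=False))
    print(top_articles_query(follower_reads=True))
```

Keeping the clause behind a flag makes it easy to compare latencies with and without Follower reads, or to fall back to current reads for a code path that cannot tolerate stale data.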

As a result of enabling this feature, we can see the latencies in nodes 2 and 3 drop from 137 ms and 450 ms to 1.8 ms and 2.0 ms respectively!

Conclusion

All global applications should use both a global application deployment (including a global CDN) and a globally deployed database. Further, CockroachDB’s Follower reads pattern is a good choice for global deployments with tables with the following requirements:

- Read latency must be low, but write latency can be higher.

- Reads can be historical.

- Rows in the table and all latency-sensitive queries cannot be tied to specific geographies (e.g., a reference table).

- Table data must remain available during a region failure.

Also, remember that Follower reads are just one tool in our multi-region toolkit. They can actually be used in conjunction with many of CockroachDB’s other multi-region features. We’ve previously written about What Global Data Actually Looks Like and How to Leverage Geo-partitioning. We’ve even provided a step-by-step tutorial on How to Implement Geo-partitioning. Consult our topology guide to learn more about how you can tailor CockroachDB to better support your multi-region use case or contact sales for personalized support.