Automation tools like HashiCorp Terraform solve an important problem: they remove the burden of manual processes such as creating cloud infrastructure or deploying applications. Terraform does this by letting you define the desired state of your infrastructure or application in code, an approach known as Infrastructure as Code (IaC). When you run Terraform, it talks to the relevant APIs of the service provider and makes the changes needed to deploy or amend the infrastructure so that it matches that desired state.

In short, Terraform lets you treat your servers, databases, networks, and other infrastructure like software. Defining these components in code helps you configure and deploy them quickly and consistently.

IaC helps you automate the infrastructure deployment process in a repeatable, consistent manner, which has many benefits including:

- Speed and simplicity

- Configuration consistency

- Minimization of risk

- Increased efficiency in software development

- Cost savings

Terraform is a mature product and is already widely adopted. As a result, many teams already use it to deploy key elements of their infrastructure stack. In this blog post we take the newly released CockroachDB Cloud Terraform provider, along with the AWS EKS module, and deploy both in a basic working example.

How to deploy CockroachDB Dedicated with Terraform

If you want to follow along, you will need to install a few things first. All of the code for this working example is held in a Git repository, so you will need the Git CLI to clone it. You will also need Terraform; this example was built using version 1.3.1. Creating resources in Cockroach Cloud requires an API key, which you can create in the Cockroach Cloud UI. Finally, to test connectivity between the application Kubernetes cluster running in AWS and the CockroachDB cluster, you will need the AWS CLI and kubectl.

List of required prerequisites:

- Git CLI

- Terraform (>= 1.3.1)

- Cockroach Cloud API Key

- AWS CLI (For KubeConfig Auth)

- kubectl
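A quick way to confirm that the command-line prerequisites are installed is a small shell check like the sketch below. The function name `missing_tools` is made up for this example, and the check only looks for each command on your PATH; it does not verify versions or the API key.

```shell
# Print the name of every listed command that is not found on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Check the CLI prerequisites from the list above.
missing="$(missing_tools git terraform aws kubectl)"
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
else
  echo "All CLI prerequisites found."
fi
```

To check the Terraform version as well, run `terraform version` once the command is found.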

Step 1: Clone the git repository

git clone https://github.com/cockroachlabs-field/cockroach-dedicated-terraform-example.git

Step 2: Grab your API key

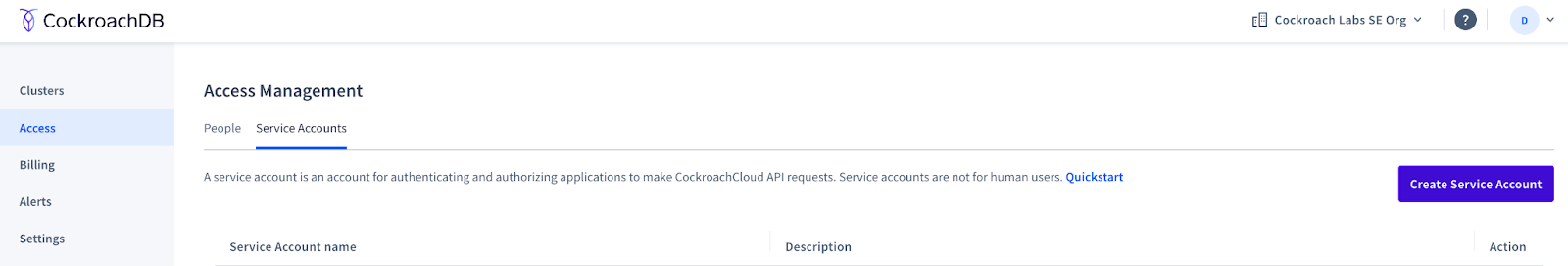

Before we get into the Terraform code and the resources it creates, we need to add the Cockroach Cloud API key as a local environment variable. You can create a key in the Cockroach Cloud UI by first adding a service account and then generating a key associated with it.

Once the service account is created and an API key is generated, export the key into your environment for Terraform to use.

export COCKROACH_API_KEY=<YOUR_API_KEY>
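Because Terraform runs as a child process, the key has to be exported, not just set in the current shell. A minimal sketch of a guard that checks this without printing the key is shown below; `require_env` is a hypothetical helper name, and it assumes the standard `printenv` utility is available.

```shell
# Fail with a clear message if the named environment variable is unset,
# empty, or not exported. printenv only sees exported variables, which is
# also all that a child process such as terraform can see.
require_env() {
  if [ -z "$(printenv "$1")" ]; then
    echo "error: $1 is not set (or not exported)" >&2
    return 1
  fi
}

# Usage: require_env COCKROACH_API_KEY && terraform plan
```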

Step 3: Prepare your variables

The new CockroachDB Cloud Terraform provider requires this environment variable to be present so that it can create resources within the cloud platform. Now let’s take a closer look at the Terraform code itself.

Within the repository there is a file called terraform.tfvars.example. This file is an example of the variables that you can pass to the Terraform run to define the configuration. Make a copy of this file called terraform.tfvars, then update the values as required for your own deployment. As this is just an example, we’re going to create this file locally and store the variables in plain text. For production environments, these values should not be checked into the code repository; if you use CI/CD, pass them in using pipeline secrets or store them in a third-party tool such as Vault.

cluster_name = "dsmbcrdbtftexample"

sql_user_name = "mbds"

sql_user_password = "ThisIsASuperSafePassword"

cloud_provider = "AWS"

cloud_provider_region = ["eu-west-2"]

cluster_nodes = "3"

storage_gib = "150"

machine_type = "m5.xlarge"

cidr_ip = "10.10.0.0"

cidr_mask = "16"

eks_cluster_name = "ds-mb-cockroach-tf-example"

region = "eu-west-2"

vpc_name = "example-vpc"
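The copy step, and one way of keeping the password out of the file, can be sketched in the shell as follows. This relies on Terraform reading environment variables named `TF_VAR_<name>` as input variable values. Values in terraform.tfvars take precedence over these environment variables, so the `sql_user_password` line would need to be removed from the file for this to take effect. The password value is a placeholder.

```shell
# Copy the example file once; do not overwrite an existing terraform.tfvars.
if [ -f terraform.tfvars.example ] && [ ! -f terraform.tfvars ]; then
  cp terraform.tfvars.example terraform.tfvars
fi

# Terraform treats TF_VAR_<name> as the value of input variable <name>,
# so the SQL password does not have to be written to disk.
# Replace the placeholder with your own value.
export TF_VAR_sql_user_password='<YOUR_SQL_PASSWORD>'
```

In a CI/CD pipeline the same variable would typically be populated from a pipeline secret instead of being typed into a shell.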

The Terraform code itself is split into two modules. The first module creates the AWS infrastructure for the Kubernetes cluster where we intend to run our application in the future. We will not cover that module in this post; instead we focus on the CockroachDB Dedicated cluster module. The main.tf for this module defines three resources that are created when the code is executed.

resource "cockroach_cluster" "cockroach" {

name = var.cluster_name

cloud_provider = var.cloud_provider

dedicated = {

storage_gib = var.storage_gib

machine_type = var.machine_type

}

regions = [

for r in var.cloud_provider_region : {

name = r,

node_count = var.cluster_nodes

}

]

}

This first resource is the cluster itself. Within it you define basic information about your cluster, such as the cluster name, the cloud provider, and the hardware configuration. The next resource adds the IP address of the NAT gateway in front of our AWS resources to the cluster’s allow list, so that traffic from them is allowed to reach the CockroachDB cluster.

resource "cockroach_allow_list" "cockroach" {

depends_on = [

cockroach_cluster.cockroach

]

name = var.allow_list_name

cidr_ip = var.ngw_ip

cidr_mask = "32"

ui = true

sql = true

id = cockroach_cluster.cockroach.id

}

The third and final resource in this simple example creates a SQL user and password.

resource "cockroach_sql_user" "cockroach" {

name = var.sql_user_name

password = var.sql_user_password

id = cockroach_cluster.cockroach.id

}

Step 4: Initialize your Terraform

To prepare the directory containing the Terraform code we have just cloned from the GitHub repository, we run terraform init. This command sets up the directory with all the components our code requires, for example by downloading any externally hosted Terraform providers or modules. In this blog that includes the CockroachDB Cloud provider.

terraform init

Step 5: Check your planned outcome

The next stage is to run terraform plan. The terraform plan command creates an execution plan, which lets you preview the changes that Terraform plans to make to your infrastructure. When Terraform creates a plan it:

- Reads the current state of any already-existing remote objects to make sure that the Terraform state is up-to-date.

- Compares the current configuration to the prior state and notes any differences.

- Proposes a set of change actions that should, if applied, make the remote objects match the configuration.

terraform plan

The plan command alone does not actually carry out the proposed changes. You can use this command to check whether the proposed changes match what you expected before you apply the changes or share your changes with your team for broader review.

If Terraform detects that no changes are needed to resource instances or to root module output values, terraform plan will report that no actions need to be taken.
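If you want to script this check, for example before sharing a plan for review, the sketch below wraps terraform plan in a small function. It uses two standard options: `-detailed-exitcode`, which makes the command exit with 0 when there are no changes, 1 on error, and 2 when changes are pending, and `-out`, which saves the plan to a file. The function name `plan_status` and the file name `tfplan` are assumptions made for this example.

```shell
# Run a plan, save it to the file "tfplan", and print a one-line summary.
# Exit codes with -detailed-exitcode: 0 = no changes, 1 = error, 2 = changes.
plan_status() {
  local rc=0
  terraform plan -detailed-exitcode -out=tfplan || rc=$?
  case "$rc" in
    0) echo "no changes" ;;
    2) echo "changes pending" ;;
    *) echo "plan failed"; return 1 ;;
  esac
}

# Usage: plan_status
```

When changes are pending, `terraform show tfplan` displays the saved plan again, and `terraform apply tfplan` applies exactly that plan.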

Step 6: Deploy your infrastructure

To build our cluster there is one final step to complete. Once we are happy with the plan and the resources it is going to create, we can run terraform apply. The -auto-approve flag skips the interactive confirmation prompt, so only use it once you have reviewed the plan.

terraform apply -auto-approve

This creates all the resources defined in the Terraform code in the repository. It takes a number of minutes while Terraform calls the AWS and Cockroach Cloud APIs.

Validate your infrastructure

After a while (up to 30 minutes, depending on the time of day), your cluster will become visible in the Cockroach Cloud UI, where you can observe and monitor the database.

IMPORTANT - The cluster is now managed by Terraform, so avoid making changes to it in the UI.

We are now going to perform a simple network connectivity test to prove communication between the AWS EKS Kubernetes cluster and the CockroachDB cluster running in Cockroach Cloud.

Retrieve the CockroachDB connection string and the DB Console URL for your newly created cluster from the Cockroach Cloud UI (currently the provider does not output the connection string). You can find them by navigating to the newly created cluster and clicking Connect at the top right of the page.

During the execution of terraform apply, a kubeconfig file was generated for accessing the EKS cluster we’ve set up. To validate that the cluster has provisioned correctly, we need to point kubectl at the generated kubeconfig file.

cd modules/aws_infra/

export KUBECONFIG=kubeconfig

To validate the connection to the Kubernetes cluster and ensure everything is running, we can run the command below, which lists the pods in every namespace of the cluster.

kubectl get pods --all-namespaces

Once we’ve validated that the Kubernetes cluster is alive and working we want to leverage the cluster in order to test the network connectivity between it and the Cockroach Cluster. If this test passes, we’ve validated that applications would be able to talk to the database when deployed.

The two commands below each run an Alpine Linux container with curl installed in the default namespace. Replace the hostnames here with those from the connection strings obtained from the Cockroach Cloud UI. The database itself listens on port 26257 and the UI listens on port 8080.

kubectl run -it network-test-1 --image=alpine/curl:3.14 --restart=Never -- curl -vk dsmbcrdbtftexample-6q9.aws-eu-west-2.cockroachlabs.cloud:26257

kubectl run -it network-test-2 --image=alpine/curl:3.14 --restart=Never -- curl -vk admin-dsmbcrdbtftexample-6q9.cockroachlabs.cloud:8080/health

When these two commands complete, you will see output similar to the screenshot; a 200 OK status is printed when checking the health endpoint of the UI.
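Because the test pods are started with --restart=Never, they remain in the default namespace after curl exits, and re-running the same kubectl run commands will fail while pods with those names still exist. A minimal cleanup sketch follows; the function name `cleanup_network_tests` is made up for this example, and the pod names match the two commands above.

```shell
# Delete the two test pods created by the connectivity checks.
# --ignore-not-found makes the command succeed even if a pod is already gone.
cleanup_network_tests() {
  kubectl delete pod network-test-1 network-test-2 --ignore-not-found
}

# Usage: cleanup_network_tests
```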

Database as code

Public cloud providers have very mature offerings when it comes to IaC, allowing DevOps teams to manage their infrastructure as code using market-leading tools like Terraform, bringing all the benefits mentioned above. If SaaS providers like Cockroach Labs want these same teams to move their workloads to alternative technologies like CockroachDB, then they need to dovetail into existing processes. This means delivering the tooling needed to manage a deployment of the database as code.

This is just the first iteration of the CockroachDB Cloud Terraform provider and we are looking forward to additional capabilities being added in the future to make the experience more complete. If you would like to see any specific feature please reach out and let us know!