We’re excited to announce an integration that brings together two cloud-native powerhouses: CockroachDB and Confluent. Businesses can now connect the distributed SQL capabilities of CockroachDB with the real-time event streaming features of Confluent using change data capture. Whether you need real-time analytics, event-driven architectures, or simpler data migrations, this integration is your ticket to a more streamlined, efficient, and powerful data architecture.

Cockroach and Confluent: A Match Made in (Data) Heaven

Using Confluent and Cockroach together strengthens your data architecture in five areas:

1. Reliability

The distributed nature of CockroachDB and Kafka helps your applications stay resilient to failures. Because both are distributed data stores, service can continue if a single node goes down.

2. Scalability

Confluent Cloud and Cockroach Cloud help you future-proof your data architecture. Both are built to scale horizontally, so growing data streams and datasets don't force you to rework your infrastructure.

3. Cloud-Native Synergy

The true magic happens when you use Confluent Cloud and CockroachDB Cloud together. Both are fully managed services, meaning less time tinkering and more time innovating. Their cloud-native architecture provides auto-scaling and resilience, and consuming both as a service removes operational complexity and the burden of maintenance.

4. Global Reach

Cockroach Cloud offers geo-replicated clusters, and when combined with Confluent’s global presence, you get a solution that’s truly global.

5. Cost Efficiency

Both services offer pay-as-you-go pricing models. You pay only for what you use, which keeps costs in line with actual resource usage.

Integrate Cockroach Cloud with Confluent Cloud in just 3 steps

In just three steps, you can set up a resilient, enterprise-grade data pipeline from your CockroachDB cluster to Confluent Cloud, using CockroachDB’s built-in data streaming and bulk export capabilities.

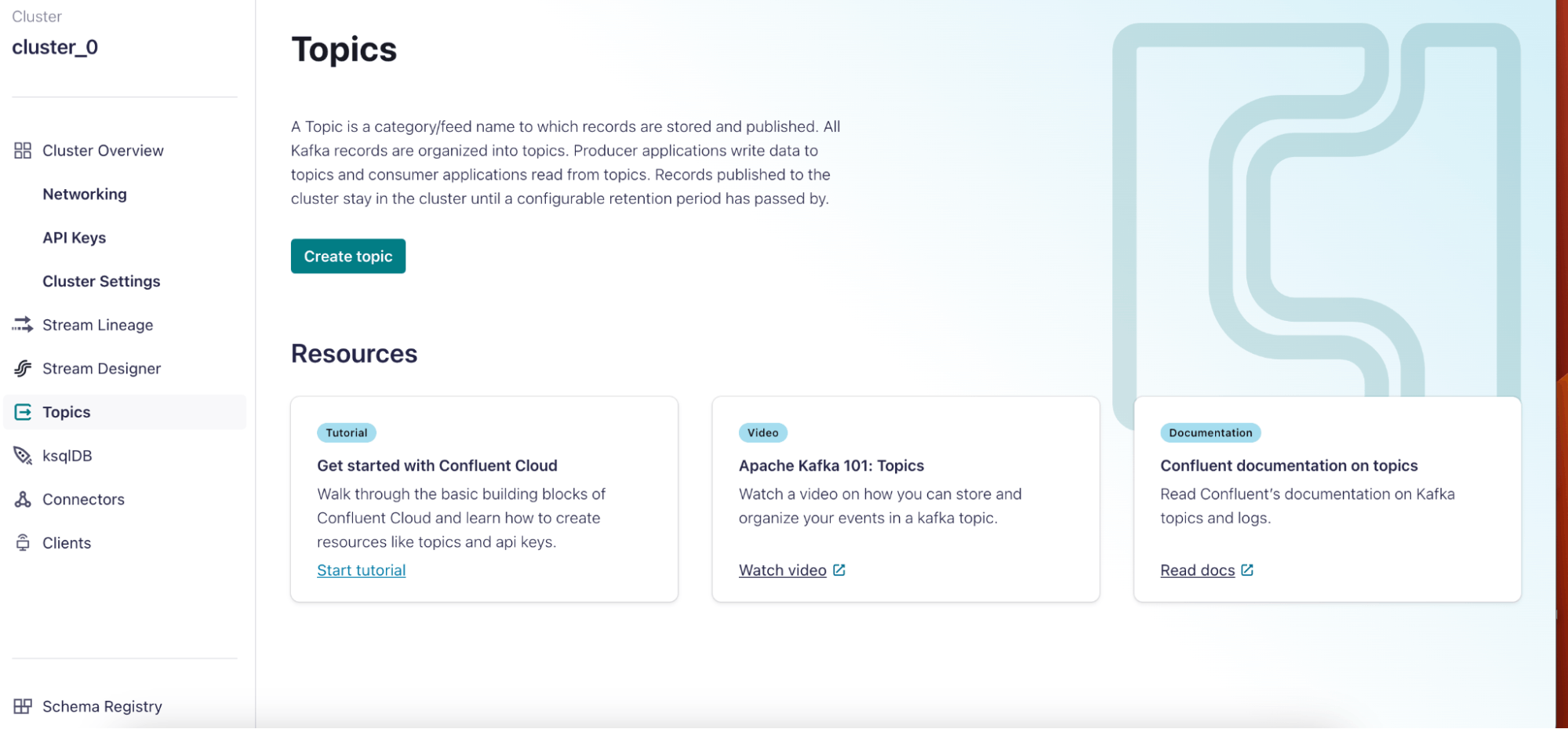

1. Create a topic from the Confluent Cloud console:

Go to the Confluent Cloud Console -> Your Cluster -> Topics -> Create Topic.

This is the topic we will send our changefeed messages to. You can choose a name and number of partitions for your topic. You can also choose more advanced configuration options for your topic such as the retention time of your messages.

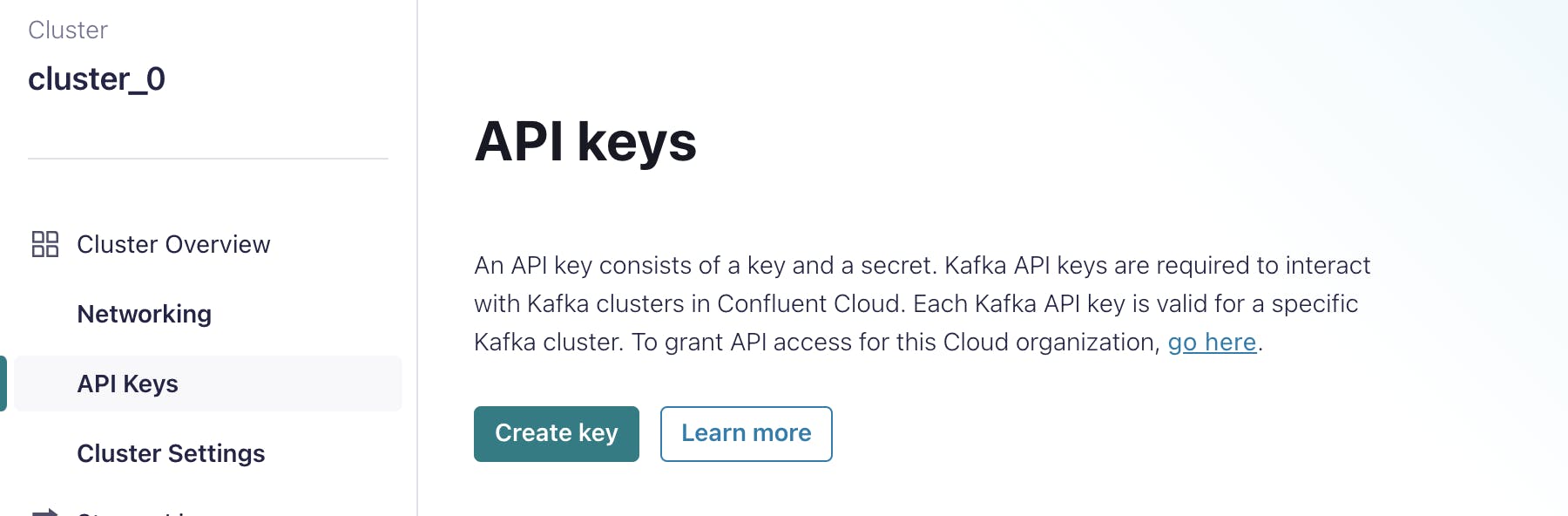
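As a rough illustration of the settings mentioned above, the sketch below collects a topic name, a partition count, and a retention period into one structure. The helper `topic_settings` and the topic name `orders-changefeed` are hypothetical; `retention.ms` is the Kafka topic-level setting that holds retention time in milliseconds. This does not call Confluent Cloud; it only shows how a retention period in days maps to the value a topic stores.

```python
from datetime import timedelta


def topic_settings(name: str, partitions: int, retention: timedelta) -> dict:
    """Collect topic choices in one place (hypothetical helper, not a Confluent API)."""
    if partitions < 1:
        raise ValueError("a topic needs at least one partition")
    # Kafka stores retention time in milliseconds under the key "retention.ms".
    retention_ms = int(retention.total_seconds() * 1000)
    return {
        "name": name,
        "partitions": partitions,
        "config": {"retention.ms": str(retention_ms)},
    }


# Example values are assumptions chosen for illustration only.
settings = topic_settings("orders-changefeed", partitions=6, retention=timedelta(days=7))
print(settings)
```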

2. Get Connection Information for your Confluent Cloud Cluster

Next, to connect to your cluster, you need to get the endpoint and authentication information for the cluster.

First, create an API key for the cluster. Copy the key and secret; you will need them later.

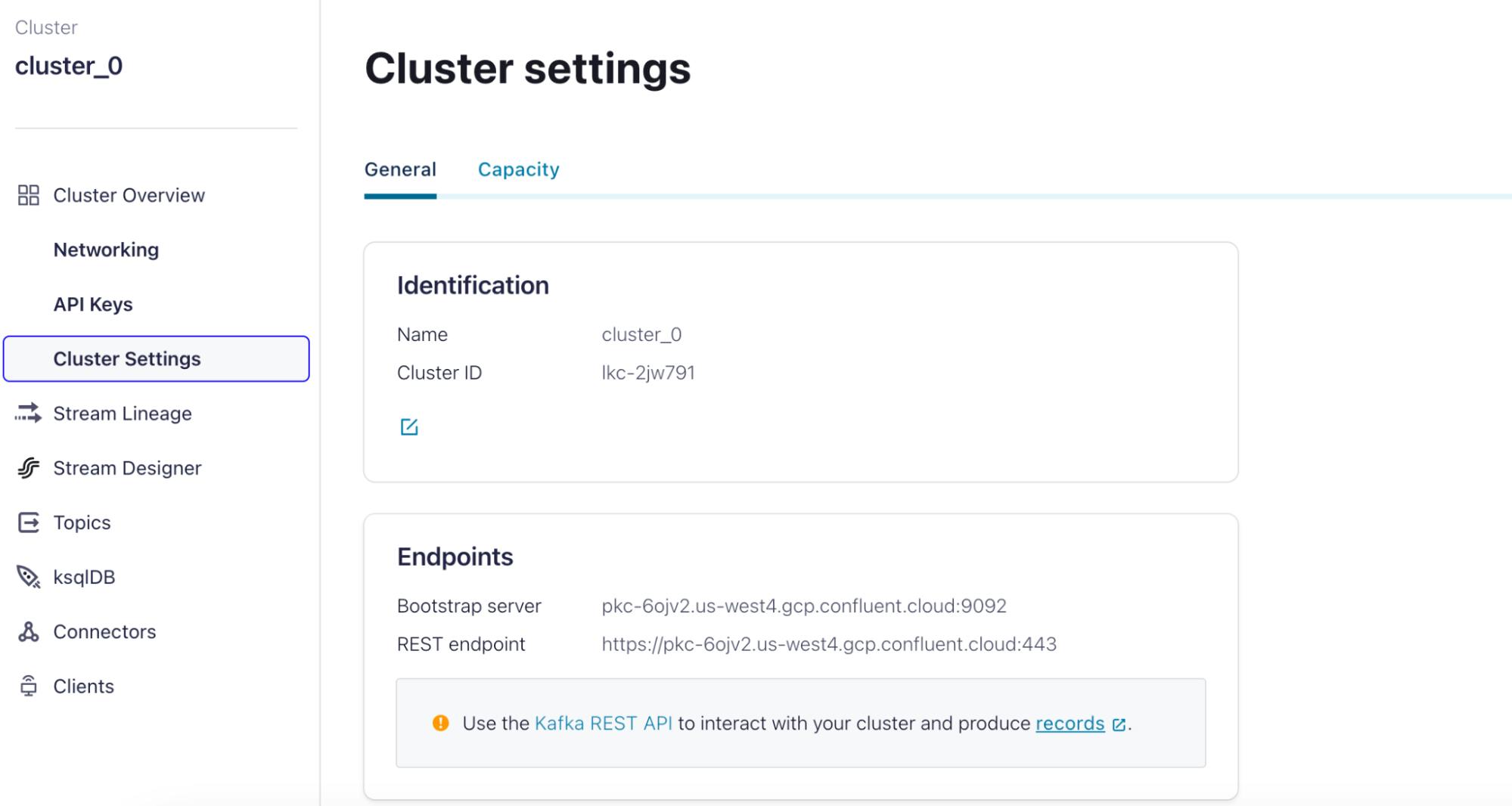

Next, copy the “Bootstrap server endpoint” from the cluster settings page.

You’re now ready to stream data into your Confluent Cloud Cluster!

3. Create changefeed into Confluent Cloud

Go to the SQL shell for your CockroachDB cluster and issue a CREATE CHANGEFEED SQL statement. Your statement should look like this:

CREATE CHANGEFEED FOR TABLE [your tables] INTO 'confluent-cloud://[bootstrap server endpoint]?api_key=[your key]&api_secret=[your secret]&topic=[your topic name]'
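API secrets often contain characters such as `/`, `+`, or `=` that are not safe inside a URI query string, so it can help to build the statement programmatically and percent-encode each value. The sketch below is one way to do that, not an official client. The helper `changefeed_statement` and every example value are hypothetical, and the query parameter names are copied from the statement above; check the CockroachDB changefeed sink documentation for the names your version accepts.

```python
from urllib.parse import quote


def changefeed_statement(tables, bootstrap, api_key, api_secret, topic):
    """Build the CREATE CHANGEFEED statement shown above (hypothetical helper)."""
    # Percent-encode each value so characters like "/" or "+" cannot break the URI.
    uri = (
        f"confluent-cloud://{bootstrap}"
        f"?api_key={quote(api_key, safe='')}"
        f"&api_secret={quote(api_secret, safe='')}"
        f"&topic={quote(topic, safe='')}"
    )
    # Table names are inserted as-is; only pass identifiers you trust.
    return f"CREATE CHANGEFEED FOR TABLE {', '.join(tables)} INTO '{uri}'"


# All values below are made up for illustration.
stmt = changefeed_statement(
    tables=["orders"],
    bootstrap="pkc-example.us-east-1.aws.confluent.cloud:9092",
    api_key="EXAMPLEKEY",
    api_secret="abc/def+ghi=",
    topic="orders-changefeed",
)
print(stmt)
```

The printed statement can be pasted into the SQL shell. Keeping the secret out of shell history and source control is left to whatever secret management you already use.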

After you create the changefeed, you can add additional configuration options.
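The options you choose affect what consumers read from the topic. The sketch below classifies messages, assuming the changefeed uses the default JSON format with the wrapped envelope: the message key is a JSON array of primary key values, the value carries the row under `after` (or `null` for a delete), the `updated` option adds an `updated` timestamp, and the `resolved` option emits separate checkpoint messages. The function `describe_message` and the sample rows are hypothetical, and reading from Kafka itself is left out.

```python
import json


def describe_message(key, value):
    """Classify one changefeed message (assumes JSON format, wrapped envelope)."""
    body = json.loads(value)
    # Resolved-timestamp messages carry no row data and no primary key.
    if "resolved" in body:
        return f"resolved up to {body['resolved']}"
    primary_key = json.loads(key)
    # A null "after" field means the row was deleted.
    if body.get("after") is None:
        return f"delete {primary_key}"
    return f"upsert {primary_key} -> {body['after']}"


# Sample messages are invented for illustration.
samples = [
    (b"[1]", b'{"after": {"id": 1, "status": "shipped"}, "updated": "1700000000000000000.0000000000"}'),
    (b"[2]", b'{"after": null}'),
    (None, b'{"resolved": "1700000001000000000.0000000000"}'),
]
results = [describe_message(k, v) for k, v in samples]
for line in results:
    print(line)
```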

That’s it. You’re done.

The integration of CockroachDB with Confluent, powered by changefeeds, opens doors to endless possibilities. With the cloud advantages of both platforms at your fingertips, you’re geared up for unparalleled efficiency, scalability, and reliability - without the headaches of deploying and managing your own cloud software.

Happy Streaming!