How do you keep databases available and performant at million-QPS scale?

That’s the kind of question that keeps enterprise engineers up at night. And while the right database software can make it easier, achieving high availability at scale is never easy.

At CockroachDB’s recent customer conference RoachFest, Michael Czabator (a software engineer with DoorDash’s storage infrastructure team) spoke about how DoorDash leverages CockroachDB to meet its availability goals at massive scale.

What does CockroachDB at DoorDash look like?

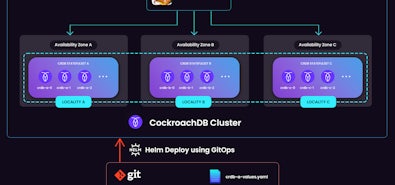

Czabator and the DoorDash storage team run CockroachDB as a fully abstracted service for the company’s engineering organization.

DoorDash’s usage of CockroachDB is growing quickly – the amount of data stored, the size of the largest cluster, and the number of changefeeds have all more than doubled within the past year, and that trend is expected to continue. But here’s a snapshot of the scale DoorDash is operating CockroachDB at right now:

- About 1.2 million queries per second at daily peak hours.

- About 2,300 total nodes spread across 300+ clusters.

- About 1.9 petabytes of data on disk.

- Close to 900 changefeeds.

The largest CockroachDB cluster running is typically 280 TB in size (but has peaked above 600 TB), with a single table that is 122 TB.
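To put those numbers in perspective, here is a quick back-of-the-envelope sketch of what they imply on average. The constants come straight from the figures above; the assumptions are that 1 PB equals 1,000 TB and that "300+" is treated as exactly 300 clusters, so the per-cluster figure is an upper bound. Real clusters will vary widely around these averages.

```python
# Figures quoted in the article (approximate, at daily peak).
PEAK_QPS = 1_200_000
TOTAL_NODES = 2_300
TOTAL_CLUSTERS = 300        # the article says "300+", so treat this as a lower bound
DATA_ON_DISK_TB = 1_900     # 1.9 PB, assuming 1 PB = 1,000 TB
LARGEST_CLUSTER_TB = 280    # typical size of the largest cluster


def fleet_averages():
    """Return rough fleet-wide averages derived from the quoted figures."""
    return {
        "nodes_per_cluster": TOTAL_NODES / TOTAL_CLUSTERS,
        "qps_per_node": PEAK_QPS / TOTAL_NODES,
        "tb_per_node": DATA_ON_DISK_TB / TOTAL_NODES,
        "largest_cluster_share": LARGEST_CLUSTER_TB / DATA_ON_DISK_TB,
    }


if __name__ == "__main__":
    for name, value in fleet_averages().items():
        print(f"{name}: {value:.2f}")
```

Under those assumptions, the fleet averages fewer than eight nodes per cluster, a little over 500 queries per second per node at peak, and under 1 TB per node, with the largest cluster alone typically holding roughly 15% of all data on disk.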

How have the engineers at DoorDash made that happen? Here are three guiding principles for operating databases at massive scale from DoorDash’s storage infrastructure team:

Lessons from DoorDash’s storage infrastructure experts

Don’t be a gatekeeper

DoorDash’s storage infrastructure team serves the company’s other engineering teams, who require databases for a wide range of use cases. At a smaller scale, this could be a somewhat manual process, but at DoorDash’s scale, that approach would turn the storage team into a gatekeeping bottleneck that holds back other engineering projects. Instead, they’re building increasingly powerful self-serve offerings for internal use. These self-serve tools enable DoorDash engineers to leverage CockroachDB and perform tasks such as schema management, user management, index operations, changefeed creation, and query optimization, while still ensuring safety and security via the guardrails the storage team has created.

For example, while CockroachDB comes with a full suite of observability tools, DoorDash built an internal tool called metrics-explorer to help engineers visualize query performance data as a time series. Users can look at various SQL-related metrics for their databases over time, and clicking on a specific query in metrics-explorer opens CockroachDB’s UI, which provides more granular query-specific detail to help troubleshoot potential issues with query performance.
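The talk doesn't describe how metrics-explorer is built, but the core idea of viewing query performance as a time series can be sketched in a few lines. The example below is purely illustrative: the sample format, the `latency_time_series` function, and the one-minute bucket size are assumptions made for this sketch, not details of DoorDash's tool.

```python
from collections import defaultdict
from statistics import mean


def latency_time_series(samples, bucket_seconds=60):
    """Group (timestamp_s, query_fingerprint, latency_ms) samples into buckets.

    Returns {fingerprint: [(bucket_start_s, mean_latency_ms), ...]},
    with each list sorted by bucket start time.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for ts, fingerprint, latency_ms in samples:
        # Align each sample to the start of its time bucket.
        bucket_start = int(ts // bucket_seconds) * bucket_seconds
        buckets[fingerprint][bucket_start].append(latency_ms)
    return {
        fp: [(start, mean(vals)) for start, vals in sorted(by_bucket.items())]
        for fp, by_bucket in buckets.items()
    }
```

Plotting each fingerprint's list as a line makes regressions easy to spot: a query whose mean latency jumps from one bucket to the next is a natural candidate for the more granular, query-specific view.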

Automate

Just as the storage team works to make using CockroachDB easier for their internal customers, they also work to make the operation and management of so many CockroachDB clusters easier for themselves. Using Argo workflows, they have automated a number of tasks including: repaving, version upgrades, scaling up and down, operation monitoring, user and settings management, and regular health checks.

Automating these processes saves a massive amount of time – roughly 80% of what would otherwise be the engineering workload, Czabator estimates. But it comes with another major benefit too: incident reduction.

Automating these processes means they’re executed the same way every time. In contrast, “if you have a human involved, we’ve seen cases where things can get messed up,” Czabator says. Leveraging automation allows them to reduce the amount of time spent on day-to-day management and operations tasks while also increasing their performance and availability by reducing the chances that human error can lead to performance issues or downtime.
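To make the "same way every time" point concrete, here is a minimal sketch of the pattern behind many automated operations such as repaving or version upgrades: apply a step to one node at a time, and stop at the first failed health check. DoorDash runs its automation in Argo workflows; this Python version only illustrates the control flow, and `rolling_operation`, `apply_step`, `is_healthy`, and `RolloutHalted` are hypothetical names invented for the example.

```python
from typing import Callable, Iterable, List


class RolloutHalted(Exception):
    """Raised when a health check fails and the rollout stops early."""


def rolling_operation(
    nodes: Iterable[str],
    apply_step: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> List[str]:
    """Apply `apply_step` to one node at a time, gating on a health check.

    Returns the nodes that completed successfully. Raises RolloutHalted on
    the first node that fails its health check, leaving later nodes untouched.
    """
    completed: List[str] = []
    for node in nodes:
        apply_step(node)
        if not is_healthy(node):
            raise RolloutHalted(
                f"{node} unhealthy after step; completed={completed}"
            )
        completed.append(node)
    return completed
```

Because the order of steps and the stop condition live in code rather than in a runbook, every run follows the same path, and a failure affects at most one node before the process halts.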

More clusters doesn’t have to mean more hours

One of DoorDash’s core values is “1% better every day.” On the storage team, one of the main ways this has manifested itself is through a regular incident review process – any time an issue triggers an alarm or a page, it also triggers a postgame review after the immediate issue has been resolved, with the goal of answering a single question: how do we prevent this from occurring again?

Those reviews generally lead to minor changes, not massive system overhauls. But this kind of regular incremental improvement pays massive dividends over time. In 2023 at DoorDash, Czabator says, the number of pages/critical alerts is actually trending down despite the fact that usage of CockroachDB has grown significantly during that same period.

In some cases, DoorDash has also worked directly with Cockroach Labs to make improvements to the database itself. For example, after DoorDash noticed that many of its 2023 incidents were caused by full table scans, Cockroach Labs introduced guardrails and a full table scan “threshold” that allowed DoorDash more granular control over where and when full table scans are enabled.
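The article doesn't name the exact settings involved, but CockroachDB exposes session variables that appear to match this description: `disallow_full_table_scans` and `large_full_scan_rows`. As a rough sketch, and assuming those are the relevant knobs, a self-serve tool might generate the statements like this. The `full_scan_guardrail_statements` helper is hypothetical, and the precise semantics and defaults should be checked against the documentation for your CockroachDB version.

```python
def full_scan_guardrail_statements(disallow: bool, large_scan_rows: int) -> list:
    """Build session-level SET statements for full-table-scan guardrails.

    Assumes the CockroachDB session variables `disallow_full_table_scans`
    and `large_full_scan_rows`; this helper only builds SQL strings and
    does not connect to a database.
    """
    if large_scan_rows < 0:
        raise ValueError("large_scan_rows must be non-negative")
    return [
        f"SET disallow_full_table_scans = {'true' if disallow else 'false'};",
        f"SET large_full_scan_rows = {large_scan_rows};",
    ]


if __name__ == "__main__":
    for statement in full_scan_guardrail_statements(True, 1000):
        print(statement)
```

The appeal of a row-count threshold is that small lookup tables can still be scanned in full, while an accidental scan of a very large table is rejected before it can cause an incident.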

The outcome of all of this continuing-improvement work is that DoorDash has been able to significantly scale up its CockroachDB usage without having to scale up the number of hours the storage team dedicates to keeping things running.

More on how DoorDash uses CockroachDB at scale

Check out Michael Czabator’s full RoachFest presentation to learn more about DoorDash and how they’re using CockroachDB at scale.