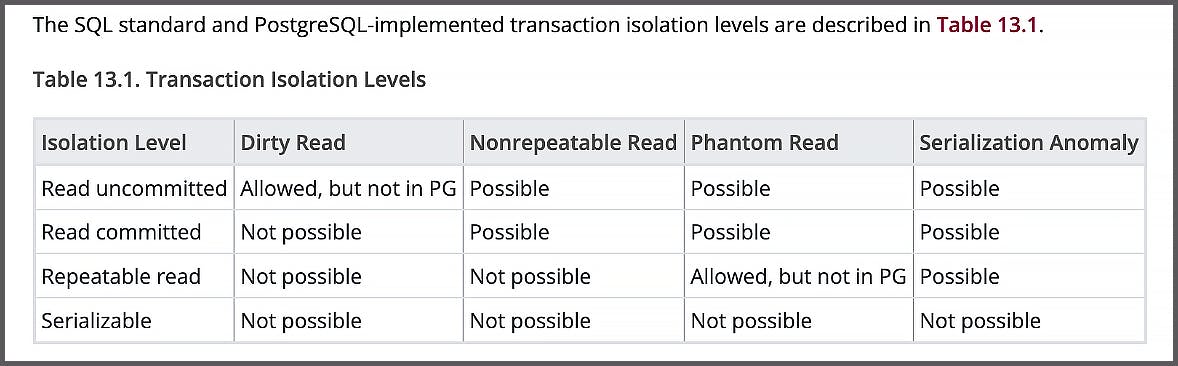

If you’ve ever read the section on transaction isolation in your database documentation, you’ve probably seen some version of this table:

This particular anomaly table is from PostgreSQL 16, but most other databases have something similar in their documentation. For a long time CockroachDB was an exception: prior to version 23.2, CRDB only supported a single isolation level (SERIALIZABLE) so we didn’t need any comparison tables.

Now that CockroachDB 23.2 supports both READ COMMITTED and SERIALIZABLE isolation, though, we need to provide guidance to help our users choose the right transaction isolation level for their applications. Somewhere in our docs we will dutifully inform you that READ COMMITTED permits the nonrepeatable and phantom read anomalies, but that’s actually not the most important thing you should know about them.

The really important information is what these different isolation levels mean for the design and implementation of your app.

What’s wrong with the anomaly table?

For one thing, it’s incomplete. The ANSI SQL standard defined three anomalies in 1992, but since then database researchers have identified and cataloged many more (see, for example, A Critique of ANSI SQL Isolation Levels (Berenson et al, 1995) or Weak Consistency: A Generalized Theory and Optimistic Implementations for Distributed Transactions (Adya, 1999)). But simply expanding the table with new anomalies quickly becomes overwhelming, and while the detailed analysis is useful for database implementers, it’s not very helpful to application developers.

The truth is that no one thinks about their application in terms of isolation anomalies. No one can say This application can tolerate phantom reads but not write skew, so REPEATABLE READ is the best isolation level for it. In practice most people just run with the defaults until they hit a problem, then they consider moving up or down one step. But you don’t have to give up just because anomaly analysis is hard. There are other ways to make this decision.

What does isolation mean for my code?

Your choice of isolation level will have an effect on your code: a correct application written for READ COMMITTED will look a little different from a correct application written for SERIALIZABLE.

In lower isolation levels like READ COMMITTED, it is important to use explicit locks (such as the FOR SHARE and FOR UPDATE clauses on SELECT statements) to ensure that two transactions do not incorrectly overwrite each other’s data. Application developers must pay attention to locking because failure to use explicit locks can cause data bugs, while over-using locks can hurt performance and introduce some of the downsides of higher isolation levels.

In higher isolation levels such as SERIALIZABLE, the major downside is that sometimes two transactions can interfere with each other in such a way that they cannot both be allowed to proceed without violating the isolation guarantees, so one of them must be aborted and retried. To keep this from becoming a user-visible error, the application must contain a retry loop to catch the error and repeat the transaction. This is often a very intrusive change, especially in applications that were originally designed to run at lower isolation levels.

How about an example?

If you run multiple copies of the following transaction at REPEATABLE READ (in PostgreSQL), some of them may return transaction retry errors (with the error code 40001 serialization_failure, even though the isolation level is not SERIALIZABLE).

def transfer(db_conn, from_account, to_account, amount):

with db_conn.transaction() as txn:

balance = txn.execute("SELECT balance FROM accounts WHERE id=?",

from_account).fetchone()[0]

if balance < amount:

return

txn.execute("UPDATE accounts SET balance=balance-? WHERE id=?",

amount, from_account)

txn.execute("UPDATE accounts SET balance=balance+? WHERE id=?",

amount, to_account)

This type of retry error depends on the isolation level: it occurs in REPEATABLE READ and SERIALIZABLE isolation, but not in READ COMMITTED, because READ COMMITTED does not guarantee that the UPDATE statement at line 7 sees the same balance as in the SELECT at line 3 (This is a bug that should be addressed by adding the FOR UPDATE clause to the SELECT, although that will increase the risk of deadlock).

A transaction retry error is returned by the database to indicate that the transaction in progress is unable to proceed and commit, but it may be able to commit if it is re-executed from the beginning. The retry error is necessary because of the way that SQL transactions effectively mix logic between SQL and the application language. The database knows all of the SQL statements executed, but it doesn’t know what happened in the python** if** block in lines 5-6, so it has no choice but to raise the error to the application to let it retry.

Not all causes of retry errors are directly related to the isolation level. Some, such as deadlocks, can occur in any isolation level. In most applications, deadlocks are rare enough that developers just let the transaction fail instead of adding retry loops around every transaction that might fail.

In a distributed database like CockroachDB, hardware, software, or network faults can also cause retry errors, as well as data movement for load balancing. Interestingly, READ COMMITTED isolation can also prevent many of these errors, because it allows earlier non-locking SELECT statements to be disregarded: even if the current statement needs to be retried, in READ COMMITTED we never need to go back and retry older statements and can generally handle everything on the server side without returning an error to the client.

(In the preview version of READ COMMITTED in CockroachDB 23.2, internal load balancing operations can still cause retry errors in READ COMMITTED. This will be fixed before READ COMMITTED becomes generally available in 24.1).

How to choose an isolation level

CockroachDB 23.2 supports SERIALIZABLE and READ COMMITTED isolation levels.

SERIALIZABLE is the highest level of isolation and is the default in CockroachDB. It prevents all isolation anomalies but it is also the most conservative in detecting potential interference between transactions and therefore produces more transaction retry errors.

READ COMMITTED is a weaker isolation level that allows a transaction to see different versions of the data in different statements. It is the default in most databases because it minimizes blocking and interference between transactions, leading to more predictable performance, but it requires some care with explicit locking.

SERIALIZABLE is a good choice if:

- Data accuracy is paramount and you don’t want to risk anomalies due to missing FOR UPDATE locks.

- You are able to use abstractions in your application that let you avoid repeating the retry loop code throughout the app.

- You have a retry loop at another level that obviates the need for retries in the app server. For example, a mobile app will often retry failed API calls to cover for flaky networks, and this retry can also cover issues related to serializability.

READ COMMITTED is a good choice if:

- You are migrating an application that was originally developed for a different database that used this isolation level.

- It is difficult to modify the application to handle transaction retry errors

- Consistently low latency is important for the application and full transaction retries are too expensive.

Conclusion

The practical differences between the various transaction isolation levels is not which data anomalies they prevent or allow, but the impact they have on application development.

Using low isolation levels safely requires careful use of explicit locks, while high isolation levels may require applications to be retried to prevent anomalies. Applying this decision framework will help you choose the right isolation level for your application.