Once you've installed CockroachDB, it's simple to run a secure multi-node cluster locally, using TLS certificates to encrypt network communication.

To try CockroachDB Cloud instead of running CockroachDB yourself, refer to the Cloud Quickstart.

Before you begin

- Make sure you have already installed CockroachDB.

- For quick SQL testing or app development, consider running a single-node cluster instead.

- Note that running multiple nodes on a single host is useful for testing CockroachDB, but it's not suitable for production. To run a physically distributed cluster, see Manual Deployment or Orchestrated Deployment, and review the Production Checklist.

Step 1. Generate certificates

You can use either cockroach cert commands or openssl commands to generate security certificates. This section features the cockroach cert commands.

Create two directories:

```shell
$ mkdir certs my-safe-directory
```

| Directory | Description |
|-----------|-------------|
| `certs` | You'll generate your CA certificate and all node and client certificates and keys in this directory. |
| `my-safe-directory` | You'll generate your CA key in this directory and then reference the key when generating node and client certificates. |

Create the CA (Certificate Authority) certificate and key pair:

```shell
$ cockroach cert create-ca \
--certs-dir=certs \
--ca-key=my-safe-directory/ca.key
```

Create the certificate and key pair for your nodes:

```shell
$ cockroach cert create-node \
localhost \
$(hostname) \
--certs-dir=certs \
--ca-key=my-safe-directory/ca.key
```

Because you're running a local cluster and all nodes use the same hostname (`localhost`), you only need a single node certificate. Note that this is different from running a production cluster, where you would need to generate a certificate and key for each node, issued to all common names and IP addresses you might use to refer to the node as well as to any load balancer instances.

Create a client certificate and key pair for the `root` user:

```shell
$ cockroach cert create-client \
root \
--certs-dir=certs \
--ca-key=my-safe-directory/ca.key
```
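Before moving on, you may want to confirm that the commands above wrote the files you expect. The following is a minimal sketch that assumes the default file names used by `cockroach cert` (`ca.crt`, `node.crt`, `node.key`, `client.root.crt`, `client.root.key`). `check_certs_dir` is a hypothetical helper and is not part of CockroachDB. For a more detailed view, use the built-in `cockroach cert list --certs-dir=certs`.

```shell
# Hypothetical helper: report missing certificate files in a certs directory.
# Assumes the default file names written by the `cockroach cert` commands.
check_certs_dir() {
  local dir="$1" status=0 f
  for f in ca.crt node.crt node.key client.root.crt client.root.key; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      status=1
    fi
  done
  # The CA key belongs in a separate directory (my-safe-directory here),
  # so flag it if it ended up alongside the other certificates.
  if [ -f "$dir/ca.key" ]; then
    echo "unexpected: $dir/ca.key (keep the CA key outside the certs directory)"
    status=1
  fi
  return "$status"
}
```

For example, `check_certs_dir certs && echo "certs directory looks complete"` prints nothing but the final message when all five files are present.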

Step 2. Start the cluster

Use the `cockroach start` command to start the first node:

```shell
$ cockroach start \
--certs-dir=certs \
--store=node1 \
--listen-addr=localhost:26257 \
--http-addr=localhost:8080 \
--join=localhost:26257,localhost:26258,localhost:26259 \
--background
```

You'll see a message like the following:

```
*
* INFO: initial startup completed.
* Node will now attempt to join a running cluster, or wait for `cockroach init`.
* Client connections will be accepted after this completes successfully.
* Check the log file(s) for progress.
*
```

Take a moment to understand the flags you used:

- The `--certs-dir` flag points to the directory holding certificates and keys.
- Since this is a purely local cluster, `--listen-addr=localhost:26257` and `--http-addr=localhost:8080` tell the node to listen only on `localhost`, with port `26257` used for internal and client traffic and port `8080` used for HTTP requests from the Admin UI.
- The `--store` flag indicates the location where the node's data and logs are stored.
- The `--join` flag specifies the addresses and ports of the nodes that will initially comprise your cluster. You'll use this exact `--join` flag when starting other nodes as well.

  For a cluster in a single region, set 3-5 `--join` addresses. Each starting node will attempt to contact one of the join hosts. If a join host cannot be reached, the node will try another address on the list until it can join the gossip network.
- The `--background` flag starts the `cockroach` process in the background so you can continue using the same terminal for other operations.
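Each node on a single host needs its own store directory, listen port, and HTTP port. As a result, the start commands in this step differ only in a node number. The following sketch captures that pattern as a shell function. `start_node` and the `CRDB_DRY_RUN` variable are hypothetical names introduced here; setting `CRDB_DRY_RUN=1` prints the command instead of running it. The port arithmetic simply reproduces the ports used in this tutorial.

```shell
# Hypothetical helper: start local node N using this tutorial's port scheme
# (SQL/RPC port 26256+N, HTTP port 8079+N, store directory nodeN).
# If CRDB_DRY_RUN is set to a non-empty value, the command is printed instead.
JOIN_ADDRS="localhost:26257,localhost:26258,localhost:26259"

start_node() {
  local n="$1"
  ${CRDB_DRY_RUN:+echo} cockroach start \
    --certs-dir=certs \
    --store="node$n" \
    --listen-addr="localhost:$((26256 + n))" \
    --http-addr="localhost:$((8079 + n))" \
    --join="$JOIN_ADDRS" \
    --background
}
```

With this function defined, `start_node 2` and `start_node 3` are equivalent to the two commands in the next step.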

Start two more nodes:

```shell
$ cockroach start \
--certs-dir=certs \
--store=node2 \
--listen-addr=localhost:26258 \
--http-addr=localhost:8081 \
--join=localhost:26257,localhost:26258,localhost:26259 \
--background
```

```shell
$ cockroach start \
--certs-dir=certs \
--store=node3 \
--listen-addr=localhost:26259 \
--http-addr=localhost:8082 \
--join=localhost:26257,localhost:26258,localhost:26259 \
--background
```

These commands are the same as before but with unique `--store`, `--listen-addr`, and `--http-addr` flags.

Use the `cockroach init` command to perform a one-time initialization of the cluster, sending the request to any node:

```shell
$ cockroach init --certs-dir=certs --host=localhost:26257
```

You'll see the following message:

```
Cluster successfully initialized
```

At this point, each node also prints helpful startup details to its log. For example, the following command retrieves node 1's startup details:

```shell
$ grep 'node starting' node1/logs/cockroach.log -A 11
```

The output will look something like this:

```
CockroachDB node starting at
build:               CCL v20.1.17 @ 2021-05-17 00:00:00 (go1.12.6)
webui:               https://localhost:8080
sql:                 postgresql://root@localhost:26257?sslcert=certs%2Fclient.root.crt&sslkey=certs%2Fclient.root.key&sslmode=verify-full&sslrootcert=certs%2Fca.crt
RPC client flags:    cockroach <client cmd> --host=localhost:26257 --certs-dir=certs
logs:                /Users/<username>/node1/logs
temp dir:            /Users/<username>/node1/cockroach-temp966687937
external I/O path:   /Users/<username>/node1/extern
store[0]:            path=/Users/<username>/node1
status:              initialized new cluster
clusterID:           b2537de3-166f-42c4-aae1-742e094b8349
nodeID:              1
```
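The `sql:` line in these startup details is the same connection URL that the workload commands in Step 4 use. The following minimal sketch pulls that URL out with `awk`. It assumes the startup block is laid out as shown above, with a label followed by whitespace and a value. `sql_url_from_startup` is a hypothetical helper name.

```shell
# Hypothetical helper: read node startup details on stdin and print the
# value on the "sql:" line. Assumes the label/value layout shown above.
sql_url_from_startup() {
  awk '$1 == "sql:" { print $2; exit }'
}
```

For example: `grep 'node starting' node1/logs/cockroach.log -A 11 | sql_url_from_startup`.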

Step 3. Use the built-in SQL client

Now that your cluster is live, you can use any node as a SQL gateway. To test this out, let's use CockroachDB's built-in SQL client.

Run the `cockroach sql` command against node 1:

```shell
$ cockroach sql --certs-dir=certs --host=localhost:26257
```

Run some basic CockroachDB SQL statements:

```sql
> CREATE DATABASE bank;
> CREATE TABLE bank.accounts (id INT PRIMARY KEY, balance DECIMAL);
> INSERT INTO bank.accounts VALUES (1, 1000.50);
> SELECT * FROM bank.accounts;
```

```
  id | balance
+----+---------+
   1 | 1000.50
(1 row)
```

Now exit the SQL shell on node 1 and open a new shell on node 2:

```sql
> \q
```

```shell
$ cockroach sql --certs-dir=certs --host=localhost:26258
```

Note: In a real deployment, all nodes would likely use the default port `26257`, and so you wouldn't need to set the port portion of `--host`.

Run the same `SELECT` query as before:

```sql
> SELECT * FROM bank.accounts;
```

```
  id | balance
+----+---------+
   1 | 1000.50
(1 row)
```

As you can see, node 1 and node 2 behaved identically as SQL gateways.

Now create a user with a password, which you will need to access the Admin UI:

```sql
> CREATE USER max WITH PASSWORD 'roach';
```

Exit the SQL shell on node 2:

```sql
> \q
```
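You can also run the gateway check from Step 3 non-interactively. The sketch below passes a statement to each of the three nodes using `cockroach sql --execute`. `query_all_nodes` and the `CRDB_DRY_RUN` variable are hypothetical names introduced for illustration; setting `CRDB_DRY_RUN` prints each command instead of running it.

```shell
# Hypothetical helper: run one SQL statement against nodes 1-3 in turn.
# If CRDB_DRY_RUN is set to a non-empty value, each command is printed instead.
query_all_nodes() {
  local sql="$1" port
  for port in 26257 26258 26259; do
    printf '%s\n' "-- localhost:$port"
    ${CRDB_DRY_RUN:+echo} cockroach sql \
      --certs-dir=certs \
      --host="localhost:$port" \
      --execute="$sql"
  done
}
```

For example, `query_all_nodes "SELECT * FROM bank.accounts;"` should show the same row from every node.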

Step 4. Run a sample workload

CockroachDB also comes with a number of built-in workloads for simulating client traffic. Let's run the workload based on CockroachDB's sample vehicle-sharing application, MovR.

Load the initial dataset:

```shell
$ cockroach workload init movr \
'postgresql://root@localhost:26257?sslcert=certs%2Fclient.root.crt&sslkey=certs%2Fclient.root.key&sslmode=verify-full&sslrootcert=certs%2Fca.crt'
```

```
I190926 16:50:35.663708 1 workload/workloadsql/dataload.go:135 imported users (0s, 50 rows)
I190926 16:50:35.682583 1 workload/workloadsql/dataload.go:135 imported vehicles (0s, 15 rows)
I190926 16:50:35.769572 1 workload/workloadsql/dataload.go:135 imported rides (0s, 500 rows)
I190926 16:50:35.836619 1 workload/workloadsql/dataload.go:135 imported vehicle_location_histories (0s, 1000 rows)
I190926 16:50:35.915498 1 workload/workloadsql/dataload.go:135 imported promo_codes (0s, 1000 rows)
```

Run the workload for 5 minutes:

```shell
$ cockroach workload run movr \
--duration=5m \
'postgresql://root@localhost:26257?sslcert=certs%2Fclient.root.crt&sslkey=certs%2Fclient.root.key&sslmode=verify-full&sslrootcert=certs%2Fca.crt'
```
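Both workload commands pass the same connection URL. In that URL, the slashes in the certificate paths are percent-encoded as `%2F`. The following minimal sketch assembles the URL from a host, port, and certificate directory. `crdb_url` is a hypothetical helper. It only encodes `/`, which is enough for simple relative paths such as `certs` but not for paths containing other reserved characters.

```shell
# Hypothetical helper: build the secure root connection URL used above.
# Only "/" in the certificate directory is percent-encoded (as %2F).
crdb_url() {
  local host="$1" port="$2" dir
  dir=$(printf '%s' "$3" | sed 's|/|%2F|g')
  printf 'postgresql://root@%s:%s?sslcert=%s%%2Fclient.root.crt&sslkey=%s%%2Fclient.root.key&sslmode=verify-full&sslrootcert=%s%%2Fca.crt\n' \
    "$host" "$port" "$dir" "$dir" "$dir"
}
```

For example: `cockroach workload run movr --duration=5m "$(crdb_url localhost 26257 certs)"`.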

Step 5. Access the Admin UI

The CockroachDB Admin UI gives you insight into the overall health of your cluster as well as the performance of the client workload.

On secure clusters, certain pages of the Admin UI can only be accessed by `admin` users.

Run the `cockroach sql` command against node 1:

```shell
$ cockroach sql --certs-dir=certs --host=localhost:26257
```

Assign `max` to the `admin` role (you only need to do this once):

```sql
> GRANT admin TO max;
```

Exit the SQL shell:

```sql
> \q
```

Go to https://localhost:8080. Note that your browser will consider the CockroachDB-created certificate invalid; you'll need to click through a warning message to get to the UI.

Note:If you are using Google Chrome, and you are getting an error about not being able to reach

localhostbecause its certificate has been revoked, go to chrome://flags/#allow-insecure-localhost, enable "Allow invalid certificates for resources loaded from localhost", and then restart the browser. Enabling this Chrome feature degrades security for all sites running onlocalhost, not just CockroachDB's Admin UI, so be sure to enable the feature only temporarily.Log in with the username and password you created earlier (

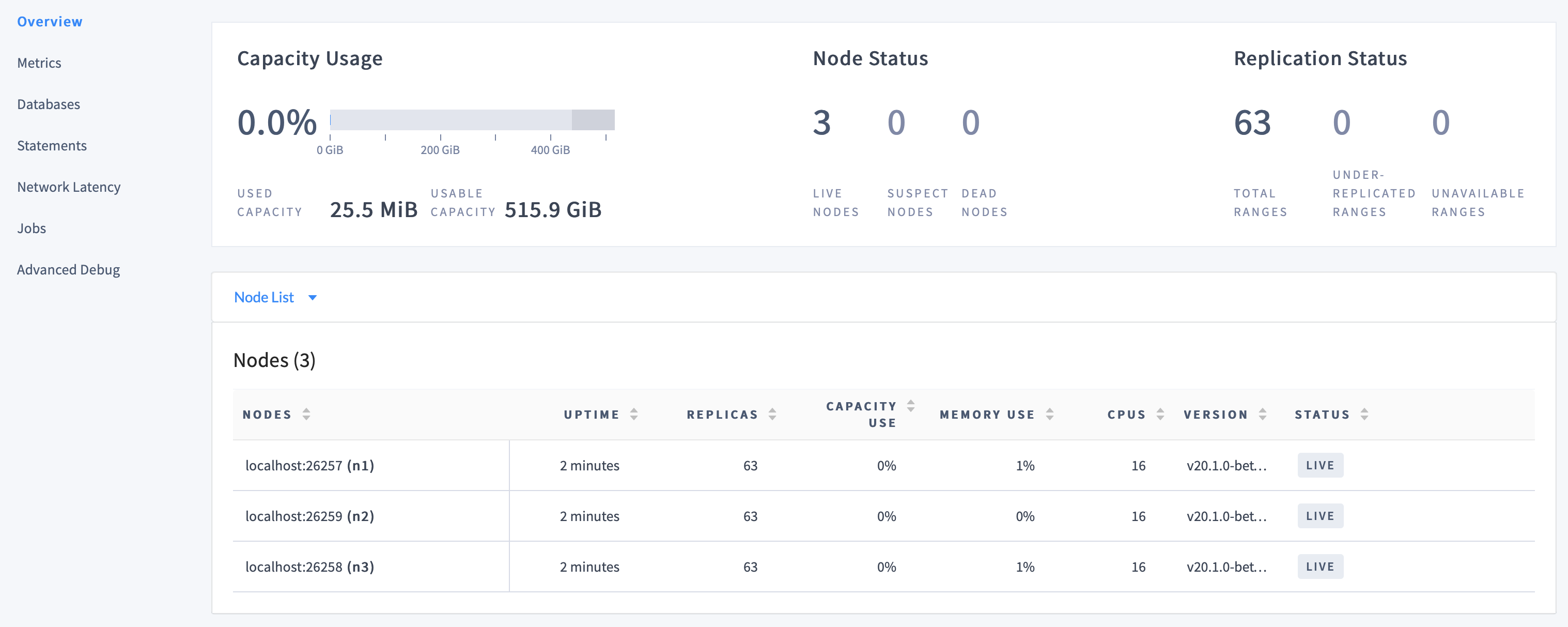

max/roach).On the Cluster Overview, notice that three nodes are live, with an identical replica count on each node:

This demonstrates CockroachDB's automated replication of data via the Raft consensus protocol.

Note:Capacity metrics can be incorrect when running multiple nodes on a single machine. For more details, see this limitation.

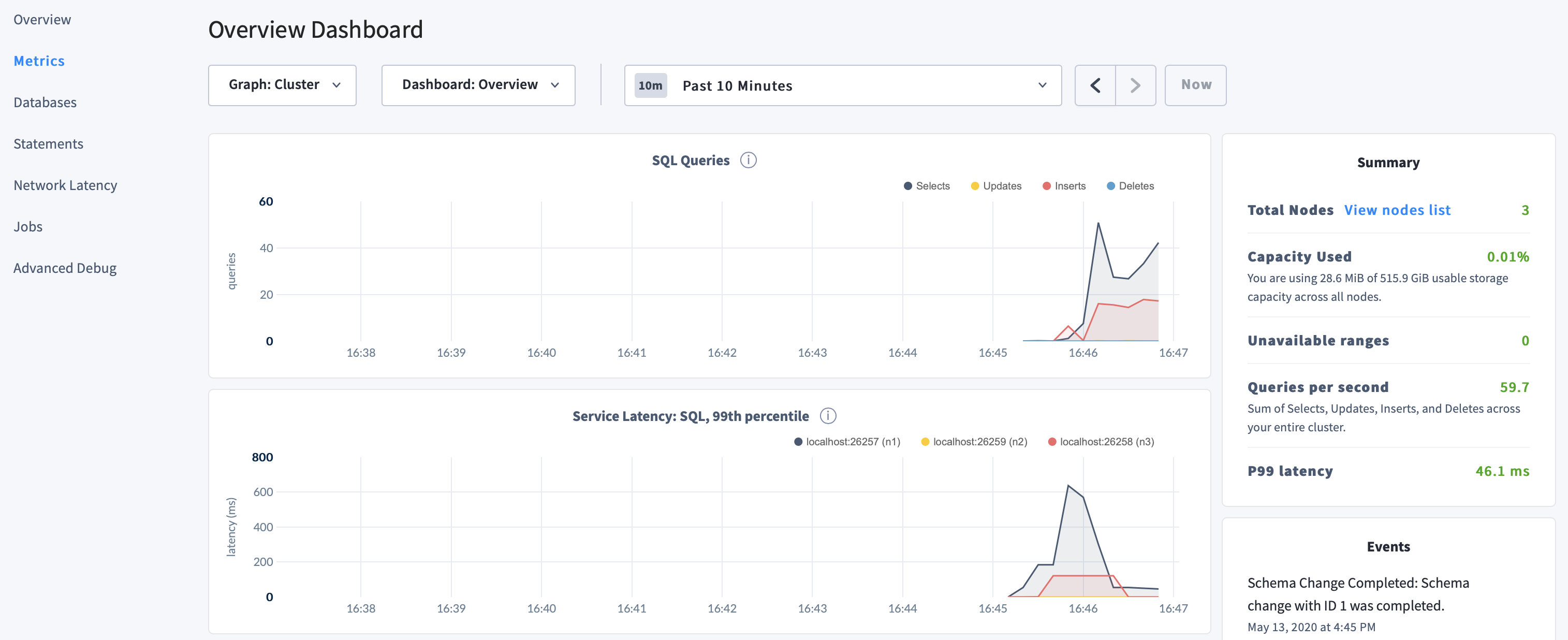

Click Metrics to access a variety of time series dashboards, including graphs of SQL queries and service latency over time:

Use the Databases, Statements, and Jobs pages to view details about your databases and tables, to assess the performance of specific queries, and to monitor the status of long-running operations like schema changes, respectively.
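You can also check from a terminal that each node's HTTP port is serving. The sketch below uses `curl` with the cluster's CA certificate, so no browser certificate warning is involved. It assumes the `/health?ready=1` endpoint on each node's HTTP port. `check_http_health` is a hypothetical helper.

```shell
# Hypothetical helper: probe /health?ready=1 on each given HTTP port,
# verifying the server certificate against the cluster's CA (certs/ca.crt).
check_http_health() {
  local port
  for port in "$@"; do
    if curl --silent --fail --cacert certs/ca.crt \
      "https://localhost:$port/health?ready=1" >/dev/null; then
      echo "localhost:$port ready"
    else
      echo "localhost:$port not ready"
    fi
  done
}
```

For example, `check_http_health 8080 8081 8082` reports one line per node.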

Step 6. Simulate node failure

In a new terminal, run the

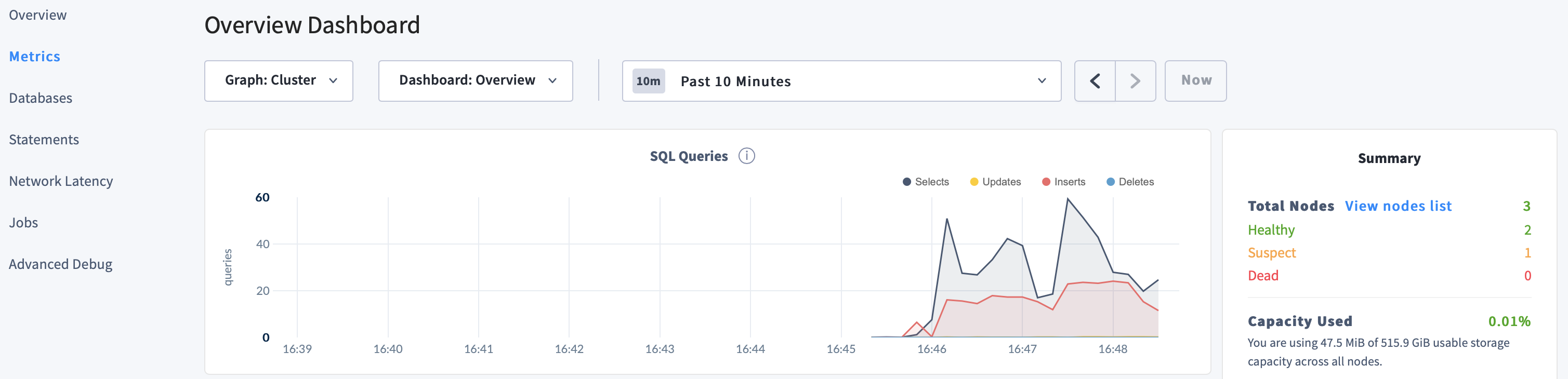

cockroach quitcommand against a node to simulate a node failure:$ cockroach quit --certs-dir=certs --host=localhost:26259Back in the Admin UI, despite one node being "suspect", notice the continued SQL traffic:

This demonstrates CockroachDB's use of the Raft consensus protocol to maintain availability and consistency in the face of failure; as long as a majority of replicas remain online, the cluster and client traffic continue uninterrupted.
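The arithmetic behind this is simple. CockroachDB keeps 3 replicas of each range by default, and Raft needs a majority of a range's replicas to make progress. The sketch below computes that majority, and the number of replica failures a range can survive, for a given replication factor. `quorum_info` is a hypothetical helper.

```shell
# Hypothetical helper: Raft majority and tolerated replica failures for a
# range with the given replication factor.
quorum_info() {
  local rf="$1"
  echo "replicas=$rf majority=$((rf / 2 + 1)) tolerated_failures=$(( (rf - 1) / 2 ))"
}
```

`quorum_info 3` prints `replicas=3 majority=2 tolerated_failures=1`. This is why stopping one node in this three-node cluster leaves every range available.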

Restart node 3:

```shell
$ cockroach start \
--certs-dir=certs \
--store=node3 \
--listen-addr=localhost:26259 \
--http-addr=localhost:8082 \
--join=localhost:26257,localhost:26258,localhost:26259 \
--background
```

Step 7. Scale the cluster

Adding capacity is as simple as starting more nodes with cockroach start.

Start 2 more nodes:

$ cockroach start \ --certs-dir=certs \ --store=node4 \ --listen-addr=localhost:26260 \ --http-addr=localhost:8083 \ --join=localhost:26257,localhost:26258,localhost:26259 \ --background$ cockroach start \ --certs-dir=certs \ --store=node5 \ --listen-addr=localhost:26261 \ --http-addr=localhost:8084 \ --join=localhost:26257,localhost:26258,localhost:26259 \ --backgroundAgain, these commands are the same as before but with unique

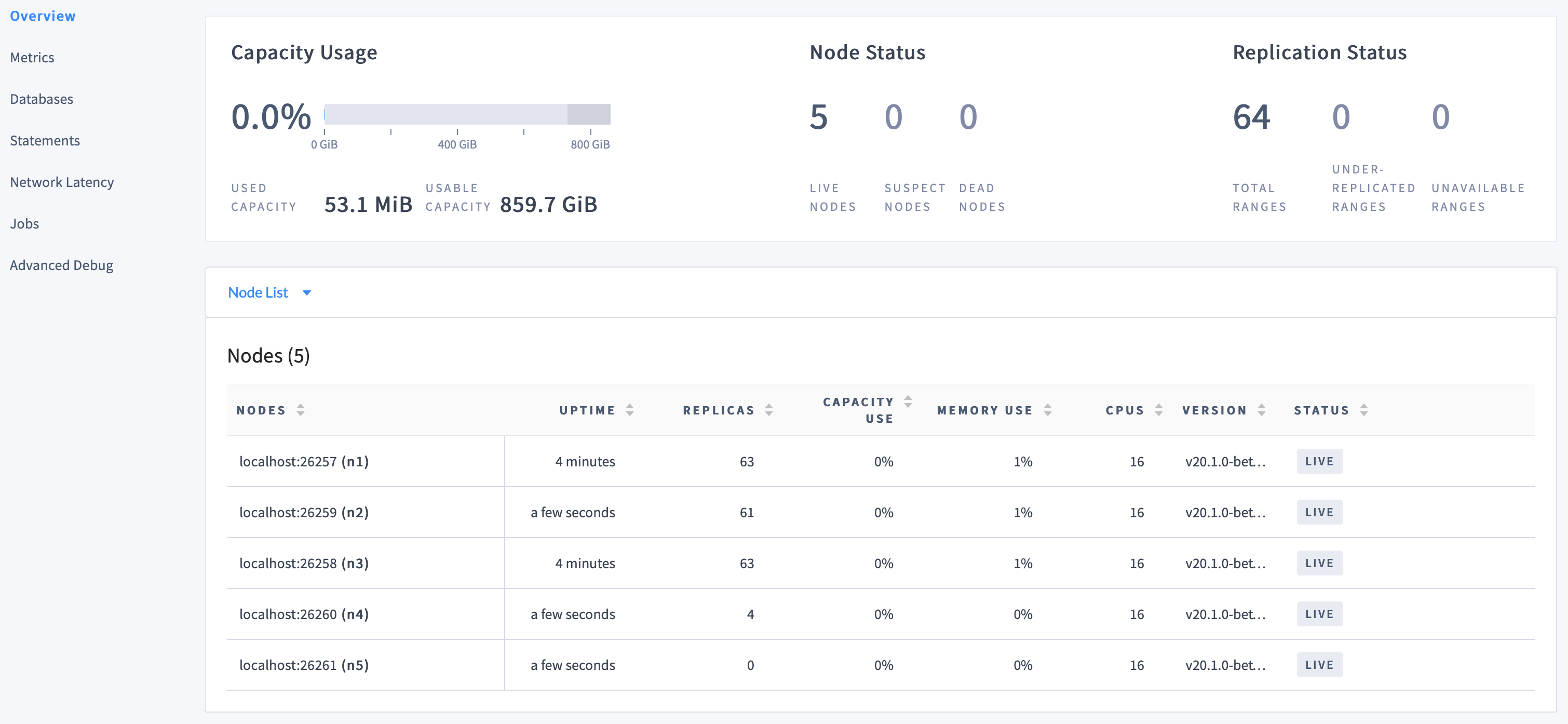

--store,--listen-addr, and--http-addrflags.Back on the Cluster Overview in the Admin UI, you'll now see 5 nodes listed:

At first, the replica count will be lower for nodes 4 and 5. Very soon, however, you'll see those numbers even out across all nodes, indicating that data is being automatically rebalanced to utilize the additional capacity of the new nodes.
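If you prefer to watch this rebalancing from the terminal instead of the Admin UI, `cockroach node status --ranges` reports per-node range and replica details. The sketch below repeats that command a few times with a pause in between. `watch_rebalance` and the `CRDB_DRY_RUN` variable are hypothetical; setting `CRDB_DRY_RUN` prints the command instead of running it.

```shell
# Hypothetical helper: print `cockroach node status --ranges` ROUNDS times,
# sleeping INTERVAL seconds between rounds.
# If CRDB_DRY_RUN is set to a non-empty value, the command is printed instead.
watch_rebalance() {
  local rounds="$1" interval="$2" i=1
  while [ "$i" -le "$rounds" ]; do
    ${CRDB_DRY_RUN:+echo} cockroach node status --ranges \
      --certs-dir=certs --host=localhost:26257
    i=$((i + 1))
    if [ "$i" -le "$rounds" ]; then
      sleep "$interval"
    fi
  done
}
```

For example, `watch_rebalance 6 10` prints the status six times over roughly a minute.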

Step 8. Stop the cluster

When you're done with your test cluster, use the `cockroach quit` command to gracefully shut down each node.

```shell
$ cockroach quit --certs-dir=certs --host=localhost:26257
$ cockroach quit --certs-dir=certs --host=localhost:26258
$ cockroach quit --certs-dir=certs --host=localhost:26259
```

Note: For nodes 4 and 5, the shutdown process will take longer (about a minute each) and will eventually force the nodes to stop. This is because, with only 2 of 5 nodes left, a majority of replicas are not available, and so the cluster is no longer operational.

```shell
$ cockroach quit --certs-dir=certs --host=localhost:26260
$ cockroach quit --certs-dir=certs --host=localhost:26261
```

To restart the cluster at a later time, run the same `cockroach start` commands as earlier from the directory containing the nodes' data stores.

If you do not plan to restart the cluster, you may want to remove the nodes' data stores and the certificate directories:

```shell
$ rm -rf node1 node2 node3 node4 node5 certs my-safe-directory
```
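If you tear this cluster down often, you can script the shutdown sequence. In the following minimal sketch, `stop_nodes` and `CRDB_DRY_RUN` are hypothetical names. Pass the ports in the same order used above so that nodes 4 and 5 are stopped last. Run it before removing the certificate directories, because `cockroach quit` needs `certs`.

```shell
# Hypothetical helper: gracefully stop each node listening on the given ports.
# Run it before deleting the certs directory, which `cockroach quit` needs.
# If CRDB_DRY_RUN is set to a non-empty value, each command is printed instead.
stop_nodes() {
  local port
  for port in "$@"; do
    ${CRDB_DRY_RUN:+echo} cockroach quit --certs-dir=certs --host="localhost:$port"
  done
}
```

For example: `stop_nodes 26257 26258 26259 26260 26261`.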

What's next?

- Learn more about CockroachDB SQL and the built-in SQL client

- Install the client driver for your preferred language

- Build an app with CockroachDB

- Further explore CockroachDB capabilities like fault tolerance and automated repair, geo-partitioning, serializable transactions, and JSON support