A node shutdown terminates the cockroach process on the node.

There are two ways to handle node shutdown:

- To temporarily stop a node and restart it later, drain the node and terminate the cockroach process. This is done when upgrading the cluster version or performing cluster maintenance (e.g., upgrading system software). With a drain, the data stored on the node is preserved, and will be reused if the node restarts within a reasonable timeframe. There is little node-to-node traffic involved, which makes a drain lightweight.

- To permanently remove the node from the cluster, drain and then decommission the node prior to terminating the cockroach process. This is done when scaling down a cluster or reacting to hardware failures. With a decommission, the data is moved out of the node. Replica rebalancing creates network traffic throughout the cluster, which makes a decommission heavyweight.

This guidance applies to manual deployments. If you have a Kubernetes deployment, terminating the cockroach process is handled through the Kubernetes pods. The kubectl drain command is used for routine cluster maintenance. For details on this command, see the Kubernetes documentation.

Also see our documentation for cluster upgrades and cluster scaling on Kubernetes.

This page describes:

- The details of the node shutdown sequence.

- How to prepare for graceful shutdown by implementing connection retry logic and coordinating load balancer, process manager, and cluster settings.

- How to perform node shutdown by draining or decommissioning the node.

Node shutdown sequence

When a node is temporarily stopped, the following stages occur in sequence:

When a node is permanently removed, the following stages occur in sequence:

Decommissioning

To avoid disruptions in query performance, you should manually drain before decommissioning. For more information, see Perform node shutdown.

An operator initiates the decommissioning process on the node.

The node's is_decommissioning field is set to true and its membership status is set to decommissioning, which causes its replicas to be rebalanced to other nodes.

The node's /health?ready=1 endpoint continues to consider the node "ready" so that the node can function as a gateway to route SQL client connections to relevant data.

Draining

An operator initiates the draining process on the node. Draining a node disconnects clients after active queries are completed, and transfers any range leases and Raft leaderships to other nodes, but does not move replicas or data off of the node.

After all replicas on a decommissioning node are rebalanced, the node is automatically drained.

Node drain consists of the following consecutive phases:

- Unready phase: The node's /health?ready=1 endpoint returns an HTTP 503 Service Unavailable response code, which causes load balancers and connection managers to reroute traffic to other nodes. This phase completes when the fixed duration set by server.shutdown.drain_wait is reached.

- SQL drain phase: All active transactions and statements for which the node is a gateway are allowed to complete, and CockroachDB closes the SQL client connections immediately afterward. After this phase completes, CockroachDB closes all remaining SQL client connections to the node. This phase completes either when all transactions have been processed or the maximum duration set by server.shutdown.query_wait is reached.

- DistSQL drain phase: All distributed statements initiated on other gateway nodes are allowed to complete, and DistSQL requests from other nodes are no longer accepted. This phase completes either when all transactions have been processed or the maximum duration set by server.shutdown.query_wait is reached.

- Lease transfer phase: The node's is_draining field is set to true, which removes the node as a candidate for replica rebalancing, lease transfers, and query planning. Any range leases or Raft leaderships must be transferred to other nodes. This phase completes when all range leases and Raft leaderships have been transferred.

Since all range replicas were already removed from the node during the decommissioning stage, this step immediately resolves.

When draining manually, if the above steps have not completed after 10 minutes by default, node draining will stop and must be restarted to continue. For more information, see Drain timeout.

Status change

After decommissioning and draining are both complete, the node membership changes from decommissioning to decommissioned.

This node is now cut off from communicating with the rest of the cluster. A client attempting to connect to a decommissioned node and run a query will get an error.

At this point, the cockroach process is still running. It is only stopped by process termination.

Process termination

An operator terminates the node process.

After draining completes, the node process is automatically terminated (unless the node was manually drained).

A node process termination stops the cockroach process on the node. The node will stop updating its liveness record.

If the node then stays offline for the duration set by server.time_until_store_dead (5 minutes by default), the cluster considers the node "dead" and starts to rebalance its range replicas onto other nodes.

If the node is brought back online, its remaining range replicas will determine whether or not they are still valid members of replica groups. If a range replica is still valid and any data in its range has changed, it will receive updates from another replica in the group. If a range replica is no longer valid, it will be removed from the node.

A node that stays offline for the duration set by the server.time_until_store_dead cluster setting (5 minutes by default) is usually considered "dead" by the cluster. However, a decommissioned node retains decommissioned status.

CockroachDB's node shutdown behavior does not match any of the PostgreSQL server shutdown modes.

Prepare for graceful shutdown

Each of the node shutdown steps is performed in order, with each step commencing once the previous step has completed. However, because some steps can be interrupted, it's best to ensure that all steps complete gracefully.

Before you perform node shutdown, review the following prerequisites to graceful shutdown:

- Configure your load balancer to monitor node health.

- Review and adjust cluster settings and drain timeout as needed for your deployment.

- Implement connection retry logic to handle closed connections.

- Configure the termination grace period of your process manager or orchestration system.

- Ensure that the size and replication factor of your cluster are sufficient to handle decommissioning.

Load balancing

Your load balancer should use the /health?ready=1 endpoint to actively monitor node health and direct SQL client connections away from draining nodes.

To handle node shutdown effectively, the server.shutdown.drain_wait duration must give the load balancer enough time to detect that the node is unready and reroute connections.
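The readiness check a load balancer performs can be approximated with a short probe. The following is a minimal sketch, not a production health checker: the function name `is_node_ready`, the `opener` parameter (present only so the probe can be exercised without a live node), and the example address are assumptions. Only the /health?ready=1 path and the 503 response come from this page.

```python
import urllib.error
import urllib.request


def is_node_ready(http_addr, timeout_s=2.0, opener=urllib.request.urlopen):
    """Return True only if the node's readiness endpoint answers HTTP 200.

    http_addr is the node's HTTP address, for example "https://localhost:8080"
    (a hypothetical value; use the address configured for your node).
    """
    url = http_addr.rstrip("/") + "/health?ready=1"
    try:
        with opener(url, timeout=timeout_s) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        # urlopen raises HTTPError for non-2xx codes, including the
        # 503 Service Unavailable returned by a draining node.
        return False
    except OSError:
        # Connection refused, timeout, or DNS failure: treat as not ready.
        return False
```

A caller would stop routing new connections to any node for which the probe returns False. On a secure cluster the probe would also need TLS configuration appropriate to your certificates, which this sketch leaves out.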

Cluster settings

server.shutdown.drain_wait

server.shutdown.drain_wait sets a fixed duration for the "unready phase" of node drain. Because a load balancer reroutes connections to non-draining nodes within this duration (0s by default), this setting should be coordinated with the load balancer settings.

Increase server.shutdown.drain_wait so that your load balancer is able to make adjustments before this phase times out. Because the drain process waits unconditionally for the server.shutdown.drain_wait duration, do not set this value too high.

For example, HAProxy uses the default settings inter 2000 fall 3 when checking server health. This means that HAProxy considers a node to be down (and temporarily removes the server from the pool) after 3 unsuccessful health checks being run at intervals of 2000 milliseconds. To ensure HAProxy can run 3 consecutive checks before timeout, set server.shutdown.drain_wait to 8s or greater:

SET CLUSTER SETTING server.shutdown.drain_wait = '8s';
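The arithmetic behind a value like 8s can be sketched as follows. This page does not spell out how the figure is derived, so the 2-second safety margin below is an assumption chosen so that the HAProxy defaults (inter 2000, fall 3) reproduce 8s. The function names are hypothetical.

```python
import math


def drain_wait_for_health_checks(inter_ms=2000, fall=3, margin_s=2):
    """Seconds needed for `fall` failed checks at `inter_ms` intervals,
    plus an assumed safety margin, rounded up to a whole second."""
    detection_s = fall * inter_ms / 1000
    return math.ceil(detection_s + margin_s)


def drain_wait_statement(seconds):
    """Build the SET CLUSTER SETTING statement for the computed value."""
    return f"SET CLUSTER SETTING server.shutdown.drain_wait = '{seconds}s';"


if __name__ == "__main__":
    print(drain_wait_statement(drain_wait_for_health_checks()))
```

If your load balancer uses a different interval or failure threshold, substitute those values and choose a margin that suits your network.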

server.shutdown.query_wait

server.shutdown.query_wait sets the maximum duration for the "SQL drain phase" and the maximum duration for the "DistSQL drain phase" of node drain. Active local and distributed queries must complete, in turn, within this duration (10s by default).

Ensure that server.shutdown.query_wait is greater than:

- The longest possible transaction in the workload that is expected to complete successfully.

- The sql.defaults.idle_in_transaction_session_timeout cluster setting, which controls the duration a session is permitted to idle in a transaction before the session is terminated (0s by default).

- The sql.defaults.statement_timeout cluster setting, which controls the duration a query is permitted to run before it is canceled (0s by default).

server.shutdown.query_wait defines the upper bound of the duration, meaning that node drain proceeds to the next phase as soon as the last open transaction completes.

If there are still open transactions on the draining node when the server closes its connections, you will encounter errors. Your application should handle these errors with a connection retry loop.

server.shutdown.lease_transfer_wait

In the "lease transfer phase" of node drain, the server attempts to transfer all range leases and Raft leaderships from the draining node. server.shutdown.lease_transfer_wait sets the maximum duration of each iteration of this attempt (5s by default). Because this phase does not exit until all transfers are completed, changing this value only affects the frequency at which drain progress messages are printed.

In most cases, the default value is suitable. Do not set server.shutdown.lease_transfer_wait to a value lower than 5s. With a lower value, leases can fail to transfer and node drain will not be able to complete.

Since decommissioning a node rebalances all of its range replicas onto other nodes, no replicas will remain on the node by the time draining begins. Therefore, no iterations occur during this phase. This setting can be left alone.

The sum of server.shutdown.drain_wait, server.shutdown.query_wait times two, and server.shutdown.lease_transfer_wait should not be greater than the configured drain timeout.

server.time_until_store_dead

server.time_until_store_dead sets the duration after which a node is considered "dead" and its data is rebalanced to other nodes (5m0s by default). In the node shutdown sequence, this follows process termination.

Before temporarily stopping nodes for planned maintenance (e.g., upgrading system software), if you expect any nodes to be offline for longer than 5 minutes, you can prevent the cluster from unnecessarily moving data off the nodes by increasing server.time_until_store_dead to match the estimated maintenance window:

SET CLUSTER SETTING server.time_until_store_dead = '15m0s';

During this window, the cluster has reduced ability to tolerate another node failure. Be aware that increasing this value therefore reduces fault tolerance.

After completing the maintenance work and restarting the nodes, you would then change the setting back to its default:

RESET CLUSTER SETTING server.time_until_store_dead;

Drain timeout

When draining manually with cockroach node drain, all drain phases must be completed within the duration of --drain-wait (10m by default) or the drain will stop. This can be observed with an ERROR: drain timeout message in the terminal output. To continue the drain, re-initiate the command.

A very long drain may indicate an anomaly, and you should manually inspect the server to determine what blocks the drain.

--drain-wait sets the timeout for all draining phases and is not related to the server.shutdown.drain_wait cluster setting, which configures the "unready phase" of draining. The value of --drain-wait should be greater than the sum of server.shutdown.drain_wait, server.shutdown.query_wait times two, and server.shutdown.lease_transfer_wait.
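The relationship between --drain-wait and the three cluster settings can be checked with simple arithmetic. The sketch below uses hypothetical function names and takes all durations in seconds. The sum is a minimum for --drain-wait rather than a guaranteed upper bound on drain time, since the lease transfer phase does not exit until all transfers complete.

```python
def minimum_drain_timeout_seconds(drain_wait_s, query_wait_s, lease_transfer_wait_s):
    """Sum that --drain-wait should exceed: query_wait is counted twice
    because it bounds both the SQL and the DistSQL drain phases."""
    return drain_wait_s + 2 * query_wait_s + lease_transfer_wait_s


def drain_timeout_is_sufficient(drain_timeout_s, drain_wait_s, query_wait_s,
                                lease_transfer_wait_s):
    """True if the --drain-wait value is greater than the required sum."""
    required = minimum_drain_timeout_seconds(
        drain_wait_s, query_wait_s, lease_transfer_wait_s)
    return drain_timeout_s > required


if __name__ == "__main__":
    # Defaults stated on this page: 0s, 10s, 5s, and --drain-wait of 10m.
    print(minimum_drain_timeout_seconds(0, 10, 5))
    print(drain_timeout_is_sufficient(600, 0, 10, 5))
```

With the defaults, the required sum is 25 seconds, well under the 10-minute default timeout. Raising server.shutdown.query_wait to several minutes is the change most likely to require a larger --drain-wait.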

Connection retry loop

At the end of the "SQL drain phase" of node drain, the server forcibly closes all SQL client connections to the node. If any open transactions were interrupted or not admitted by the server because of the connection closure, they will fail with either a generic TCP-level client error or one of the following errors:

- 57P01 server is shutting down indicates that the server is not accepting new transactions on existing connections.

- 08006 An I/O error occurred while sending to the backend indicates that the current connection was broken (closed by the server).

These errors are an expected occurrence during node shutdown. To be resilient to such errors, your application should use a reconnection and retry loop to reissue transactions that were open when a connection was closed or the server stopped accepting transactions. This allows procedures such as rolling upgrades to complete without interrupting your service.

Upon receiving a connection error, your application must handle the result of a previously open transaction as unknown.

A connection retry loop should:

- Close the current connection.

- Open a new connection. This will be routed to a non-draining node.

- Reissue the transaction on the new connection.

- Repeat while the connection error persists and the retry count has not exceeded a configured maximum.
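The steps above can be sketched as a driver-agnostic loop. This is a minimal illustration, not a drop-in implementation: `ConnectionClosedError` is a hypothetical stand-in for whatever exception your SQL driver raises, and `connect` and `transaction` are caller-supplied callables. Only the two SQLSTATE codes come from this page. Because the outcome of an interrupted transaction is unknown, the sketch assumes the transaction is safe to reissue.

```python
import time

# SQLSTATE codes named in the text above.
SHUTDOWN_SQLSTATES = {"57P01", "08006"}


class ConnectionClosedError(Exception):
    """Hypothetical stand-in for a driver's connection-level error."""

    def __init__(self, sqlstate=None):
        super().__init__(f"connection closed (SQLSTATE {sqlstate})")
        self.sqlstate = sqlstate  # None models a generic TCP-level error


def is_shutdown_error(err):
    """True for errors that are expected while a node is draining."""
    return err.sqlstate is None or err.sqlstate in SHUTDOWN_SQLSTATES


def _close_quietly(conn):
    try:
        conn.close()
    except Exception:
        pass  # the connection may already be broken


def run_with_reconnect(connect, transaction, max_retries=5, delay_s=0.5,
                       sleep=time.sleep):
    """Run transaction(conn), reconnecting and reissuing on shutdown errors."""
    retries = 0
    while True:
        conn = connect()  # a new connection should reach a non-draining node
        try:
            return transaction(conn)
        except ConnectionClosedError as err:
            if not is_shutdown_error(err) or retries >= max_retries:
                raise
            retries += 1
            sleep(delay_s)
        finally:
            _close_quietly(conn)  # always release the current connection
```

In a real application, `connect` would open a connection through the load balancer and `transaction` would execute the statements and commit. Errors raised by `connect` itself are not retried here and would need their own handling.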

Termination grace period

On production deployments, a process manager or orchestration system can disrupt graceful node shutdown if its termination grace period is too short.

If the cockroach process has not terminated at the end of the grace period, a SIGKILL signal is sent to perform a "hard" shutdown that bypasses CockroachDB's node shutdown logic and forcibly terminates the process. This can corrupt log files and, in certain edge cases, can result in temporary data unavailability, latency spikes, uncertainty errors, ambiguous commit errors, or query timeouts. When decommissioning, a hard shutdown will leave ranges under-replicated and vulnerable to another node failure until up-replication completes, which could cause loss of quorum.

- When using systemd to run CockroachDB as a service, set the termination grace period with the TimeoutStopSec setting in the service file.

- When using Kubernetes to orchestrate CockroachDB, set the termination grace period with terminationGracePeriodSeconds in the StatefulSet manifest.

To determine an appropriate termination grace period:

- Run cockroach node drain with --drain-wait and observe the amount of time it takes node drain to successfully complete.

- On Kubernetes deployments, it is helpful to set terminationGracePeriodSeconds to be 5 seconds longer than the configured drain timeout. This allows Kubernetes to remove a pod only after node drain has completed.

- In general, we recommend setting the termination grace period between 5 and 10 minutes. If a node requires more than 10 minutes to drain successfully, this may indicate a technical issue such as inadequate cluster sizing.

Increasing the termination grace period does not increase the duration of a node shutdown. However, the termination grace period should not be excessively long, in case an underlying hardware or software issue causes node shutdown to become "stuck".
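As a rough illustration of the Kubernetes guidance above, the grace period can be derived from the drain timeout and compared with the recommended range. The function names are hypothetical and all values are in seconds.

```python
def termination_grace_period_seconds(drain_timeout_s, buffer_s=5):
    """Grace period set slightly longer than the configured drain timeout."""
    return drain_timeout_s + buffer_s


def within_recommended_range(grace_period_s, low_s=5 * 60, high_s=10 * 60):
    """True if the grace period falls between 5 and 10 minutes."""
    return low_s <= grace_period_s <= high_s


if __name__ == "__main__":
    grace = termination_grace_period_seconds(300)  # a 5-minute drain timeout
    print(grace, within_recommended_range(grace))
```

A result outside the range is not necessarily wrong, but it is a prompt to check why the drain needs that long.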

Size and replication factor

Before decommissioning a node, make sure other nodes are available to take over the range replicas from the node. If fewer nodes are available than the replication factor, CockroachDB will automatically reduce the replication factor (for example, from 5 to 3) to try to allow the decommission to succeed. However, the replication factor will not be reduced lower than 3. If three nodes are not available, the decommissioning process will hang indefinitely until nodes are added or you update the zone configurations to use a replication factor of 1.
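The rule in this paragraph can be expressed as a small pre-check. This is a simplified sketch with a hypothetical function name: it only counts nodes and ignores factors such as localities, zone constraints, and disk capacity, which also affect whether replicas can be placed.

```python
def decommission_outcome(total_nodes, nodes_to_remove, replication_factor):
    """Classify what the paragraph above predicts for a decommission."""
    remaining = total_nodes - nodes_to_remove
    if remaining >= replication_factor:
        return "proceeds"
    if remaining >= 3:
        # Fewer nodes than the replication factor, but at least 3 remain.
        return "proceeds with reduced replication factor"
    return "hangs"


if __name__ == "__main__":
    print(decommission_outcome(3, 1, 3))  # 3-node cluster, 3-way replication
    print(decommission_outcome(5, 1, 3))  # 5-node cluster, 3-way replication
```

The two calls correspond to the 3-node and 5-node scenarios described in this section.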

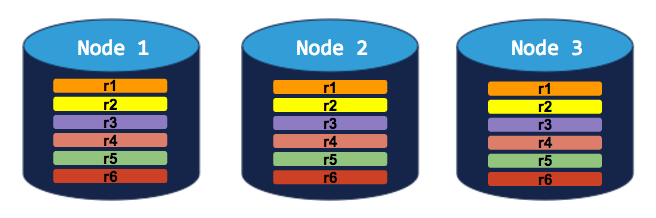

3-node cluster with 3-way replication

In this scenario, each range is replicated 3 times, with each replica on a different node:

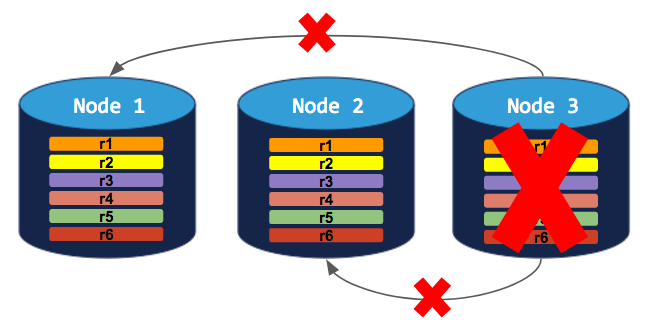

If you try to decommission a node, the process will hang indefinitely because the cluster cannot move the decommissioning node's replicas to the other 2 nodes, which already have a replica of each range:

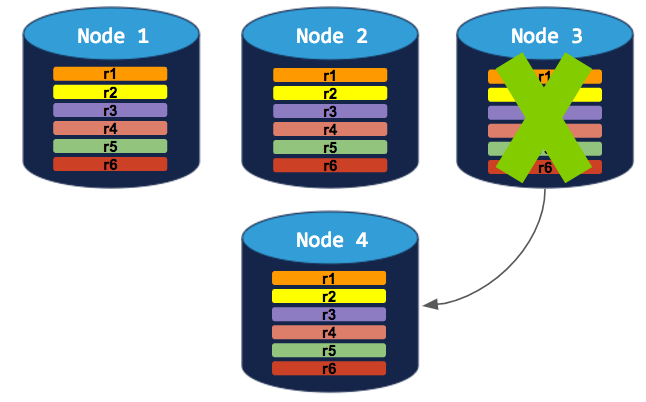

To successfully decommission a node in this cluster, you need to add a 4th node. The decommissioning process can then complete:

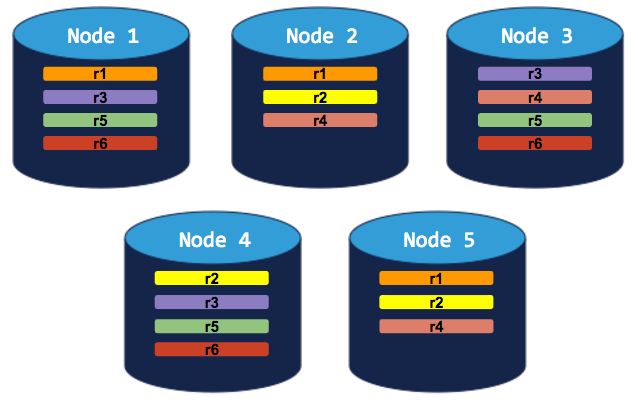

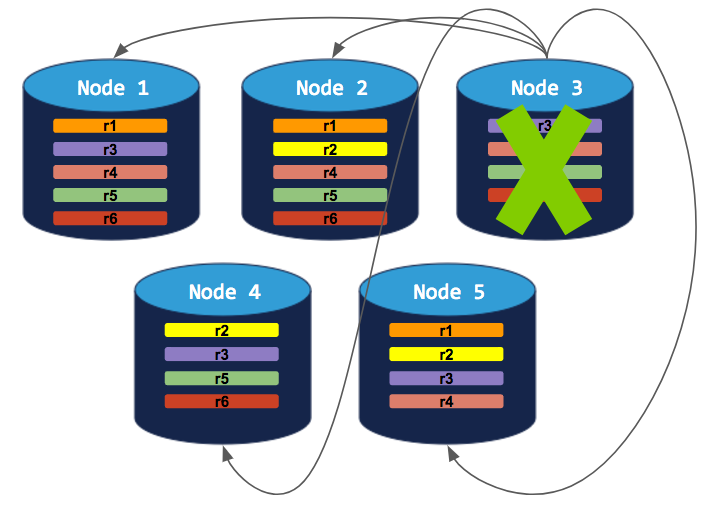

5-node cluster with 3-way replication

In this scenario, like in the scenario above, each range is replicated 3 times, with each replica on a different node:

If you decommission a node, the process will run successfully because the cluster will be able to move the node's replicas to other nodes without doubling up any range replicas:

Perform node shutdown

After preparing for graceful shutdown, do the following to temporarily stop a node. This both drains the node and terminates the cockroach process.

After preparing for graceful shutdown, do the following to permanently remove a node.


Drain the node

Although draining automatically follows decommissioning, you should first run cockroach node drain to manually drain the node of active queries, SQL client connections, and leases before decommissioning. This prevents possible disruptions in query performance. For specific instructions, see the example.

Decommission the node

Run cockroach node decommission to decommission the node and rebalance its range replicas. For specific instructions and additional guidelines, see the example.

Do not terminate the node process, delete the storage volume, or remove the VM before a decommissioning node has changed its membership status to decommissioned. Prematurely terminating the process will prevent the node from rebalancing all of its range replicas onto other nodes gracefully, cause transient query errors in client applications, and leave the remaining ranges under-replicated and vulnerable to loss of quorum if another node goes down.

Terminate the node process

Drain the node and terminate the node process

To drain the node without process termination, see Drain a node manually.

We do not recommend sending SIGKILL to perform a "hard" shutdown, which bypasses CockroachDB's node shutdown logic and forcibly terminates the process. This can corrupt log files and, in certain edge cases, can result in temporary data unavailability, latency spikes, uncertainty errors, ambiguous commit errors, or query timeouts. When decommissioning, a hard shutdown will leave ranges under-replicated and vulnerable to another node failure, causing quorum loss in the window before up-replication completes.

- On production deployments, use the process manager to send SIGTERM to the process.

  - For example, with systemd, run systemctl stop {systemd config filename}.

- When using CockroachDB for local testing:

  - When running a server in the foreground, use ctrl-c in the terminal to send SIGINT to the process.

  - When running with the --background flag, use pkill, kill, or look up the process ID with ps -ef | grep cockroach | grep -v grep and then run kill -TERM {process ID}.
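For local testing scripts that start the server themselves, the same idea can be sketched in Python: send SIGTERM, wait up to a grace period, and report a failure instead of escalating to SIGKILL. The function name `stop_gracefully` is hypothetical, and the sketch assumes a POSIX system, where `Popen.terminate()` sends SIGTERM.

```python
import subprocess


def stop_gracefully(process, grace_period_s):
    """Send SIGTERM to a child process and wait for it to exit.

    Returns the exit code. Raises RuntimeError if the process is still
    running after the grace period, leaving the decision about a hard
    shutdown to the operator.
    """
    process.terminate()  # SIGTERM on POSIX
    try:
        return process.wait(timeout=grace_period_s)
    except subprocess.TimeoutExpired:
        raise RuntimeError(
            f"process {process.pid} still running after {grace_period_s}s"
        ) from None
```

Here `process` would be the `subprocess.Popen` object returned when the script launched the server.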

Monitor shutdown progress

OPS

During node shutdown, progress messages are generated in the OPS logging channel. The frequency of these messages is configured with server.shutdown.lease_transfer_wait. By default, the OPS logs output to a cockroach.log file.

Node decommission progress is reported in node_decommissioning and node_decommissioned events:

grep 'decommission' node1/logs/cockroach.log

I220211 02:14:30.906726 13931 1@util/log/event_log.go:32 ⋮ [-] 1064 ={"Timestamp":1644545670665746000,"EventType":"node_decommissioning","RequestingNodeID":1,"TargetNodeID":4}

I220211 02:14:31.288715 13931 1@util/log/event_log.go:32 ⋮ [-] 1067 ={"Timestamp":1644545670665746000,"EventType":"node_decommissioning","RequestingNodeID":1,"TargetNodeID":5}

I220211 02:16:39.093251 21514 1@util/log/event_log.go:32 ⋮ [-] 1680 ={"Timestamp":1644545798928274000,"EventType":"node_decommissioned","RequestingNodeID":1,"TargetNodeID":4}

I220211 02:16:39.656225 21514 1@util/log/event_log.go:32 ⋮ [-] 1681 ={"Timestamp":1644545798928274000,"EventType":"node_decommissioned","RequestingNodeID":1,"TargetNodeID":5}
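Because these events carry a JSON payload after the `=` marker, they can be extracted programmatically. The following sketch assumes log lines shaped like the samples above. The helper names are hypothetical.

```python
import json


def parse_structured_event(log_line):
    """Return the JSON payload of a structured event line, or None."""
    marker = log_line.find("={")
    if marker == -1:
        return None
    return json.loads(log_line[marker + 1:])


def decommissioned_node_ids(log_lines):
    """Collect TargetNodeID values from node_decommissioned events."""
    node_ids = set()
    for line in log_lines:
        event = parse_structured_event(line)
        if event and event.get("EventType") == "node_decommissioned":
            node_ids.add(event["TargetNodeID"])
    return node_ids
```

Applied to the four sample lines, `decommissioned_node_ids` collects the target node IDs from the two node_decommissioned events.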

Node drain progress is reported in unstructured log messages:

grep 'drain' node1/logs/cockroach.log

I220202 20:51:21.654349 2867 1@server/drain.go:210 ⋮ [n1,server drain process] 299 drain remaining: 15

I220202 20:51:21.654425 2867 1@server/drain.go:212 ⋮ [n1,server drain process] 300 drain details: descriptor leases: 7, liveness record: 1, range lease iterations: 7

I220202 20:51:23.052931 2867 1@server/drain.go:210 ⋮ [n1,server drain process] 309 drain remaining: 1

I220202 20:51:23.053217 2867 1@server/drain.go:212 ⋮ [n1,server drain process] 310 drain details: range lease iterations: 1

W220202 20:51:23.772264 681 sql/stmtdiagnostics/statement_diagnostics.go:162 ⋮ [n1] 313 error polling for statement diagnostics requests: ‹stmt-diag-poll›: cannot acquire lease when draining

E220202 20:51:23.800288 685 jobs/registry.go:715 ⋮ [n1] 314 error expiring job sessions: ‹expire-sessions›: cannot acquire lease when draining

E220202 20:51:23.819957 685 jobs/registry.go:723 ⋮ [n1] 315 failed to serve pause and cancel requests: could not query jobs table: ‹cancel/pause-requested›: cannot acquire lease when draining

I220202 20:51:24.672435 2867 1@server/drain.go:210 ⋮ [n1,server drain process] 320 drain remaining: 0

I220202 20:51:24.984089 1 1@cli/start.go:868 ⋮ [n1] 332 server drained and shutdown completed
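Drain progress can be followed by pulling the `drain remaining` counters out of these lines. This is a minimal sketch with hypothetical helper names, and it assumes the message wording shown in the samples above.

```python
import re

_REMAINING = re.compile(r"drain remaining: (\d+)")


def drain_remaining_values(log_lines):
    """Return the sequence of 'drain remaining' counters, in log order."""
    values = []
    for line in log_lines:
        match = _REMAINING.search(line)
        if match:
            values.append(int(match.group(1)))
    return values


def shutdown_completed(log_lines):
    """True if the final shutdown message appears in the log."""
    return any("server drained and shutdown completed" in line
               for line in log_lines)
```

For the sample output, the counters fall from 15 to 1 to 0 before the completion message appears.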

cockroach node status

Draining status is reflected in the cockroach node status --decommission output:

cockroach node status --decommission --certs-dir=certs --host={address of any live node}

id | address | sql_address | build | started_at | updated_at | locality | is_available | is_live | gossiped_replicas | is_decommissioning | membership | is_draining

-----+-----------------+-----------------+-------------------------+----------------------------+----------------------------+----------+--------------+---------+-------------------+--------------------+----------------+--------------

1 | localhost:26257 | localhost:26257 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:55.07734 | 2022-02-11 02:17:28.202777 | | true | true | 73 | false | active | true

2 | localhost:26258 | localhost:26258 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.203535 | 2022-02-11 02:17:29.465841 | | true | true | 73 | false | active | false

3 | localhost:26259 | localhost:26259 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.406667 | 2022-02-11 02:17:29.588486 | | true | true | 73 | false | active | false

(3 rows)

is_draining == true indicates that the node is either undergoing or has completed the draining process.

Draining and decommissioning statuses are reflected in the cockroach node status --decommission output:

cockroach node status --decommission --certs-dir=certs --host={address of any live node}

id | address | sql_address | build | started_at | updated_at | locality | is_available | is_live | gossiped_replicas | is_decommissioning | membership | is_draining

-----+-----------------+-----------------+-------------------------+----------------------------+----------------------------+----------+--------------+---------+-------------------+--------------------+----------------+--------------

1 | localhost:26257 | localhost:26257 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:55.07734 | 2022-02-11 02:17:28.202777 | | true | true | 73 | false | active | false

2 | localhost:26258 | localhost:26258 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.203535 | 2022-02-11 02:17:29.465841 | | true | true | 73 | false | active | false

3 | localhost:26259 | localhost:26259 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.406667 | 2022-02-11 02:17:29.588486 | | true | true | 73 | false | active | false

4 | localhost:26260 | localhost:26260 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.914003 | 2022-02-11 02:16:39.032709 | | false | false | 0 | true | decommissioned | true

5 | localhost:26261 | localhost:26261 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:57.613508 | 2022-02-11 02:16:39.615783 | | false | false | 0 | true | decommissioned | true

(5 rows)

- is_draining == true indicates that the node is either undergoing or has completed the draining process.

- is_decommissioning == true indicates that the node is either undergoing or has completed the decommissioning process.

- When a node completes decommissioning, its membership status changes from decommissioning to decommissioned.
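These fields can also be read by a script. The sketch below parses pipe-delimited table text laid out like the samples on this page and checks the membership column before a node is terminated. The function names are hypothetical, and the parser assumes the header, separator, and row-count lines appear as shown.

```python
def parse_status_table(output):
    """Parse pipe-delimited table text into a list of row dictionaries."""
    header = None
    rows = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the "-----+-----" separator, and "(N rows)".
        if not line or line.startswith("-") or line.startswith("("):
            continue
        cells = [cell.strip() for cell in line.split("|")]
        if header is None:
            header = cells
        elif len(cells) == len(header):
            rows.append(dict(zip(header, cells)))
    return rows


def safe_to_terminate(row):
    """A decommissioning node may be stopped only once it is decommissioned."""
    return row["membership"] == "decommissioned"
```

Empty cells, such as an unset locality, are preserved as empty strings so that the columns stay aligned with the header.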

stderr

When CockroachDB receives a signal to drain and terminate the node process, this message is printed to stderr:

When CockroachDB receives a signal to terminate the node process, this message is printed to stderr:

initiating graceful shutdown of server

After the cockroach process has stopped, this message is printed to stderr:

server drained and shutdown completed

Examples

These examples assume that you have already prepared for a graceful node shutdown.

Stop and restart a node

To drain and shut down a node that was started in the foreground with cockroach start:

- Press ctrl-c in the terminal where the node is running.

initiating graceful shutdown of server

server drained and shutdown completed

- Filter the logs for draining progress messages. By default, the OPS logs output to a cockroach.log file:

grep 'drain' node1/logs/cockroach.log

I220202 20:51:21.654349 2867 1@server/drain.go:210 ⋮ [n1,server drain process] 299 drain remaining: 15

I220202 20:51:21.654425 2867 1@server/drain.go:212 ⋮ [n1,server drain process] 300 drain details: descriptor leases: 7, liveness record: 1, range lease iterations: 7

I220202 20:51:23.052931 2867 1@server/drain.go:210 ⋮ [n1,server drain process] 309 drain remaining: 1

I220202 20:51:23.053217 2867 1@server/drain.go:212 ⋮ [n1,server drain process] 310 drain details: range lease iterations: 1

W220202 20:51:23.772264 681 sql/stmtdiagnostics/statement_diagnostics.go:162 ⋮ [n1] 313 error polling for statement diagnostics requests: ‹stmt-diag-poll›: cannot acquire lease when draining

E220202 20:51:23.800288 685 jobs/registry.go:715 ⋮ [n1] 314 error expiring job sessions: ‹expire-sessions›: cannot acquire lease when draining

E220202 20:51:23.819957 685 jobs/registry.go:723 ⋮ [n1] 315 failed to serve pause and cancel requests: could not query jobs table: ‹cancel/pause-requested›: cannot acquire lease when draining

I220202 20:51:24.672435 2867 1@server/drain.go:210 ⋮ [n1,server drain process] 320 drain remaining: 0

I220202 20:51:24.984089 1 1@cli/start.go:868 ⋮ [n1] 332 server drained and shutdown completed

The server drained and shutdown completed message indicates that the cockroach process has stopped.

- Start the node to have it rejoin the cluster.

Re-run the cockroach start command that you used to start the node initially. For example:

cockroach start --certs-dir=certs --store=node1 --listen-addr=localhost:26257 --http-addr=localhost:8080 --join=localhost:26257,localhost:26258,localhost:26259

CockroachDB node starting at 2022-02-11 06:25:24.922474 +0000 UTC (took 5.1s)

build: CCL v21.2.0-791-ga0f0df7927 @ 2022/02/02 20:08:24 (go1.17.6)

webui: https://localhost:8080

sql: postgresql://root@localhost:26257/defaultdb?sslcert=certs%2Fclient.root.crt&sslkey=certs%2Fclient.root.key&sslmode=verify-full&sslrootcert=certs%2Fca.crt

sql (JDBC): jdbc:postgresql://localhost:26257/defaultdb?sslcert=certs%2Fclient.root.crt&sslkey=certs%2Fclient.root.key&sslmode=verify-full&sslrootcert=certs%2Fca.crt&user=root

RPC client flags: cockroach <client cmd> --host=localhost:26257 --certs-dir=certs

logs: /Users/ryankuo/node1/logs

temp dir: /Users/ryankuo/node1/cockroach-temp2906330099

external I/O path: /Users/ryankuo/node1/extern

store[0]: path=/Users/ryankuo/node1

storage engine: pebble

clusterID: b2b33385-bc77-4670-a7c8-79d79967bdd0

status: restarted pre-existing node

nodeID: 1

Drain a node manually

You can use cockroach node drain to drain a node separately from decommissioning the node or terminating the node process.

- Run the cockroach node drain command, specifying the address of the node to drain (and optionally a custom drain timeout to allow draining more time to complete):

cockroach node drain --host={address of node to drain} --drain-wait=15m --certs-dir=certs

You will see the draining status print to stderr:

node is draining... remaining: 50

node is draining... remaining: 0 (complete)

ok

- Filter the logs for shutdown progress messages. By default, the OPS logs output to a cockroach.log file:

grep 'drain' node1/logs/cockroach.log

I220204 00:08:57.382090 1596 1@server/drain.go:110 ⋮ [n1] 77 drain request received with doDrain = true, shutdown = false

E220204 00:08:59.732020 590 jobs/registry.go:749 ⋮ [n1] 78 error processing claimed jobs: could not query for claimed jobs: ‹select-running/get-claimed-jobs›: cannot acquire lease when draining

I220204 00:09:00.711459 1596 kv/kvserver/store.go:1571 ⋮ [drain] 79 waiting for 1 replicas to transfer their lease away

I220204 00:09:01.103881 1596 1@server/drain.go:210 ⋮ [n1] 80 drain remaining: 50

I220204 00:09:01.103999 1596 1@server/drain.go:212 ⋮ [n1] 81 drain details: liveness record: 2, range lease iterations: 42, descriptor leases: 6

I220204 00:09:01.104128 1596 1@server/drain.go:134 ⋮ [n1] 82 drain request completed without server shutdown

I220204 00:09:01.307629 2150 1@server/drain.go:110 ⋮ [n1] 83 drain request received with doDrain = true, shutdown = false

I220204 00:09:02.459197 2150 1@server/drain.go:210 ⋮ [n1] 84 drain remaining: 0

I220204 00:09:02.459272 2150 1@server/drain.go:134 ⋮ [n1] 85 drain request completed without server shutdown

The drain request completed without server shutdown message indicates that the node was drained.

Remove nodes

In addition to the graceful node shutdown requirements, observe the following guidelines:

- Before decommissioning nodes, verify that there are no under-replicated or unavailable ranges on the cluster.

- Do not terminate the node process, delete the storage volume, or remove the VM before a decommissioning node has changed its membership status to decommissioned. Prematurely terminating the process will prevent the node from rebalancing all of its range replicas onto other nodes gracefully, cause transient query errors in client applications, and leave the remaining ranges under-replicated and vulnerable to loss of quorum if another node goes down.

- When removing nodes, decommission all nodes at once. Do not decommission the nodes one-by-one, because this incurs unnecessary data movement costs as replicas are passed between decommissioning nodes. All nodes must be fully decommissioned before terminating the node process and removing the data storage.

- If you have a decommissioning node that appears to be hung, you can recommission the node.

Step 1. Get the IDs of the nodes to decommission

Open the Cluster Overview page of the DB Console and note the node IDs of the nodes you want to decommission.

This example assumes you will decommission node IDs 4 and 5 of a 5-node cluster.

Step 2. Drain the nodes manually

Run the cockroach node drain command for each node to be removed, specifying the address of the node to drain:

cockroach node drain --host={address of node 4} --certs-dir=certs

cockroach node drain --host={address of node 5} --certs-dir=certs

You will see the draining status of each node print to stderr:

node is draining... remaining: 50

node is draining... remaining: 0 (complete)

ok

Manually draining the nodes before decommissioning prevents possible disruptions in query performance.

Step 3. Decommission the nodes

Run the cockroach node decommission command with the IDs of the nodes to decommission:

$ cockroach node decommission 4 5 --certs-dir=certs --host={address of any live node}

You'll then see the decommissioning status print to stderr as it changes:

id | is_live | replicas | is_decommissioning | membership | is_draining

-----+---------+----------+--------------------+-----------------+--------------

4 | true | 39 | true | decommissioning | true

5 | true | 34 | true | decommissioning | true

(2 rows)

The is_draining field is true because the nodes were previously drained.

Once the nodes have been fully decommissioned, you'll see zero replicas and a confirmation:

id | is_live | replicas | is_decommissioning | membership | is_draining

-----+---------+----------+--------------------+-----------------+--------------

4 | true | 0 | true | decommissioning | true

5 | true | 0 | true | decommissioning | true

(2 rows)

No more data reported on target nodes. Please verify cluster health before removing the nodes.

The is_decommissioning field remains true after all replicas have been removed from each node.

Do not terminate the node process, delete the storage volume, or remove the VM before a decommissioning node has changed its membership status to decommissioned. Prematurely terminating the process will prevent the node from rebalancing all of its range replicas onto other nodes gracefully, cause transient query errors in client applications, and leave the remaining ranges under-replicated and vulnerable to loss of quorum if another node goes down.
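The zero-replica condition described in this step can be checked mechanically from the status table. The sketch below assumes the table format shown above, with `replicas` as the third pipe-separated column; `replicas_remaining` is a hypothetical helper name, not a cockroach subcommand.

```shell
# replicas_remaining  (hypothetical helper)
# Reads a decommission status table on stdin and prints the total number
# of replicas still reported on the listed nodes.
replicas_remaining() {
  awk -F'|' '
    # Data rows are the ones whose first column is a numeric node ID.
    $1 ~ /^[ \t]*[0-9]+[ \t]*$/ { total += $3 }
    END { print total + 0 }
  '
}

# Example: save the status output to a file, then run
#   replicas_remaining < decommission_status.txt
```

A result of 0 matches the "No more data reported on target nodes" confirmation. It does not replace the membership check in the next step.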

Step 4. Confirm the nodes are decommissioned

Check the status of the decommissioned nodes:

$ cockroach node status --decommission --certs-dir=certs --host={address of any live node}

id | address | sql_address | build | started_at | updated_at | locality | is_available | is_live | gossiped_replicas | is_decommissioning | membership | is_draining

-----+-----------------+-----------------+-------------------------+----------------------------+----------------------------+----------+--------------+---------+-------------------+--------------------+----------------+--------------

1 | localhost:26257 | localhost:26257 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:55.07734 | 2022-02-11 02:17:28.202777 | | true | true | 73 | false | active | false

2 | localhost:26258 | localhost:26258 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.203535 | 2022-02-11 02:17:29.465841 | | true | true | 73 | false | active | false

3 | localhost:26259 | localhost:26259 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.406667 | 2022-02-11 02:17:29.588486 | | true | true | 73 | false | active | false

4 | localhost:26260 | localhost:26260 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.914003 | 2022-02-11 02:16:39.032709 | | false | false | 0 | true | decommissioned | true

5 | localhost:26261 | localhost:26261 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:57.613508 | 2022-02-11 02:16:39.615783 | | false | false | 0 | true | decommissioned | true

(5 rows)

- Membership on the decommissioned nodes should have changed from decommissioning to decommissioned.

- 0 replicas should remain on these nodes.

Once the nodes complete decommissioning, they will appear in the list of Recently Decommissioned Nodes in the DB Console.
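To confirm the membership change without reading the wide table by eye, the status output can be filtered by node ID. This is a minimal sketch that assumes the pipe-separated layout shown above, including a header row that starts with `id` and contains a `membership` column; `node_membership` is a hypothetical helper name.

```shell
# node_membership NODE_ID  (hypothetical helper)
# Reads `cockroach node status --decommission` output on stdin and prints
# the membership value for the given node ID.
node_membership() {
  awk -F'|' -v id="$1" '
    # Trim surrounding whitespace from every column.
    { for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i) }
    # Locate the membership column from the header row.
    $1 == "id" { for (i = 1; i <= NF; i++) if ($i == "membership") col = i; next }
    col && $1 == id { print $col }
  '
}

# Example: save the status output to a file, then run
#   node_membership 4 < node_status.txt
```

Looking the column up by name rather than by position keeps the sketch usable if the set of columns differs between versions.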

Step 5. Terminate the process on decommissioned nodes

We do not recommend sending SIGKILL to perform a "hard" shutdown, which bypasses CockroachDB's node shutdown logic and forcibly terminates the process. This can corrupt log files and, in certain edge cases, can result in temporary data unavailability, latency spikes, uncertainty errors, ambiguous commit errors, or query timeouts. When decommissioning, a hard shutdown will leave ranges under-replicated and vulnerable to another node failure, causing quorum loss in the window before up-replication completes.

On production deployments, use the process manager to send SIGTERM to the process.

- For example, with systemd, run systemctl stop {systemd config filename}.

When using CockroachDB for local testing:

- When running a server in the foreground, use ctrl-c in the terminal to send SIGINT to the process.

- When running with the --background flag, use pkill, kill, or look up the process ID with ps -ef | grep cockroach | grep -v grep and then run kill -TERM {process ID}.

The following messages will be printed:

initiating graceful shutdown of server

server drained and shutdown completed

Remove a dead node

If a node is offline for the duration set by server.time_until_store_dead (5 minutes by default), the cluster considers the node "dead" and starts to rebalance its range replicas onto other nodes.

However, if the dead node is restarted, the cluster will rebalance replicas and leases onto the node. To prevent the cluster from rebalancing data to a dead node that comes back online, do the following:

Step 1. Confirm the node is dead

Check the status of your nodes:

$ cockroach node status --decommission --certs-dir=certs --host={address of any live node}

id | address | sql_address | build | started_at | updated_at | locality | is_available | is_live | gossiped_replicas | is_decommissioning | membership | is_draining

-----+-----------------+-----------------+-------------------------+----------------------------+----------------------------+----------+--------------+---------+-------------------+--------------------+----------------+--------------

1 | localhost:26257 | localhost:26257 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:45:45.970862 | 2022-02-11 05:32:43.233458 | | false | false | 0 | false | active | true

2 | localhost:26258 | localhost:26258 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:46:40.32999 | 2022-02-11 05:42:28.577662 | | true | true | 73 | false | active | false

3 | localhost:26259 | localhost:26259 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:46:47.20388 | 2022-02-11 05:42:27.467766 | | true | true | 73 | false | active | false

4 | localhost:26260 | localhost:26260 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.914003 | 2022-02-11 02:16:39.032709 | | true | true | 73 | false | active | false

(4 rows)

The is_live field of the dead node will be false.

Alternatively, open the Cluster Overview page of the DB Console and check that the node status of the node is DEAD.
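The is_live check can also be scripted against the status output. The sketch below assumes the table layout shown above, with a header row containing an `is_live` column; `not_live_node_ids` is a hypothetical helper name. Note that `is_live = false` alone does not prove the node has been offline for the full server.time_until_store_dead interval, so treat the result as a list of candidates and confirm the DEAD status in the DB Console.

```shell
# not_live_node_ids  (hypothetical helper)
# Reads `cockroach node status --decommission` output on stdin and prints
# the ID of every node whose is_live column is false, one per line.
not_live_node_ids() {
  awk -F'|' '
    # Trim surrounding whitespace from every column.
    { for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i) }
    # Locate the is_live column from the header row.
    $1 == "id" { for (i = 1; i <= NF; i++) if ($i == "is_live") live = i; next }
    live && $1 ~ /^[0-9]+$/ && $live == "false" { print $1 }
  '
}

# Example: save the status output to a file, then run
#   not_live_node_ids < node_status.txt
```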

Step 2. Decommission the dead node

Run the cockroach node decommission command against the address of any live node, specifying the ID of the dead node:

$ cockroach node decommission 1 --certs-dir=certs --host={address of any live node}

id | is_live | replicas | is_decommissioning | membership | is_draining

-----+---------+----------+--------------------+-----------------+--------------

1 | false | 0 | true | decommissioning | true

(1 row)

No more data reported on target nodes. Please verify cluster health before removing the nodes.

Step 3. Confirm the node is decommissioned

Check the status of the decommissioned node:

$ cockroach node status --decommission --certs-dir=certs --host={address of any live node}

id | address | sql_address | build | started_at | updated_at | locality | is_available | is_live | gossiped_replicas | is_decommissioning | membership | is_draining

-----+-----------------+-----------------+-------------------------+----------------------------+----------------------------+----------+--------------+---------+-------------------+--------------------+----------------+--------------

1 | localhost:26257 | localhost:26257 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:45:45.970862 | 2022-02-11 06:07:40.697734 | | false | false | 0 | true | decommissioned | true

2 | localhost:26258 | localhost:26258 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:46:40.32999 | 2022-02-11 05:42:28.577662 | | true | true | 73 | false | active | false

3 | localhost:26259 | localhost:26259 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:46:47.20388 | 2022-02-11 05:42:27.467766 | | true | true | 73 | false | active | false

4 | localhost:26260 | localhost:26260 | v21.2.0-791-ga0f0df7927 | 2022-02-11 02:11:56.914003 | 2022-02-11 02:16:39.032709 | | true | true | 73 | false | active | false

(4 rows)

- Membership on the decommissioned node should have changed from active to decommissioned.

Once the node completes decommissioning, it will appear in the list of Recently Decommissioned Nodes in the DB Console.

Recommission nodes

If you accidentally started decommissioning a node, or have a node with a hung decommissioning process, you can recommission the node. This cancels replica removal from the decommissioning node.

Recommissioning can only cancel an active decommissioning process. If a node has completed decommissioning, you must start a new node. A fully decommissioned node is permanently decommissioned, and cannot be recommissioned.

Step 1. Cancel the decommissioning process

Press ctrl-c in each terminal with an ongoing decommissioning process that you want to cancel.

Step 2. Recommission the decommissioning nodes

Run the cockroach node recommission command with the ID of the node to recommission:

$ cockroach node recommission 1 --certs-dir=certs --host={address of any live node}

The value of is_decommissioning will change back to false:

id | is_live | replicas | is_decommissioning | membership | is_draining

-----+---------+----------+--------------------+------------+--------------

1 | false | 73 | false | active | true

(1 row)

If the decommissioning node has already reached the draining stage, you may need to restart the node after it is recommissioned.

On the Cluster Overview page of the DB Console, the node status of the node should be LIVE. After a few minutes, you should see replicas rebalanced to the nodes.