New in v1.1: This page shows you how to decommission and permanently remove one or more nodes from a CockroachDB cluster. You might do this, for example, when downsizing a cluster or reacting to hardware failures.

For information about temporarily stopping a node, see Stop a Node.

Overview

How It Works

When you decommission a node, CockroachDB lets the node finish in-flight requests, rejects any new requests, and transfers all range replicas and range leases off the node so that it can be safely shut down.

Basic terms:

- Range: CockroachDB stores all user data and almost all system data in a giant sorted map of key-value pairs. This keyspace is divided into "ranges", contiguous chunks of the keyspace, so that every key can always be found in a single range.

- Range Replica: CockroachDB replicates each range (3 times by default) and stores each replica on a different node.

- Range Lease: For each range, one of the replicas holds the "range lease". This replica, referred to as the "leaseholder", is the one that receives and coordinates all read and write requests for the range.
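To make these terms concrete, the following Python sketch models a range as the set of nodes holding its replicas plus the node holding its lease. It is a simplified illustration written for this page, not CockroachDB code: Range and per_store_counts are hypothetical names, and the sketch assumes one store per node. The per-node counts it returns are the kind of numbers the Replicas per Store and Leaseholders per Store graphs show later on this page.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Range:
    """Simplified model of one range (hypothetical, for illustration only)."""
    range_id: int
    # Node IDs holding a replica; a set, so a node holds at most one replica.
    replica_nodes: set[int] = field(default_factory=set)
    # Node ID of the replica holding the range lease (the leaseholder).
    leaseholder: Optional[int] = None

    def __post_init__(self) -> None:
        # The leaseholder must be one of the range's own replicas.
        if self.leaseholder is not None and self.leaseholder not in self.replica_nodes:
            raise ValueError("the leaseholder must be one of the range's replicas")


def per_store_counts(ranges: list[Range]) -> tuple[Counter, Counter]:
    """Return (replicas per node, leases per node), assuming one store per node."""
    replicas = Counter(node for r in ranges for node in r.replica_nodes)
    leases = Counter(r.leaseholder for r in ranges if r.leaseholder is not None)
    return replicas, leases


# Two ranges, each replicated 3 times across nodes 1-3.
ranges = [Range(1, {1, 2, 3}, leaseholder=1), Range(2, {1, 2, 3}, leaseholder=2)]
replicas, leases = per_store_counts(ranges)
```

Here every node holds 2 replicas, while leases are spread over nodes 1 and 2; node 3 holds replicas but no leases.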

Considerations

- Before decommissioning a node, make sure other nodes are available to take over the range replicas from the node. If no other nodes are available, the decommission process will hang indefinitely. See the Examples below for more details.

- If a node has died, for example, due to a hardware failure, do not use the --wait=all flag to decommission the node. Doing so will cause the decommission process to hang indefinitely. Instead, use --wait=live. See Remove a Single Node (Dead) and Remove Multiple Nodes for more details.
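The --wait guidance above reduces to a simple rule, sketched here in Python as a hypothetical helper (choose_wait_flag is not part of CockroachDB). It assumes you already know which of the target nodes are live, for example from the is_live column of the cockroach node status --decommission output shown at the end of this page.

```python
from __future__ import annotations


def choose_wait_flag(target_nodes_live: dict[int, bool]) -> str:
    """Pick a --wait value for `cockroach node decommission` (hypothetical helper).

    target_nodes_live maps each node ID being decommissioned to whether it is live.
    """
    if not target_nodes_live:
        raise ValueError("no target nodes given")
    if all(target_nodes_live.values()):
        # Every target is live, so the default --wait=all will not hang on a dead node.
        return "--wait=all"
    # At least one target is dead; --wait=all would hang, so wait only for live nodes.
    return "--wait=live"
```

For example, choose_wait_flag({4: True, 5: False}) returns "--wait=live" because node 5 is dead.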

Examples

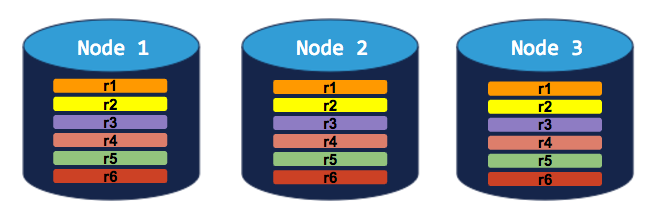

3-node cluster with 3-way replication

In this scenario, each range is replicated 3 times, with each replica on a different node:

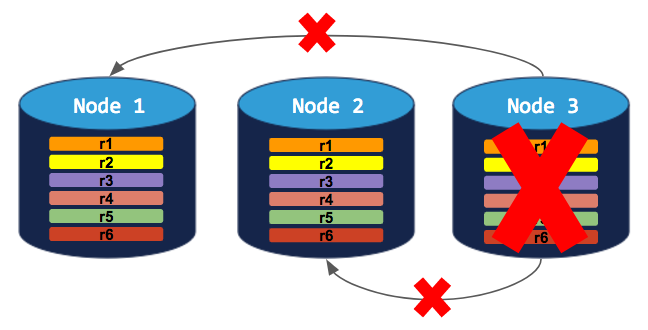

If you try to decommission a node, the process will hang indefinitely because the cluster cannot move the decommissioned node's replicas to the other 2 nodes, which already have a replica of each range:

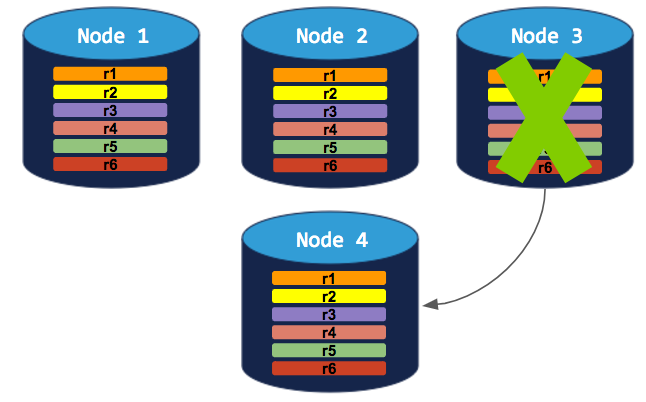

To successfully decommission a node, you need to first add a 4th node:

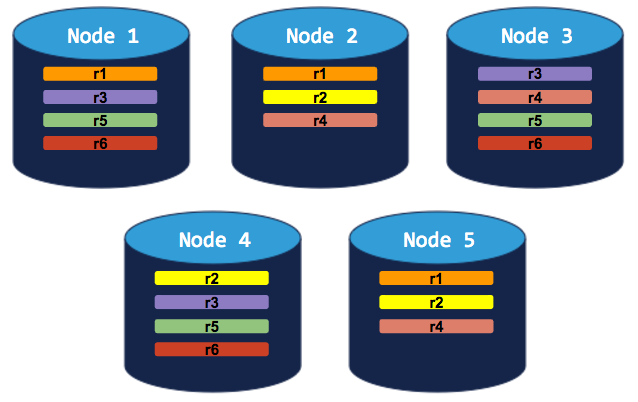

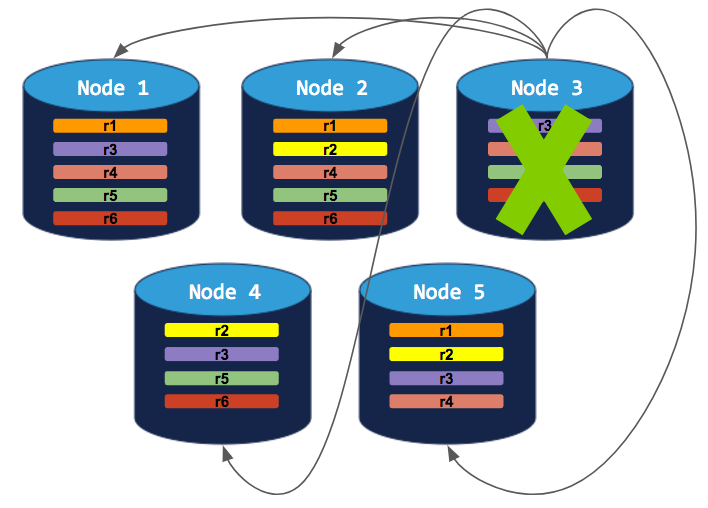

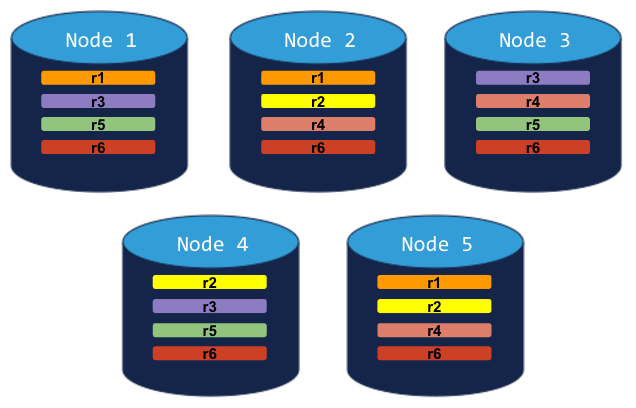

5-node cluster with 3-way replication

As in the scenario above, each range is replicated 3 times, with each replica on a different node:

If you decommission a node, the process will run successfully because the cluster will be able to move the node's replicas to other nodes without doubling up any range replicas:

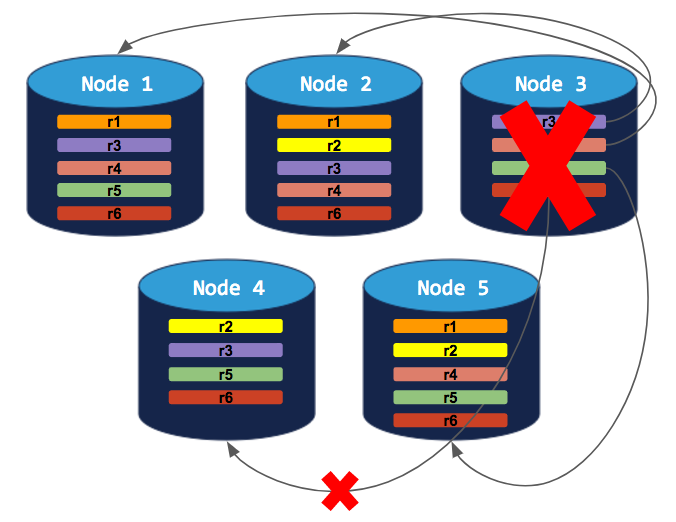

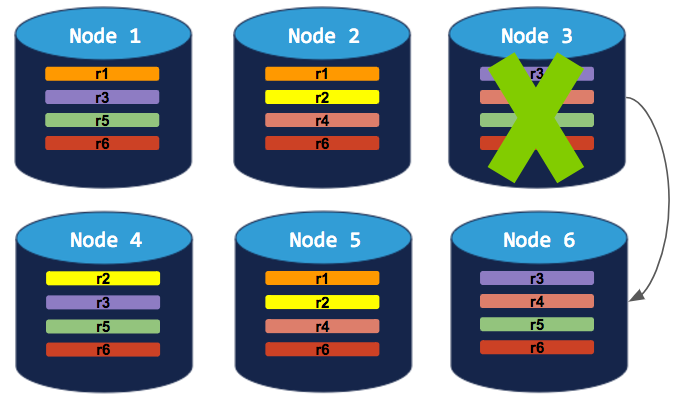

5-node cluster with 5-way replication for a specific table

In this scenario, a custom replication zone has been set to replicate a specific table 5 times (range 6), while all other data is replicated 3 times:

If you try to decommission a node, the cluster will successfully rebalance all ranges but range 6. Since range 6 requires 5 replicas (based on the table-specific replication zone), and since CockroachDB will not allow more than a single replica of any range on a single node, the decommission process will hang indefinitely:

To successfully decommission a node, you need to first add a 6th node:
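The three scenarios above follow from one constraint: a replica on the decommissioning node can only move to a remaining node that does not already hold a replica of the same range. The Python sketch below checks just that constraint. It is a simplified model, not CockroachDB's rebalancing logic, which also weighs factors such as store capacity; the function name stuck_ranges and the replica layouts are hypothetical.

```python
from __future__ import annotations


def stuck_ranges(nodes: set[int], ranges: dict[int, set[int]], node_to_remove: int) -> list[int]:
    """Return IDs of ranges whose replica cannot leave node_to_remove.

    Simplified model (hypothetical): each range's replica on the removed node must
    move to a remaining node that does not already hold a replica of that range.
    Capacity and other placement factors are ignored.
    """
    remaining = nodes - {node_to_remove}
    stuck = []
    for range_id, holders in sorted(ranges.items()):
        if node_to_remove not in holders:
            continue  # nothing to move for this range
        # Candidate targets: remaining nodes without a replica of this range.
        if not (remaining - holders):
            stuck.append(range_id)
    return stuck


# 3-node cluster, 3-way replication: every range already has a replica on every node.
three_way = {1: {1, 2, 3}, 2: {1, 2, 3}}
print(stuck_ranges({1, 2, 3}, three_way, node_to_remove=1))     # [1, 2]: would hang
print(stuck_ranges({1, 2, 3, 4}, three_way, node_to_remove=1))  # []: a 4th node fixes it

# 5 nodes; range 6 belongs to a table with a 5-way replication zone.
mixed = {1: {1, 2, 3}, 2: {2, 3, 4}, 6: {1, 2, 3, 4, 5}}
print(stuck_ranges({1, 2, 3, 4, 5}, mixed, node_to_remove=1))     # [6]
print(stuck_ranges({1, 2, 3, 4, 5, 6}, mixed, node_to_remove=1))  # []
```

Any non-empty result corresponds to a decommission that would hang indefinitely until more nodes are added.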

Remove a Single Node (Live)

Before You Begin

Confirm that there are enough nodes to take over the replicas from the node you want to remove. See the example scenarios above.

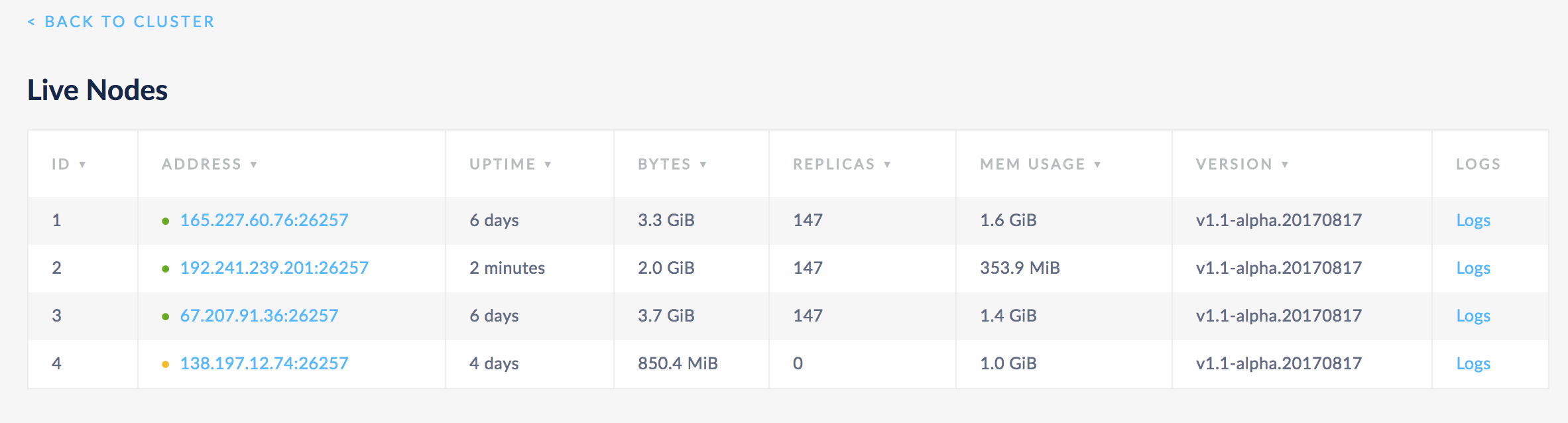

Step 1. Check the node before decommissioning

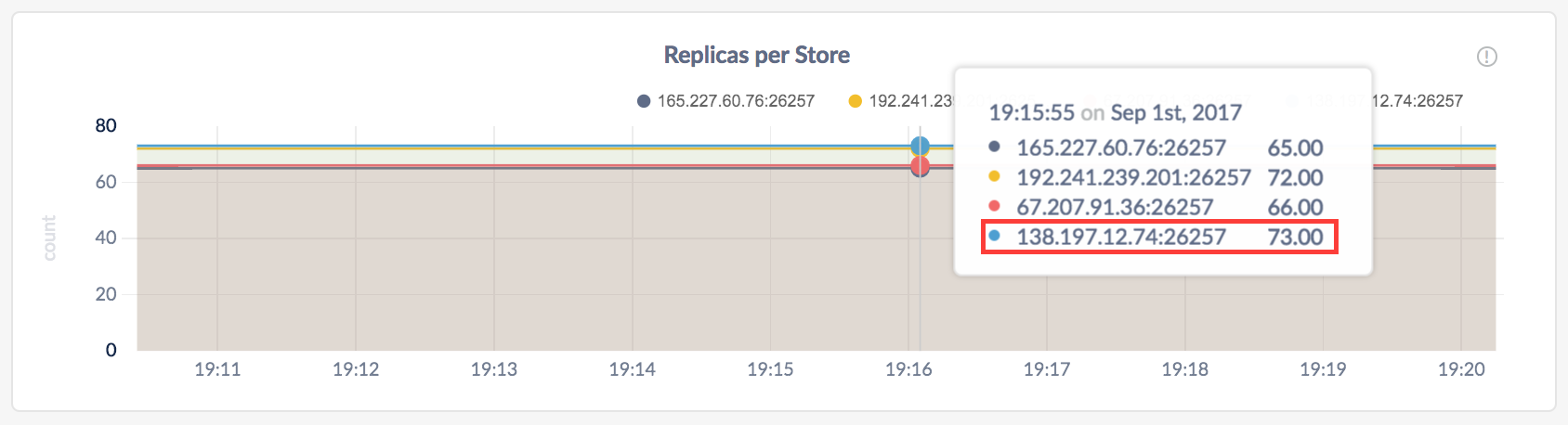

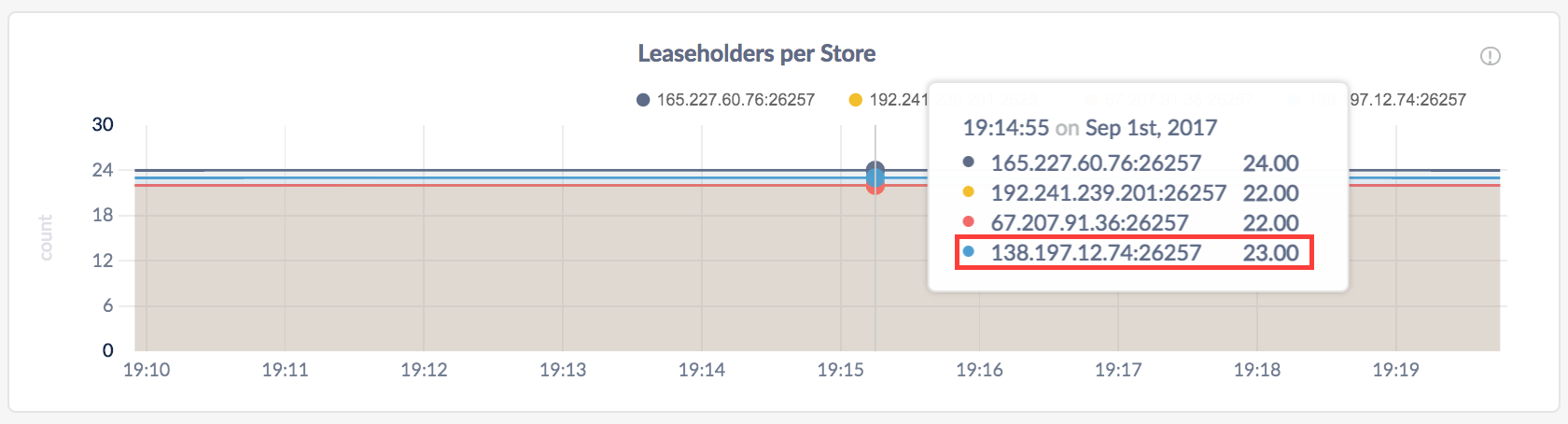

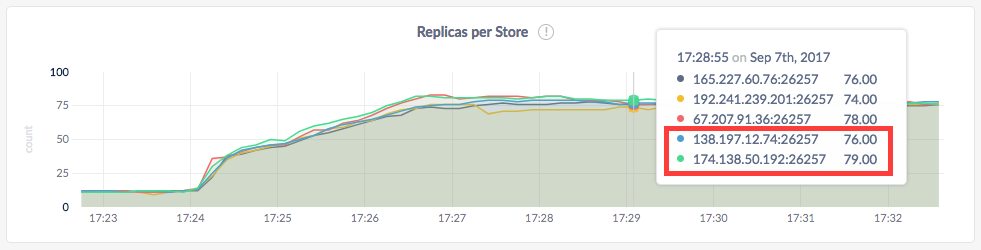

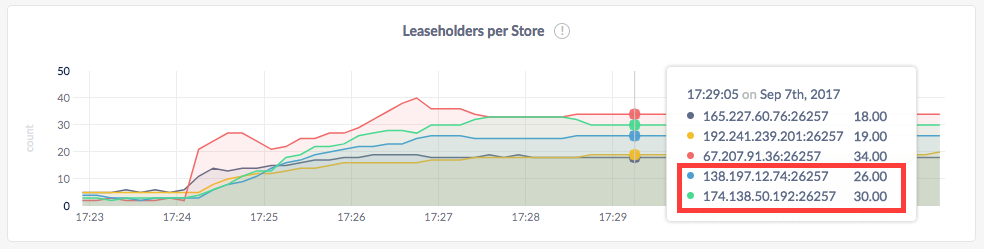

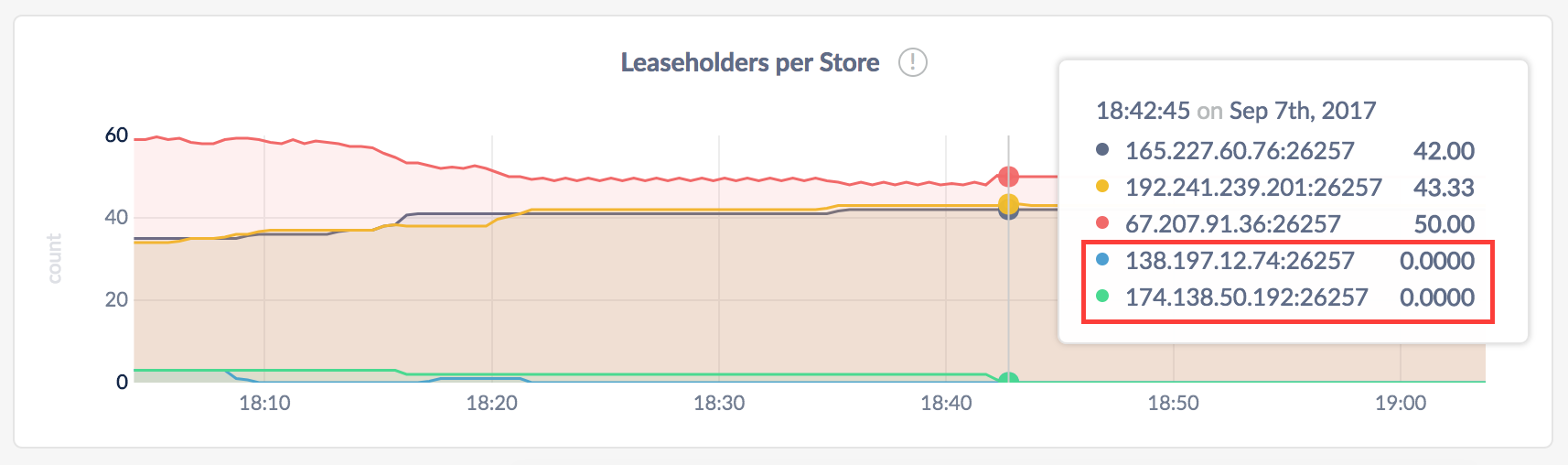

Open the Admin UI, go to the Replication dashboard, and hover over the Replicas per Store and Leaseholders per Store graphs:

Step 2. Decommission and remove the node

SSH to the machine where the node is running and execute the cockroach quit command with the --decommission flag and other required flags:

Secure cluster:

$ cockroach quit --decommission --certs-dir=certs --host=<address of node to remove>

Insecure cluster:

$ cockroach quit --decommission --insecure --host=<address of node to remove>
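If you script this step from Python rather than typing it in a shell, building the argument list explicitly avoids shell quoting problems with values such as the host address. The helper below is a hypothetical sketch that only uses the flags shown above; the host value in the usage comment is a placeholder.

```python
from typing import List, Optional


def quit_decommission_argv(host: str, certs_dir: Optional[str] = None) -> List[str]:
    """Build argv for `cockroach quit --decommission` (hypothetical helper).

    With certs_dir, targets a secure cluster; without it, uses --insecure.
    """
    argv = ["cockroach", "quit", "--decommission"]
    argv.append(f"--certs-dir={certs_dir}" if certs_dir else "--insecure")
    argv.append(f"--host={host}")
    return argv


# Usage (placeholder address; requires the cockroach binary on PATH):
#   import subprocess
#   subprocess.run(quit_decommission_argv("<address of node to remove>", "certs"), check=True)
```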

Every second or so, you'll then see the decommissioning status:

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                73 | false              | false       |
+----+---------+-------------------+--------------------+-------------+
(1 row)

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                73 | true               | false       |
+----+---------+-------------------+--------------------+-------------+
(1 row)

Once the node has been fully decommissioned and stopped, you'll see a confirmation:

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                13 | true               | true        |
+----+---------+-------------------+--------------------+-------------+
(1 row)

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                 0 | true               | true        |
+----+---------+-------------------+--------------------+-------------+
(1 row)

All target nodes report that they hold no more data. Please verify cluster health before removing the nodes.

ok
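The status table repeats until gossiped_replicas reaches 0 for the target node. If you automate decommissioning, a polling loop with a timeout is one way to avoid waiting forever on a decommission that cannot finish (see Considerations). This Python sketch assumes a hypothetical fetch_replicas callback that returns {node ID: gossiped_replicas}, for example parsed from cockroach node status --decommission output; it is not part of any CockroachDB client library.

```python
import time
from typing import Callable, Dict


def wait_for_drain(
    fetch_replicas: Callable[[], Dict[int, int]],
    poll_seconds: float = 1.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until every target node reports 0 gossiped replicas.

    fetch_replicas is a hypothetical callback returning {node_id: gossiped_replicas}.
    Returns True once all counts are 0 (an empty mapping also counts as done),
    or False if the timeout passes first, which may mean the remaining nodes
    cannot take over the replicas.
    """
    waited = 0.0  # approximate: sums poll intervals rather than reading a clock
    while True:
        counts = fetch_replicas()
        if all(n == 0 for n in counts.values()):
            return True
        if waited >= timeout_seconds:
            return False
        sleep(poll_seconds)
        waited += poll_seconds


# Replay the replica counts from the output above (73, 13, 0) without real sleeping.
samples = iter([{4: 73}, {4: 13}, {4: 0}])
print(wait_for_drain(lambda: next(samples), sleep=lambda s: None))  # True
```

The sleep parameter is injectable so the loop can be exercised without waiting in real time.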

Step 3. Check the node and cluster after decommissioning

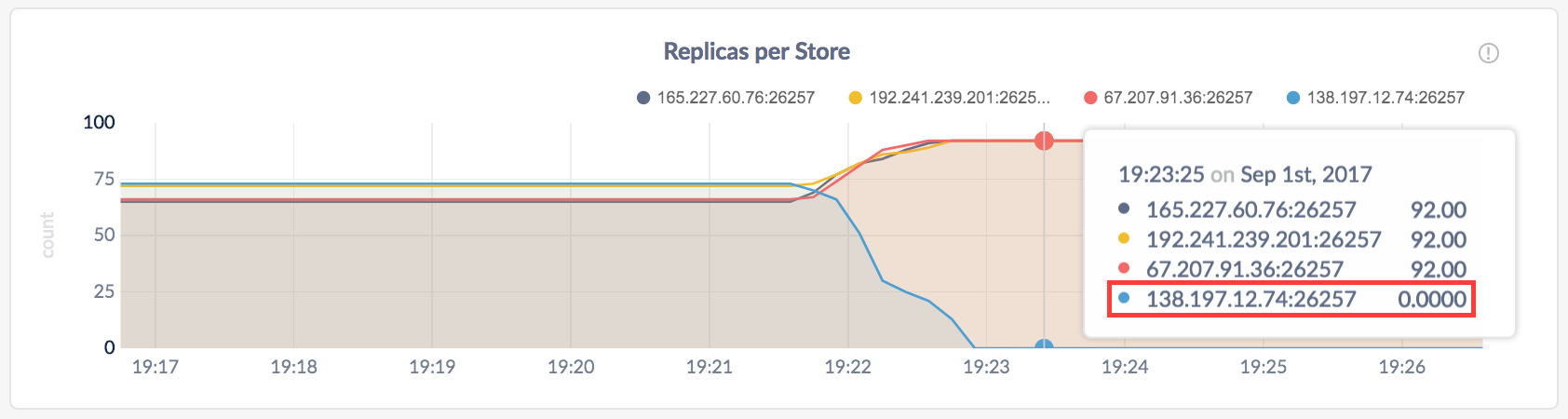

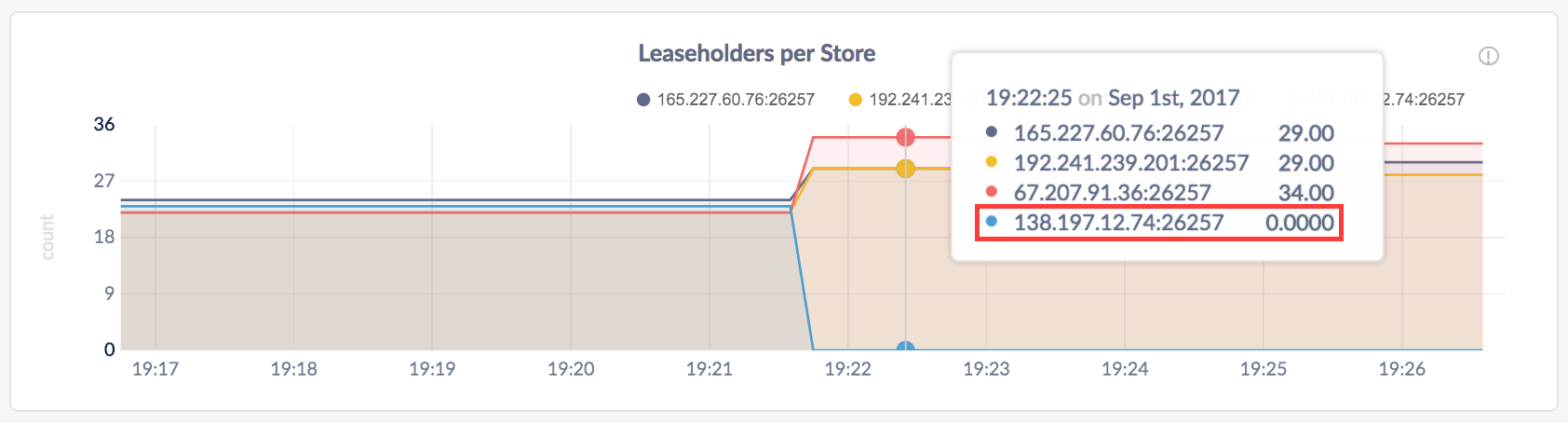

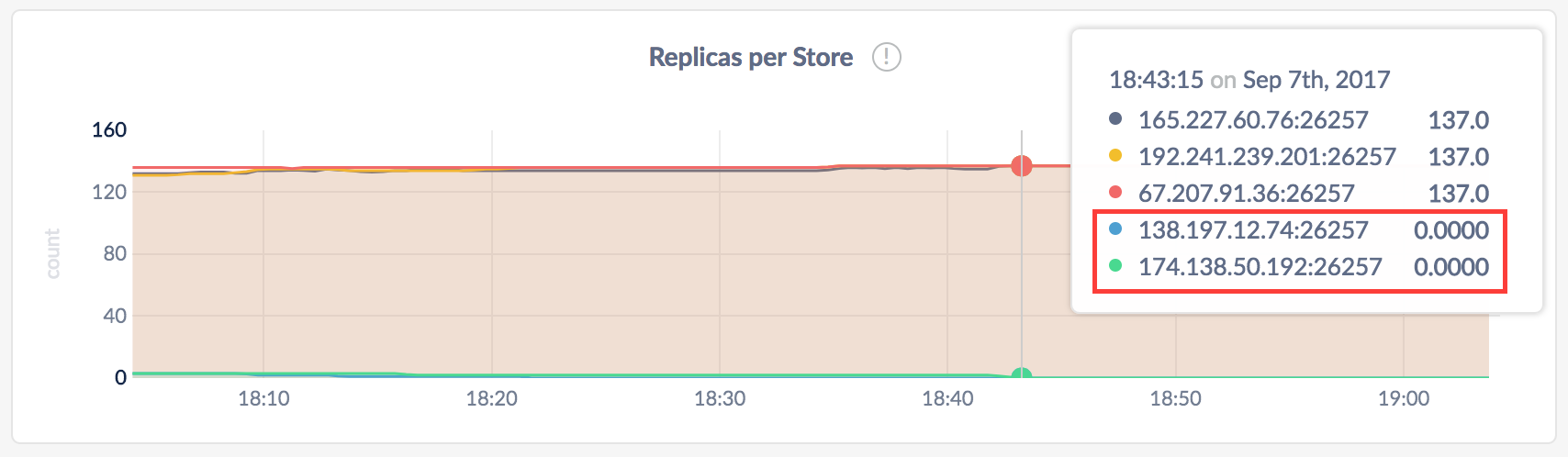

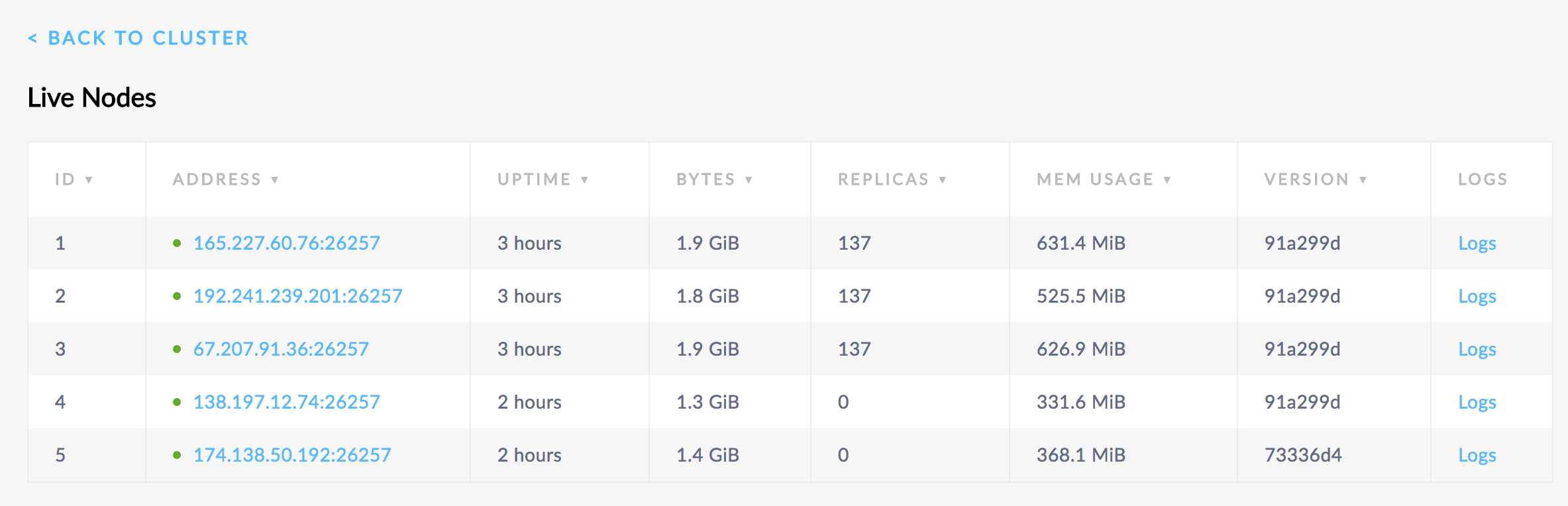

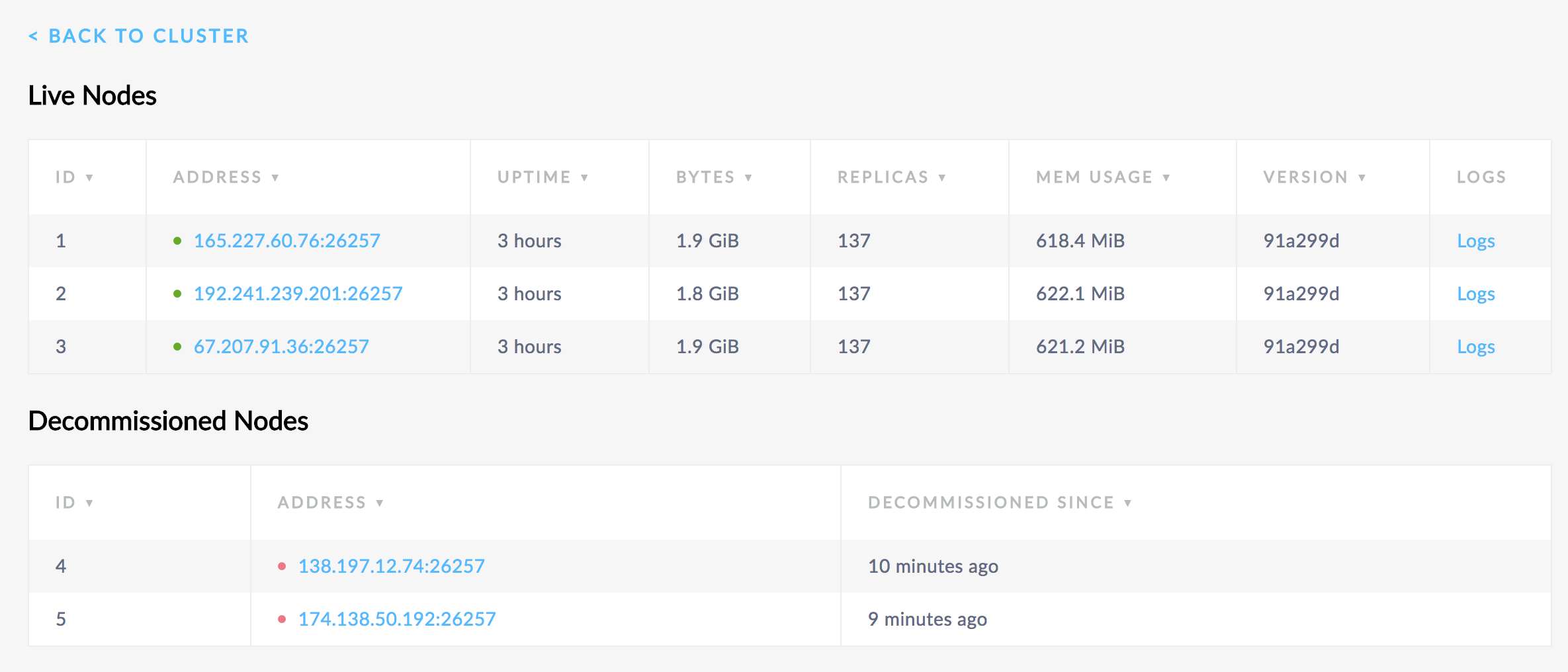

In the Admin UI, again hover over the Replicas per Store and Leaseholders per Store graphs. For the node that you decommissioned, the counts should be 0:

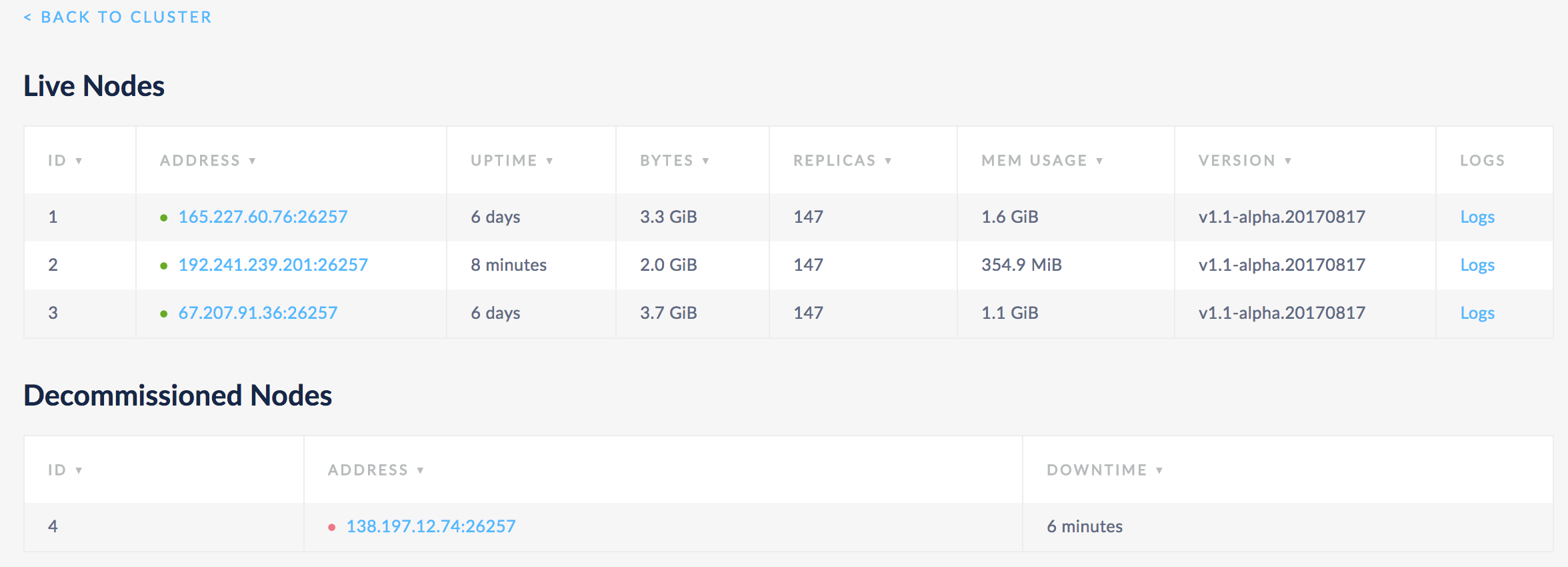

Then click View nodes list in the Summary area and make sure all nodes but the one you removed are healthy (green):

In about 5 minutes, you'll see the removed node listed under Decommissioned Nodes:

Remove a Single Node (Dead)

Once a node has been dead for 5 minutes, CockroachDB automatically transfers the range replicas and range leases on the node to available live nodes. However, if the node is later restarted, the cluster will rebalance replicas and leases to it.

To prevent the cluster from rebalancing data to a dead node if it comes back online, do the following:

Step 1. Identify the ID of the dead node

Open the Admin UI, click View nodes list in the Summary area, and note the ID of the node listed under Dead Nodes:

Step 2. Mark the dead node as decommissioned

SSH to any live node in the cluster and run the cockroach node decommission command with the ID of the node to officially decommission:

Be sure to include --wait=live. If not specified, this flag defaults to --wait=all, which will cause the node decommission command to hang indefinitely.

Secure cluster:

$ cockroach node decommission 4 --wait=live --certs-dir=certs --host=<address of live node>

Insecure cluster:

$ cockroach node decommission 4 --wait=live --insecure --host=<address of live node>

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | false   |                12 | true               | true        |
+----+---------+-------------------+--------------------+-------------+
(1 row)

Decommissioning finished. Please verify cluster health before removing the nodes.

If the node is ever restarted, it will be listed as Live in the Admin UI, but the cluster will recognize it as decommissioned and will not rebalance any data to it. If the node is then stopped again, it will be listed as Decommissioned in the Admin UI a short time later:
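The Admin UI behavior described above can be summarized as a small decision function over the is_live and is_decommissioning columns of the status table. This is a simplified Python sketch written for this page (admin_ui_list is a hypothetical name); it ignores the short delay before a stopped node moves to the Decommissioned list and is not taken from the Admin UI source.

```python
def admin_ui_list(is_live: bool, is_decommissioning: bool) -> str:
    """Which Admin UI node list a node appears under (simplified, hypothetical).

    Ignores the short delay before a stopped, decommissioned node is relisted.
    """
    if is_live:
        # A restarted decommissioned node is still listed as live,
        # even though the cluster will not rebalance data to it.
        return "Live Nodes"
    # A stopped node is either decommissioned or simply dead.
    return "Decommissioned Nodes" if is_decommissioning else "Dead Nodes"
```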

Remove Multiple Nodes

Before You Begin

Confirm that there are enough nodes to take over the replicas from the nodes you want to remove. See the example scenarios above.

Step 1. Identify the IDs of the nodes to decommission

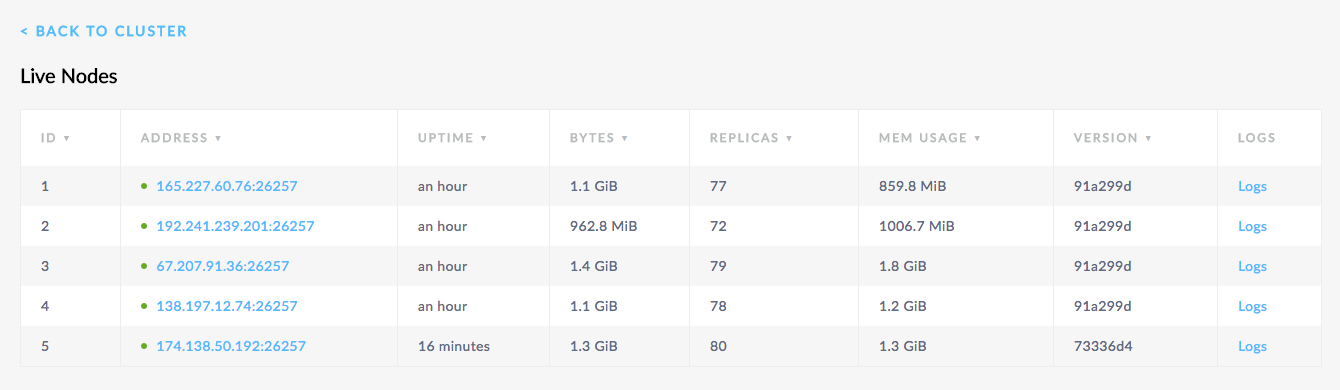

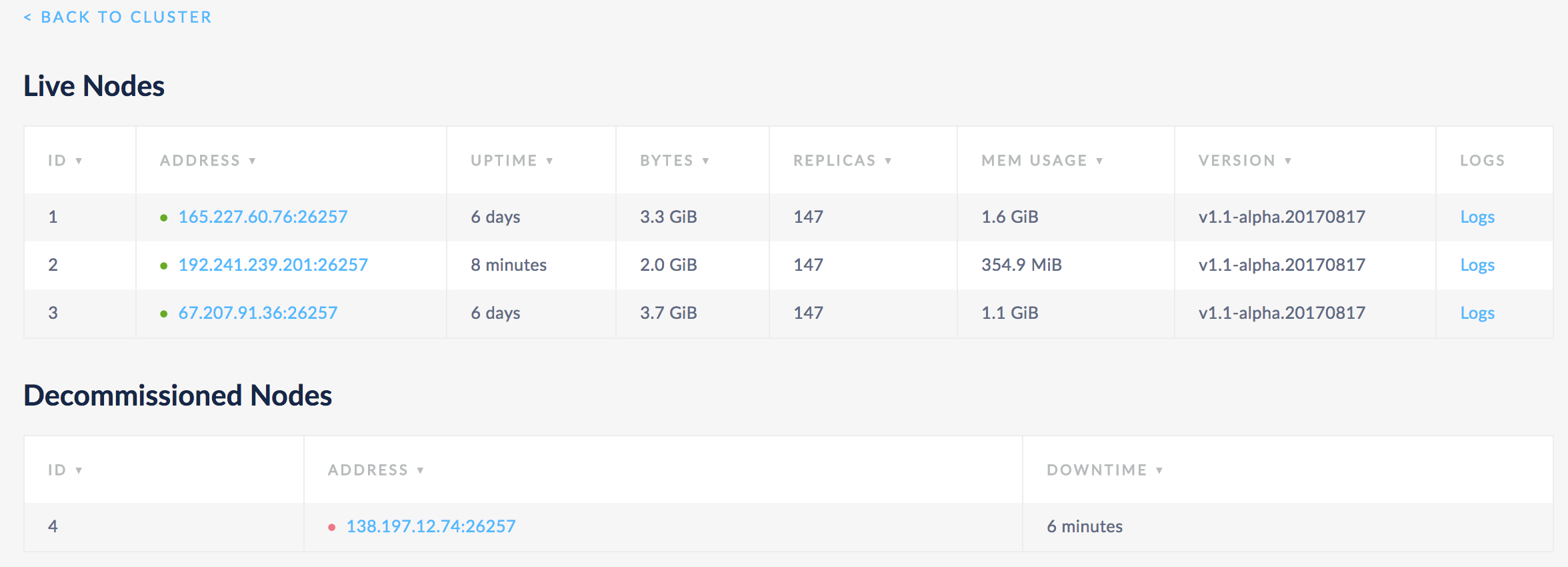

Open the Admin UI, click View nodes list in the Summary area, and note the IDs of the nodes that you want to decommission:

Step 2. Check the nodes before decommissioning

In the Admin UI, go to the Replication dashboard and hover over the Replicas per Store and Leaseholders per Store graphs:

Step 3. Decommission the nodes

SSH to any live node in the cluster and run the cockroach node decommission command with the IDs of the nodes to officially decommission:

Use --wait=live. This ensures that the command does not wait indefinitely for dead nodes to finish decommissioning.

Secure cluster:

$ cockroach node decommission 4 5 --wait=live --certs-dir=certs --host=<address of live node>

Insecure cluster:

$ cockroach node decommission 4 5 --wait=live --insecure --host=<address of live node>
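For multiple nodes, the only differences from the single-node command are the list of node IDs and the --wait flag. A hypothetical Python helper that builds this argument list, using only the flags shown above, might look like this:

```python
from typing import Iterable, List, Optional


def node_decommission_argv(
    node_ids: Iterable[int],
    host: str,
    certs_dir: Optional[str] = None,
    wait: str = "live",
) -> List[str]:
    """Build argv for `cockroach node decommission <ids...>` (hypothetical helper)."""
    ids = [str(i) for i in node_ids]
    if not ids:
        raise ValueError("at least one node ID is required")
    if wait not in ("all", "live"):
        # Only the two --wait values discussed on this page are accepted here.
        raise ValueError("wait must be 'all' or 'live'")
    argv = ["cockroach", "node", "decommission", *ids, f"--wait={wait}"]
    argv.append(f"--certs-dir={certs_dir}" if certs_dir else "--insecure")
    argv.append(f"--host={host}")
    return argv
```

Defaulting wait to "live" mirrors the advice above for multiple nodes; pass the result to subprocess.run to execute it.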

Every second or so, you'll then see the decommissioning status:

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                 8 | true               | false       |
|  5 | true    |                 9 | true               | false       |
+----+---------+-------------------+--------------------+-------------+
(2 rows)

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                 8 | true               | false       |
|  5 | true    |                 9 | true               | false       |
+----+---------+-------------------+--------------------+-------------+
(2 rows)

Once the nodes have been fully decommissioned, you'll see a confirmation:

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | true    |                 0 | true               | true        |
|  5 | true    |                 0 | true               | true        |
+----+---------+-------------------+--------------------+-------------+
(2 rows)

Decommissioning finished. Please verify cluster health before removing the nodes.

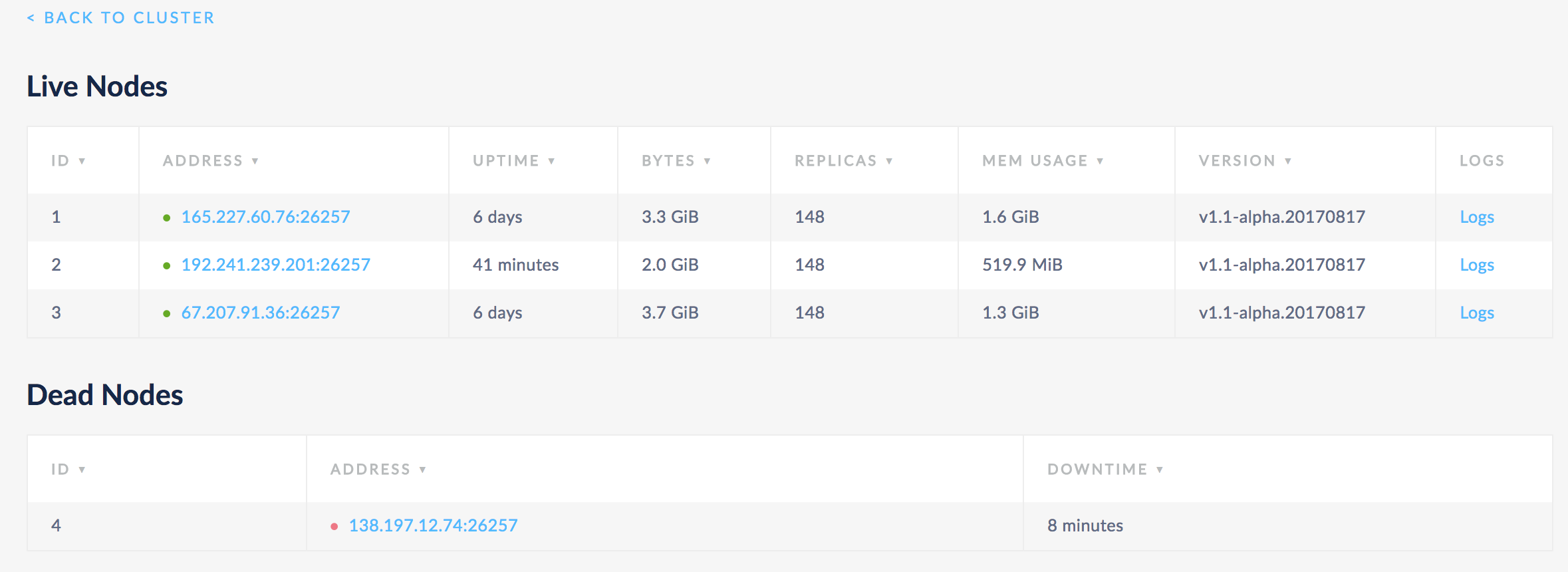

Step 4. Check the nodes and cluster after decommissioning

In the Admin UI, again hover over the Replicas per Store and Leaseholders per Store graphs. For the nodes that you decommissioned, the counts should be 0:

Then click View nodes list in the Summary area and make sure all nodes are healthy (green) and the decommissioned nodes have 0 replicas:

Step 5. Remove the decommissioned nodes

At this point, although the decommissioned nodes are live, the cluster will not rebalance any data to them, and the nodes will not accept any client connections. However, to officially remove the nodes from the cluster, you still need to stop them.

For each decommissioned node, SSH to the machine running the node and execute the cockroach quit command:

Secure cluster:

$ cockroach quit --certs-dir=certs --host=<address of decommissioned node>

Insecure cluster:

$ cockroach quit --insecure --host=<address of decommissioned node>

In about 5 minutes, you'll see the nodes listed under Decommissioned Nodes:

Recommission Nodes

If you accidentally decommissioned any nodes, or otherwise want decommissioned nodes to rejoin a cluster as active members, do the following:

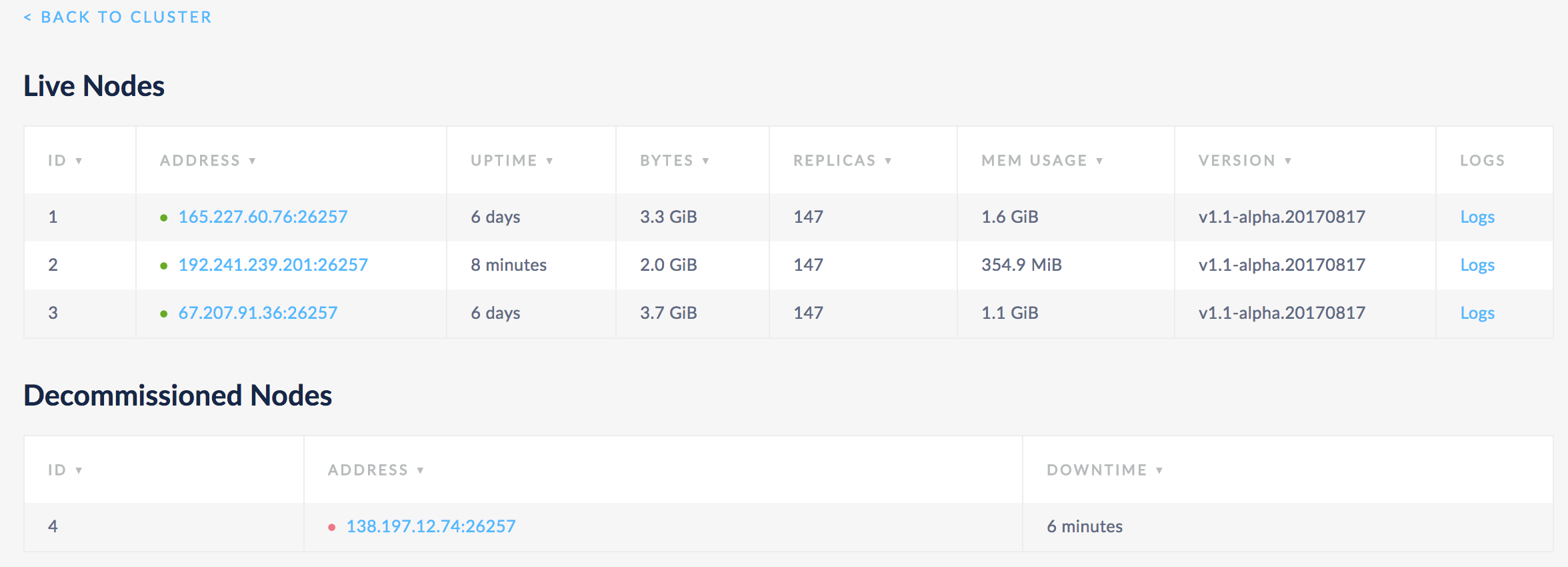

Step 1. Identify the IDs of the decommissioned nodes

Open the Admin UI, click View nodes list in the Summary area, and note the IDs of the nodes listed under Decommissioned Nodes:

Step 2. Recommission the nodes

SSH to one of the live nodes and execute the cockroach node recommission command with the IDs of the nodes to recommission:

Secure cluster:

$ cockroach node recommission 4 --certs-dir=certs --host=<address of live node>

Insecure cluster:

$ cockroach node recommission 4 --insecure --host=<address of live node>

+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  4 | false   |                12 | false              | true        |
+----+---------+-------------------+--------------------+-------------+
(1 row)

The affected nodes must be restarted for the change to take effect.

Step 3. Restart the recommissioned nodes

SSH to each machine with a recommissioned node and run the same cockroach start command that you used to initially start the node, for example:

Secure cluster:

$ cockroach start --certs-dir=certs --host=<address of node to restart> --join=<address of node 1>:26257 --background

Insecure cluster:

$ cockroach start --insecure --host=<address of node to restart> --join=<address of node 1>:26257 --background

In the Admin UI, click View nodes list in the Summary area. You should soon see the recommissioned nodes listed under Live Nodes and, after a few minutes, you should see replicas rebalanced to them.

Check the Status of Decommissioning Nodes

To check the progress of decommissioning nodes, you can run the cockroach node status command with the --decommission flag:

Secure cluster:

$ cockroach node status --decommission --certs-dir=certs --host=<address of any live node>

Insecure cluster:

$ cockroach node status --decommission --insecure --host=<address of any live node>

+----+-----------------------+---------+---------------------+---------------------+---------+-------------------+--------------------+-------------+
| id | address               | build   | updated_at          | started_at          | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+-----------------------+---------+---------------------+---------------------+---------+-------------------+--------------------+-------------+
|  1 | 165.227.60.76:26257   | 91a299d | 2017-09-07 18:16:03 | 2017-09-07 16:30:13 | true    |               134 | false              | false       |
|  2 | 192.241.239.201:26257 | 91a299d | 2017-09-07 18:16:05 | 2017-09-07 16:30:45 | true    |               134 | false              | false       |
|  3 | 67.207.91.36:26257    | 91a299d | 2017-09-07 18:16:06 | 2017-09-07 16:31:06 | true    |               136 | false              | false       |
|  4 | 138.197.12.74:26257   | 91a299d | 2017-09-07 18:16:03 | 2017-09-07 16:44:23 | true    |                 1 | true               | true        |
|  5 | 174.138.50.192:26257  | 91a299d | 2017-09-07 18:16:07 | 2017-09-07 17:12:57 | true    |                 3 | true               | true        |
+----+-----------------------+---------+---------------------+---------------------+---------+-------------------+--------------------+-------------+
(5 rows)
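To monitor decommissioning from a script, you can parse this table. The Python sketch below is an illustrative example, not an official parser: it assumes the pipe-delimited layout shown above, treats the first pipe-delimited row as the header, and reports node IDs that are decommissioning but still hold replicas. The function names are hypothetical, and SAMPLE is abbreviated from the output above (address, build, and timestamp columns dropped).

```python
from typing import Dict, List

SAMPLE = """\
+----+---------+-------------------+--------------------+-------------+
| id | is_live | gossiped_replicas | is_decommissioning | is_draining |
+----+---------+-------------------+--------------------+-------------+
|  3 | true    |               136 | false              | false       |
|  4 | true    |                 1 | true               | true        |
|  5 | true    |                 3 | true               | true        |
+----+---------+-------------------+--------------------+-------------+
(3 rows)
"""


def parse_status_table(text: str) -> List[Dict[str, str]]:
    """Parse a pipe-delimited status table into one dict per node row."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip borders (+---+), blank lines, and the "(N rows)" footer
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    header, *body = rows  # first pipe-delimited row holds the column names
    return [dict(zip(header, row)) for row in body]


def still_decommissioning(rows: List[Dict[str, str]]) -> List[int]:
    """IDs of nodes that are decommissioning but still report gossiped replicas."""
    return [
        int(row["id"])
        for row in rows
        if row["is_decommissioning"] == "true" and int(row["gossiped_replicas"]) > 0
    ]


print(still_decommissioning(parse_status_table(SAMPLE)))  # [4, 5]
```

Because the parser keys rows by header name, the same functions also work on the wider table above, which includes the address, build, and timestamp columns.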